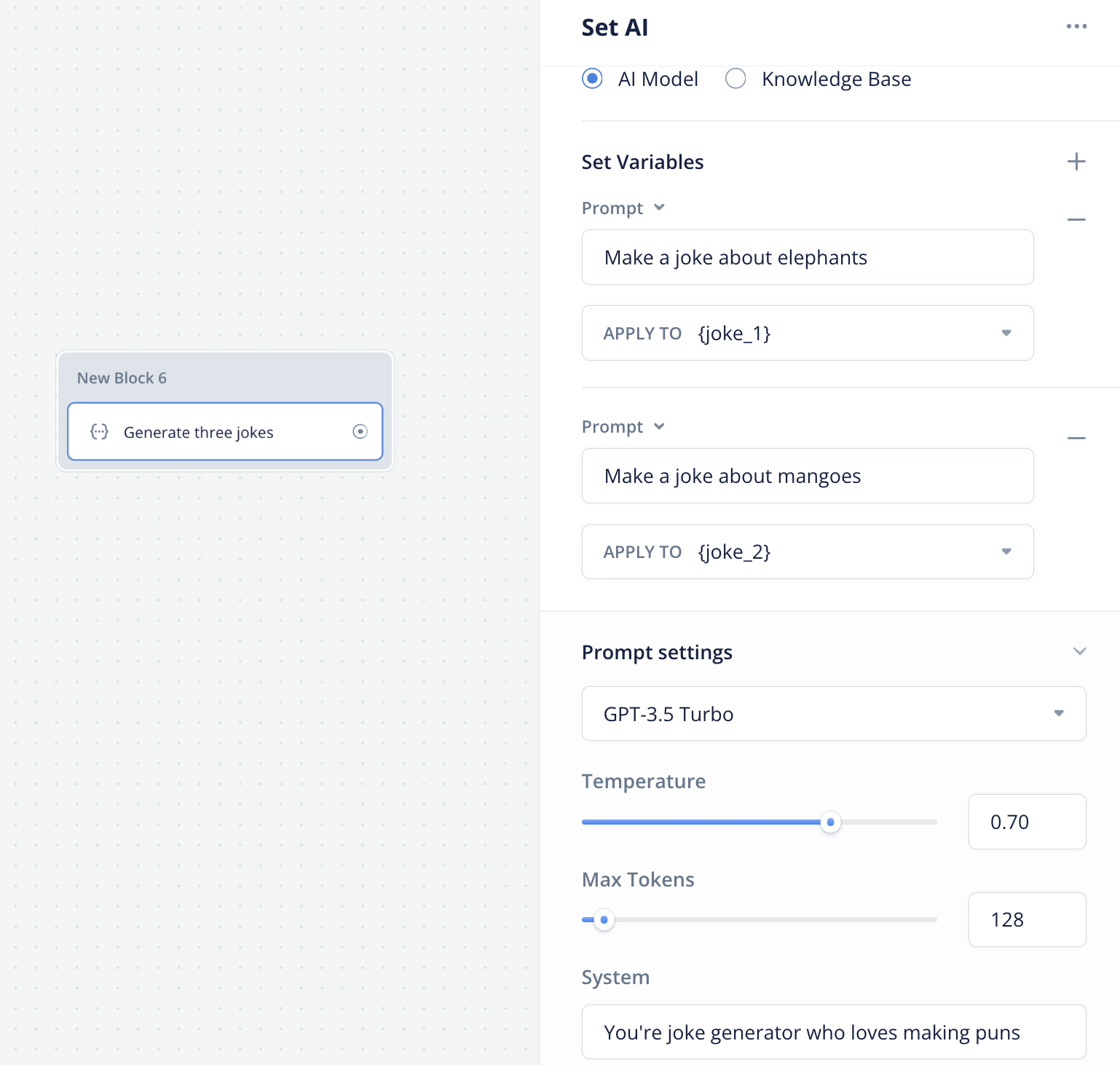

Set AI step

We encourage all users to use the prompt type in the Set step moving forward to perform dynamic variable setting.

The Set AI step lets you leverage the power of LLMs like GPT-4 for reasoning and prompt chaining.

Response or Set AI?

- Response AI: This displays the AI response directly to the customer. You can select either the Knowledge Base or the AI model directly as the data source. It supports Markdown formatting. You can learn more about it here.

- Set AI: This saves the AI response to a variable, so the user never sees it. Mastering the Set AI step is key to developing an advanced agent, as it allows you to do prompt chaining.

Both steps can either use a model directly or respond using your Knowledge Base.

Configuring your Set AI step

Since the prompt part of the Set AI step is almost identical to the Response AI step, you can see its documentation here.

The only important difference is that you can run multiple sets in parallel (at the same time) within a single Set AI step. They all share the same system prompt and model, but can have different instructions. Because they run at the same time, you shouldn't feed the output of one set into another set in the same step; use a separate, later Set AI step for that.
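To see why parallel sets cannot depend on each other, here is a simplified model in JavaScript. It is not Voiceflow's implementation: `render`, `runParallelSets`, and `echoLLM` are hypothetical names, and the sketch assumes that every prompt is filled in from the same variable values before any result is stored.

```javascript
// Replace {name} placeholders with values from a variables object.
function render(template, variables) {
  return template.replace(/\{(\w+)\}/g, (whole, name) =>
    name in variables ? String(variables[name]) : whole
  );
}

// Simplified model of one Set AI step that holds several parallel sets.
// Assumption: all prompts are rendered before any output is saved.
function runParallelSets(variables, sets, llm) {
  const prompts = sets.map((set) => render(set.prompt, variables));
  const outputs = prompts.map((prompt) => llm(prompt));
  const updated = { ...variables };
  sets.forEach((set, i) => {
    updated[set.target] = outputs[i];
  });
  return updated;
}

// Hypothetical stub: echoes the prompt so the rendered text is visible.
const echoLLM = (prompt) => `LLM(${prompt})`;

const after = runParallelSets(
  { last_utterance: "hi", summary: "" },
  [
    { target: "summary", prompt: "Summarize: {last_utterance}" },
    // This set still sees the old, empty value of {summary}.
    { target: "mood", prompt: "Mood of: {summary}" },
  ],
  echoLLM
);

console.log(after.summary); // LLM(Summarize: hi)
console.log(after.mood); // LLM(Mood of: )
```

Under this assumption, the second set never sees the summary produced by the first, which is why dependent prompts belong in separate steps.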

Prompt chaining

Prompt chaining is a method for getting an AI to follow a chain of thought: the output of one LLM call is passed into the input of another, wrapped in a new prompt.

Prompt chaining is easy to do in Voiceflow with a chain of Set AI steps.

This can be done to reduce hallucinations, for example, by getting the AI to double-check itself and write corrections if necessary. For a great example of prompt chaining, see the video below.
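As a rough illustration of the double-check pattern, the sketch below chains two calls in plain JavaScript. It is only a sketch: `stubLLM` is a hypothetical stand-in for a model call (in Voiceflow, each call would be its own Set AI step saving to a variable), and the prompt wording is invented for the example.

```javascript
// Hypothetical stand-in for a model call so the sketch runs on its own.
function stubLLM(prompt) {
  if (prompt.startsWith("Review")) {
    return "Corrected answer: Paris is the capital of France.";
  }
  return "Draft answer: Lyon is the capital of France.";
}

function chainWithSelfCheck(question, llm) {
  // Step 1: the first call produces a draft, saved to a variable.
  const draft = llm(`Answer the question.\n\nQuestion: ${question}`);

  // Step 2: the draft is wrapped in a new prompt and sent to a second call.
  const reviewed = llm(
    "Review the draft answer for mistakes and write a corrected answer.\n\n" +
      `Question: ${question}\nDraft: ${draft}`
  );

  return { draft, reviewed };
}

const result = chainWithSelfCheck("What is the capital of France?", stubLLM);
console.log(result.reviewed); // Corrected answer: Paris is the capital of France.
```

The customer would only ever see `reviewed`, and only if a later step displays it; the draft stays internal.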

Output parsing

Sometimes we might want to use an LLM to drive the logic inside our agent, like choosing a path to go down or selecting an option from a list. A prompt (from our Unity NPC demo) could look like:

Based off the message, choose an appropriate face sprite from the list for the character who is speaking it to have on. Be emotive and like an actor, and be very creative in choosing, and lean into the Climber's emotions.

#CHOICES

normal

distracted

angry

panic

sad

suprised

upset

phone

#CLIMBER MESSAGE YOU'RE REACTING TO

{last_utterance}

#REACTION MESSAGE TO CLASSIFY

{split_sentence}

#INSTRUCTION

Simply answer back with the choice from the list. For example, in response to talking about a gift, you respond: suprised.

Now take a deep breath. Do not answer with anything else other than the choice.

#CHOICE

We want to get only the choice back, but the LLM might answer with something like "The face to choose is: normal", even though we told it not to.

In this case, you should do some parsing on the LLM's output. Effective methods include:

- Check whether the output includes one of a set of expected options. If you're looking for 0 or 1, instead of checking that the output is equal to “0”, check whether it contains “0”.

- Ask the LLM to wrap its answer in predictable characters. Often, using backticks (`) is effective. You can then use a JavaScript step to extract the string between the backticks.
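Both methods can be sketched in a few lines of JavaScript. This is a minimal example, not an official snippet: `findChoice` and `extractBackticked` are hypothetical helper names, and the option list is copied from the #CHOICES section of the prompt above, keeping its spelling.

```javascript
// Expected options, spelled exactly as in the prompt's #CHOICES list.
const CHOICES = [
  "normal", "distracted", "angry", "panic",
  "sad", "suprised", "upset", "phone",
];

// Method 1: return the first option (in list order) that appears anywhere
// in the output, or the fallback if none is found.
function findChoice(output, choices, fallback = null) {
  const text = output.toLowerCase();
  const match = choices.find((choice) => text.includes(choice.toLowerCase()));
  return match === undefined ? fallback : match;
}

// Method 2: return the text between the first pair of backticks,
// or null if the output contains no such pair.
function extractBackticked(output) {
  const match = output.match(/`([^`]+)`/);
  return match ? match[1].trim() : null;
}

console.log(findChoice("The face to choose is: normal", CHOICES)); // normal
console.log(extractBackticked("The face to choose is: `angry`.")); // angry
```

Substring matching is forgiving but can misfire when one option is contained in another word, so passing a sensible fallback (for example `"normal"`) keeps the agent on a known path when nothing matches.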
