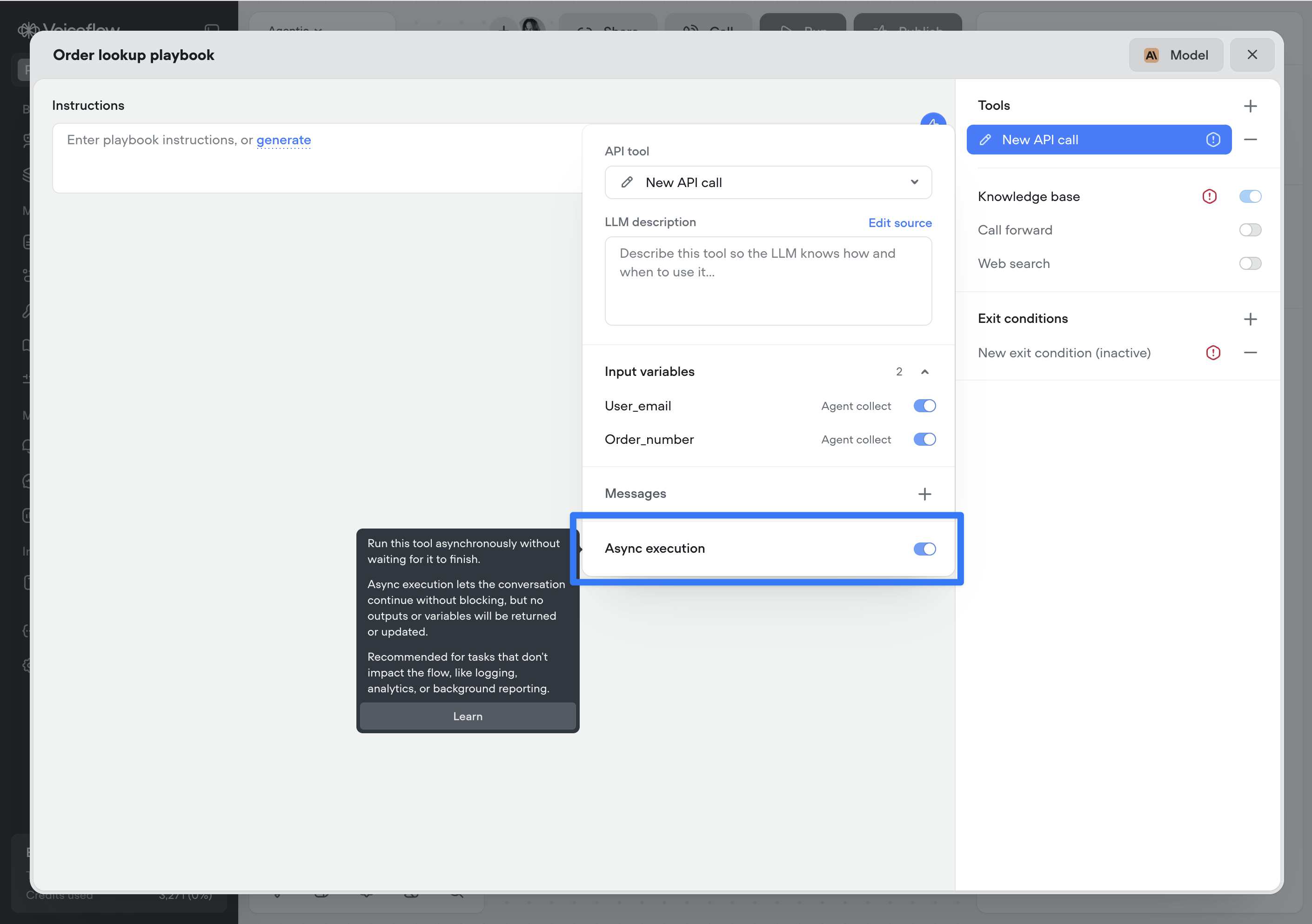

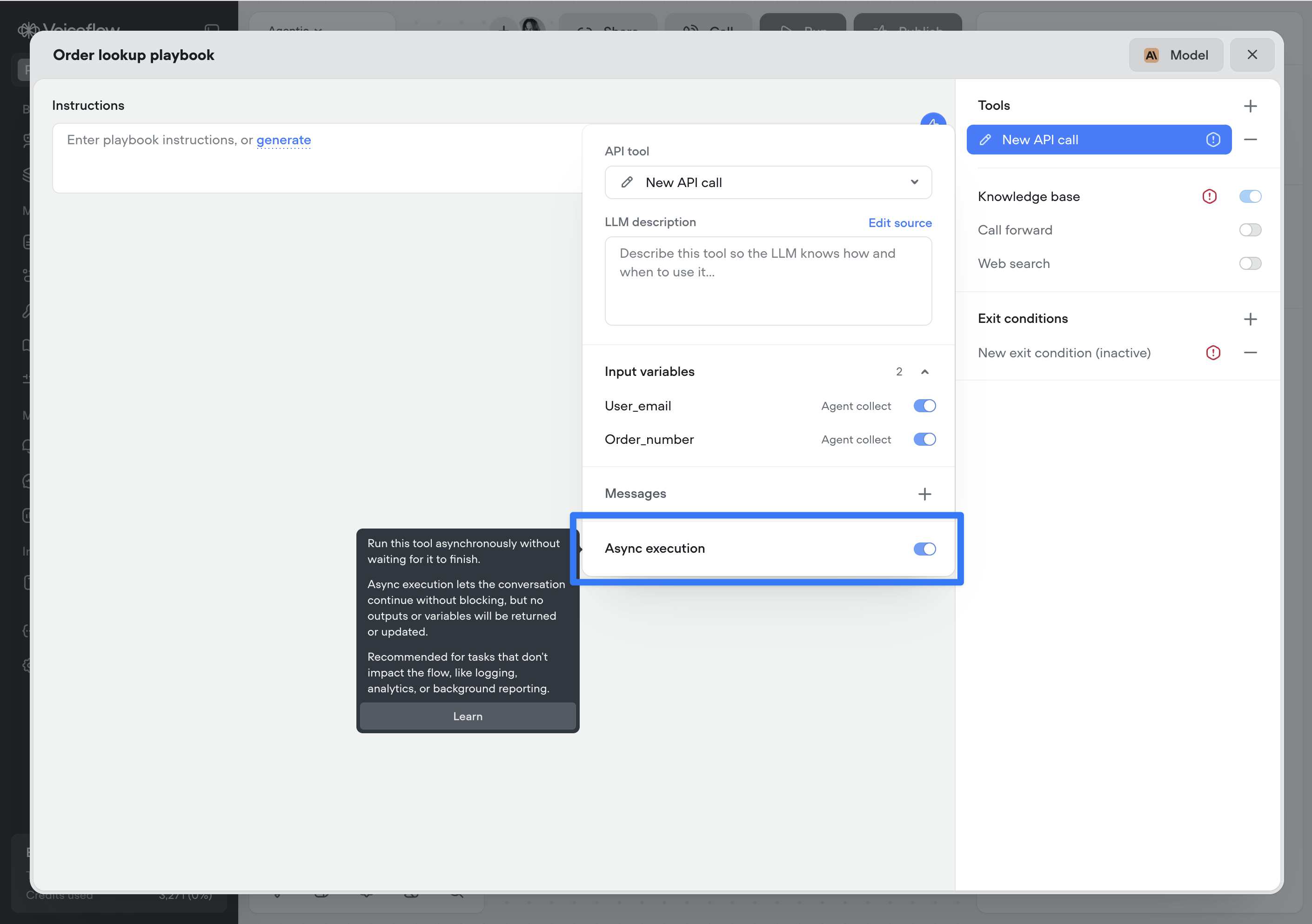

You can now run Function and API tool steps asynchronously.

Async execution lets the conversation continue immediately, without waiting for the tool to complete. Because the step doesn't wait, it returns no outputs and updates no variables.

This is ideal for non-blocking tasks such as logging, analytics, telemetry, or background reporting, where the conversation doesn't depend on the result.

Note: This setting applies to each reference to a Function or API tool, either where the tool is attached to an agent or where it's used as a step on the canvas. It isn't part of the underlying API or function definition, so you can reuse the same tool with different async behaviour throughout your project.