Prompt step

Use AI to generate a single message inside a workflow.

The Prompt step allows your agent to generate responses to a user's message using an LLM. It’s useful for injecting AI into a specific point in your flow, such as generating product suggestions, summarizing information, or rephrasing user input. Each time the prompt step is used, it will generate a single response.

When to use the Prompt step

The prompt step is only recommended for specific situations. If you're looking to build a fully agentic conversational agent, we recommend using the Agent step instead. If you'd like to set a variable to the result of a prompt, use the Set step.

Here are some situations where the Prompt step might be a good fit:

- Searching the Knowledge Base using the KB Search step, then summarizing the output using a prompt step. This should only be done if the Agent step's built in Knowledge Base search doesn't meet your needs.

- Adding LLM-generated responses to a deterministic flow. For example, if you have a calculator that must follow specific steps in order for compliance reasons, you could build these with the Set step and Condition step, then use the Prompt step to summarize the result.

Basic usage

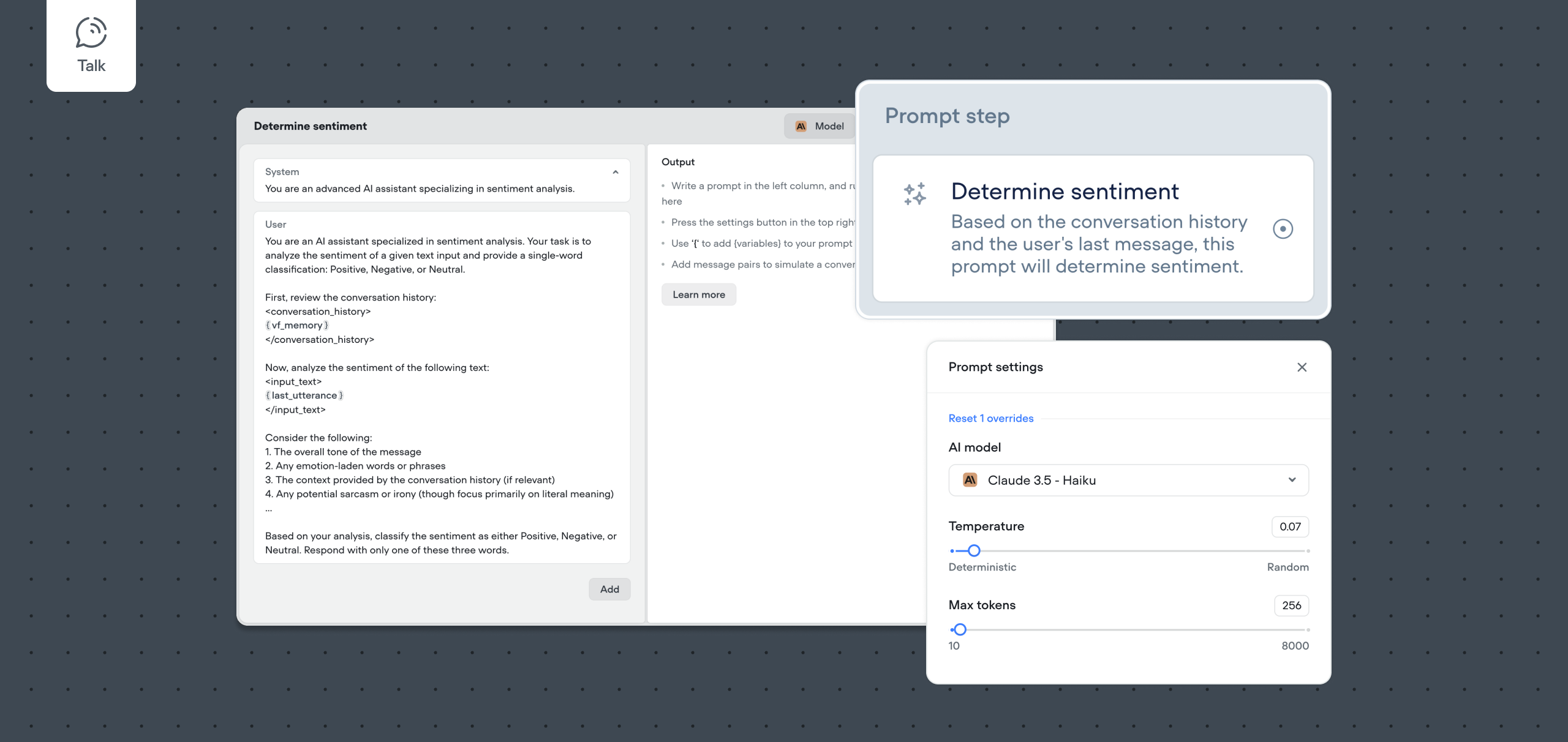

To use the prompt step, add it to the canvas, connect it to a previous step, then select the prompt you'd like to use with it. If you don't already have a prompt created in your project, you'll be prompted (😉) to create one. We recommend using the templates provided at the bottom of the prompt window as a starting point.

Advanced usage

After you've created a prompt, you can optionally change the model (LLM), temperature, and max tokens settings that should be followed by this step. You can also test the prompt from within the prompt window.

We recommend experimenting with different settings prior to launching your agent into production. Please note that different models cost a varying number of credits - learn more on our pricing page.

Updated 8 months ago