LLM fallback

Maximize agent availability by configuring secondary LLMs to take over when primary LLMs suffer an outage.

Teams and Enterprise FeatureThis feature is exclusive to Voiceflow workspaces with the Teams or Enterprise plan.

Overview

The LLM Fallback is a realtime monitoring system that detects potential outages from 3rd-party LLM providers (such as OpenAI) and substitutes calls to unavailable LLMs for calls to alternative LLMs.

The purpose of this system is to maximize the availability of Voiceflow agents. It allows users to configure redundant, secondary models that will take over the handling of LLM-related functionality in case the main models are suffering an outage.

Usage

Navigating to fallback configuration

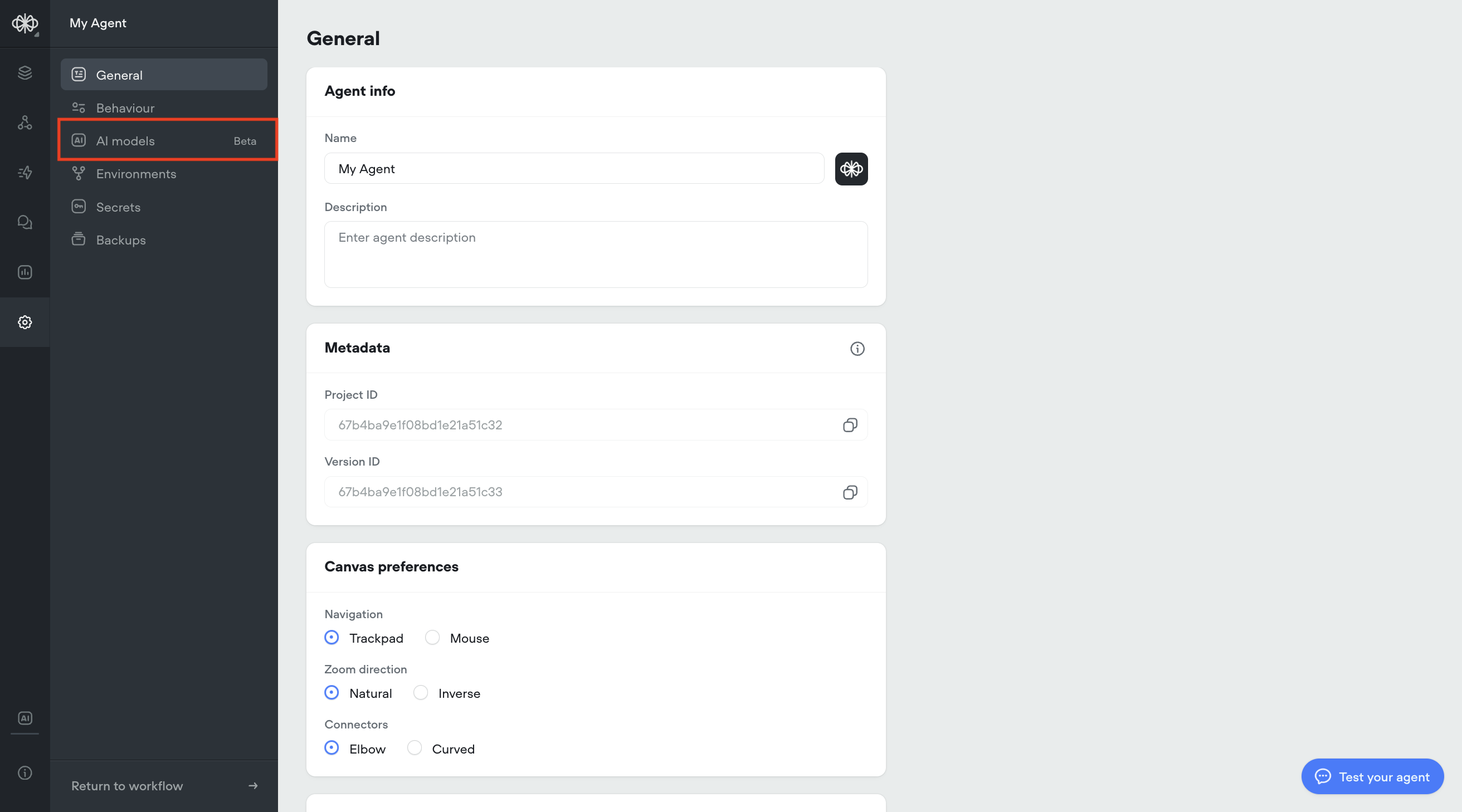

To use the LLM fallback, we must first create a fallback configuration to specify what fallback models to use. The fallback configuration can be set by navigating to the AI models page by following these steps:

-

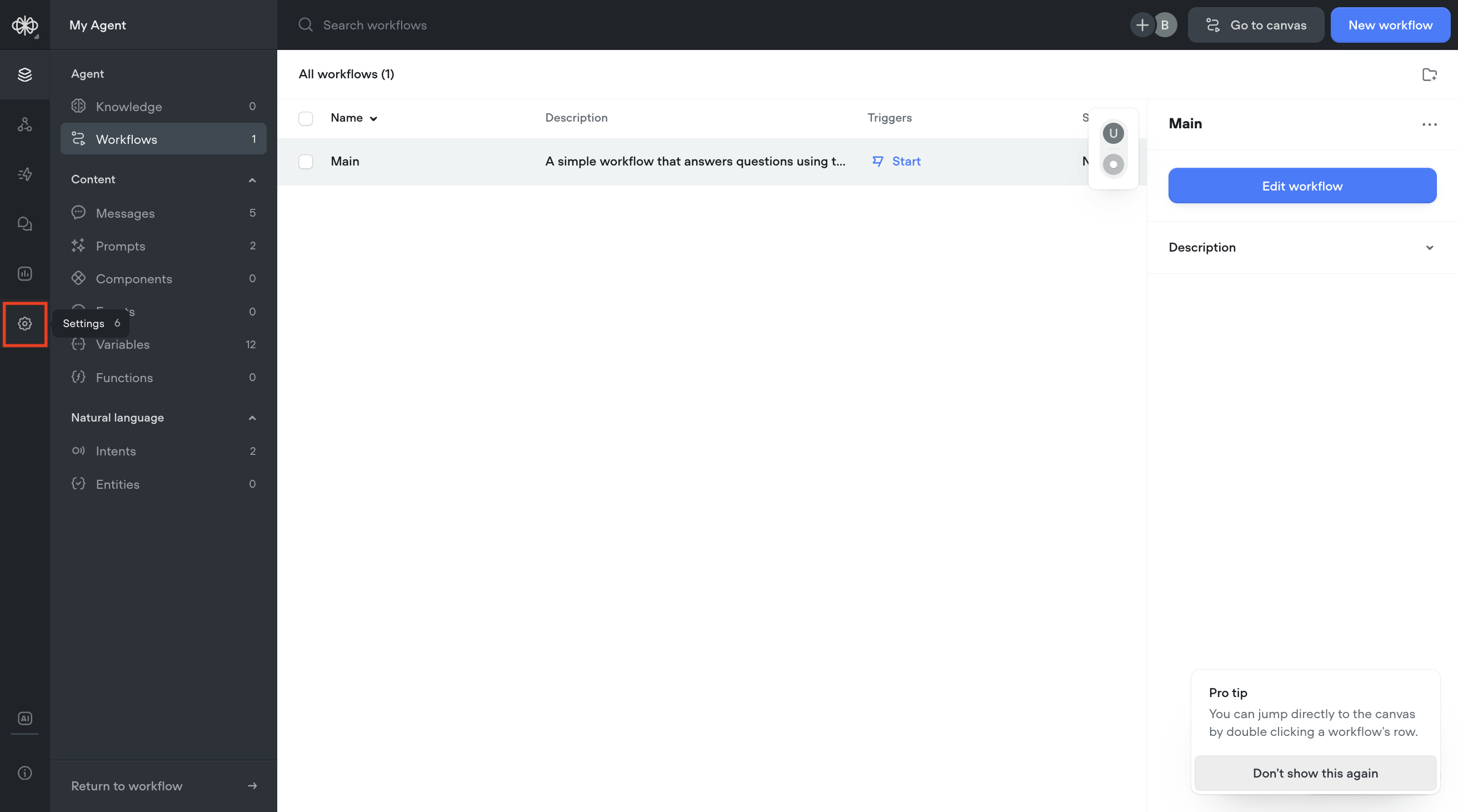

Open the Content Management System (CMS) view of your agent and navigate to Settings (red box)

-

In Settings, navigate to AI models

-

You should arrive at the AI modelspage shown below.

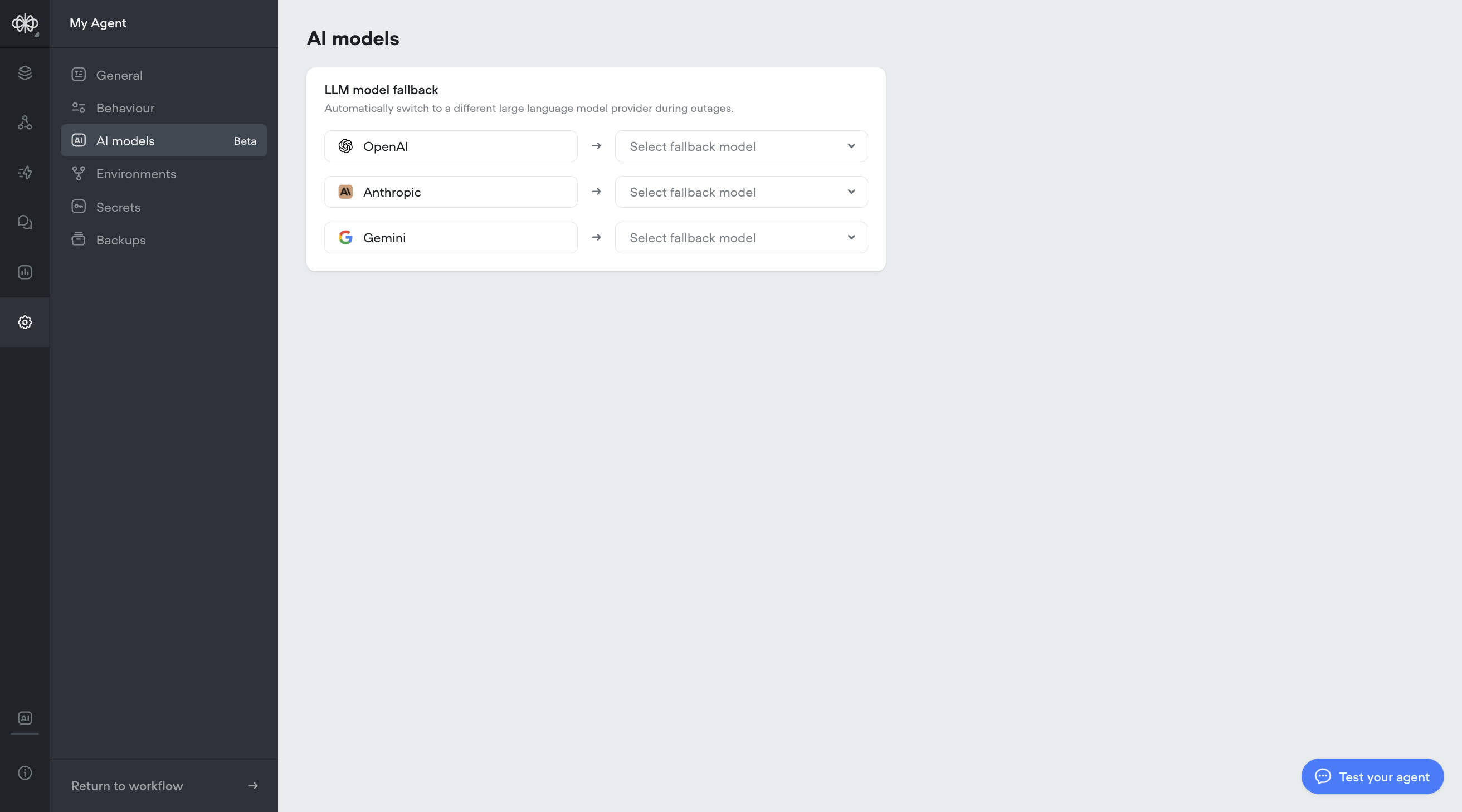

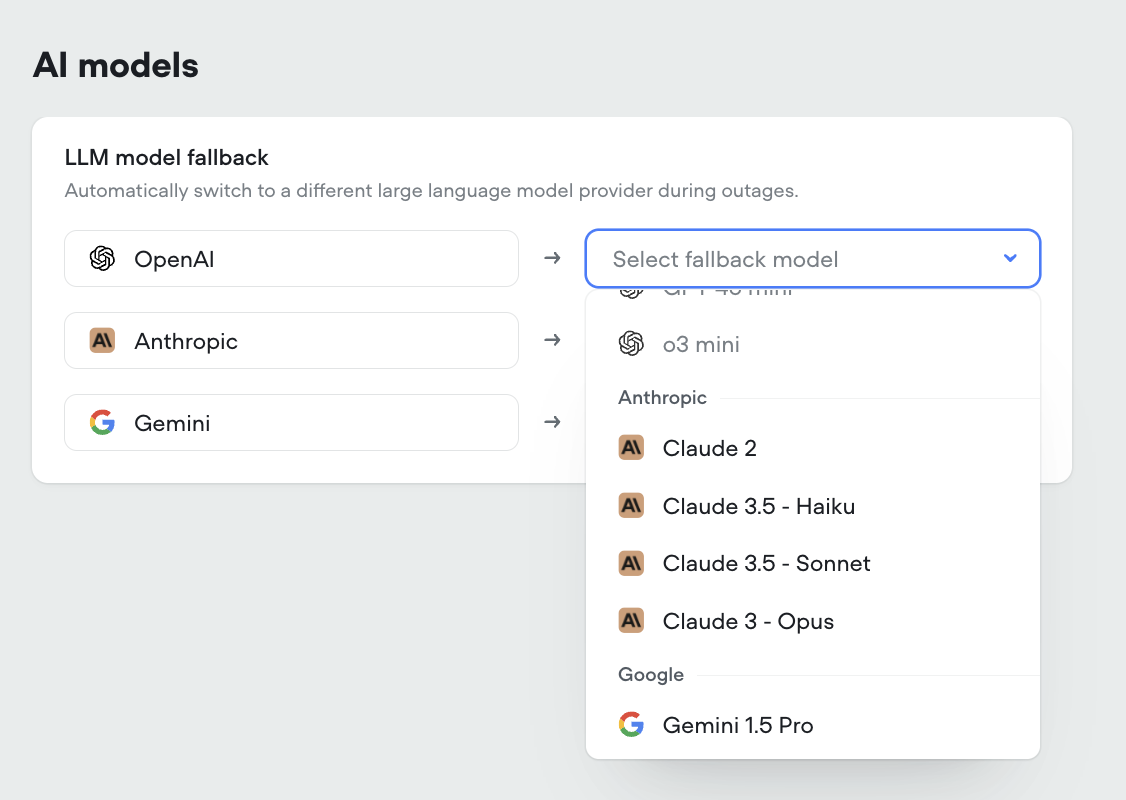

Configuring a fallback configuration

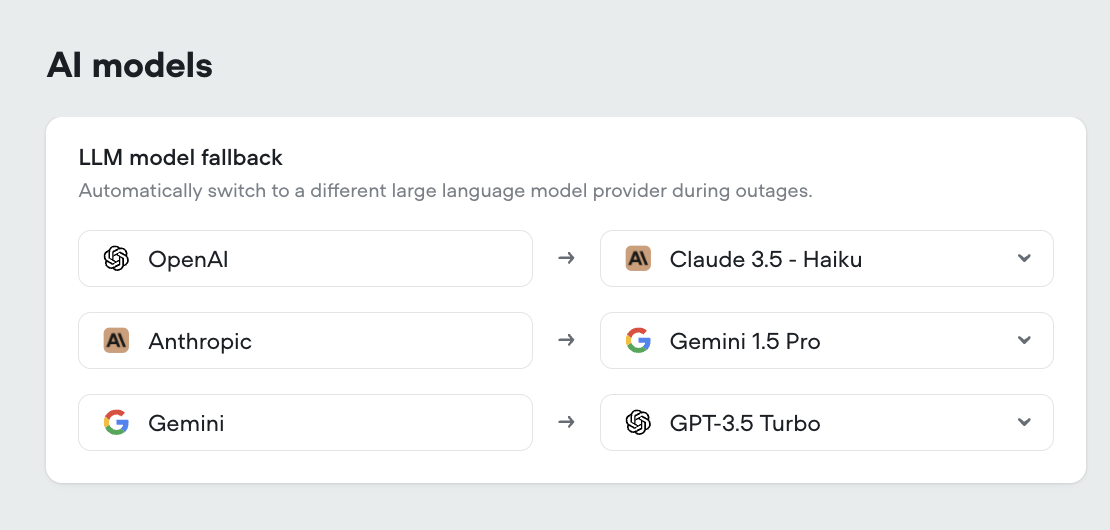

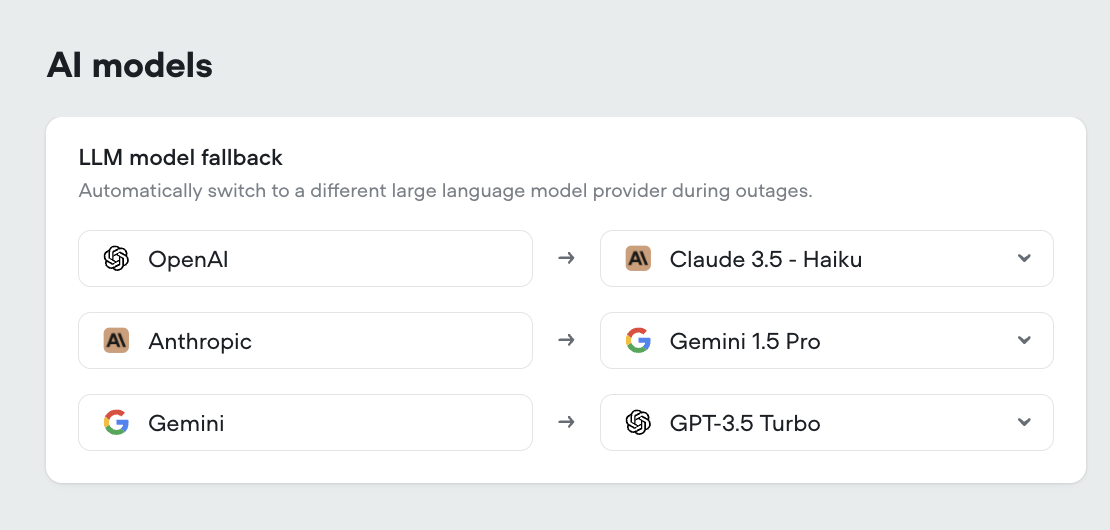

Configuring a fallback configuration is straightforward. For each 3rd-party LLM provider (OpenAI, Anthropic, and Gemini), you can set a fallback model by opening the dropdown on an input in the right column and selecting a model from the list.

As an example, in the image below, we have configured Claude 3.5 Haiku as the fallback for any OpenAI model, Gemini 1.5 Pro as the fallback for any Anthropic model, and GPT-3.5 Turbo as the fallback for any Google model.

Fallback in action

Once a fallback configuration is set, then LLM fallback is automatically enabled.

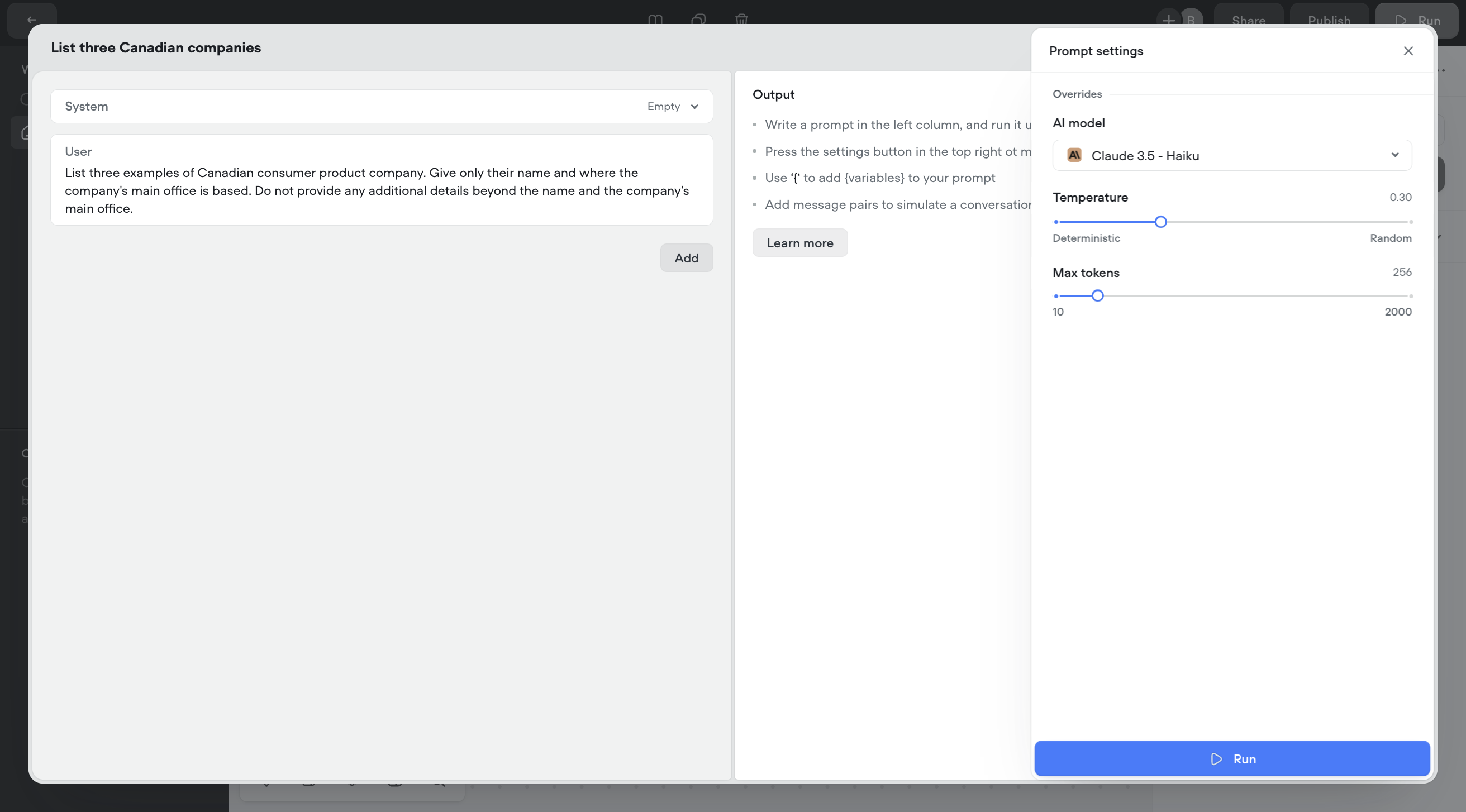

While LLM fallback is enabled and no outages occur, your Voiceflow agent will behave exactly as before. For example, consider a project with a single Prompt step with the following prompt which is configured to use Claude 3.5 - Haiku as its LLM.

When we interact with the agent, it will behave as expected. Here our agent lists out three Canadian companies.

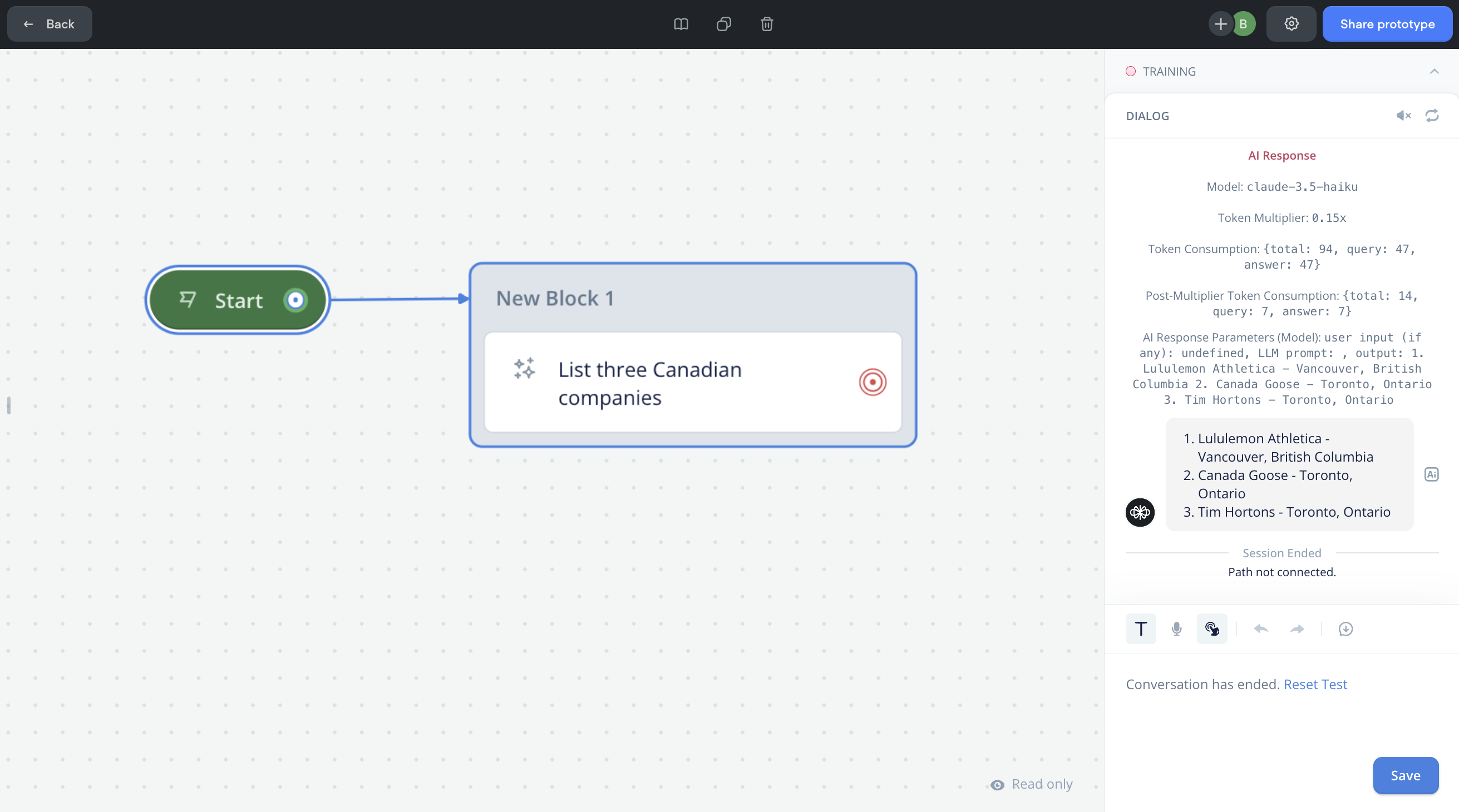

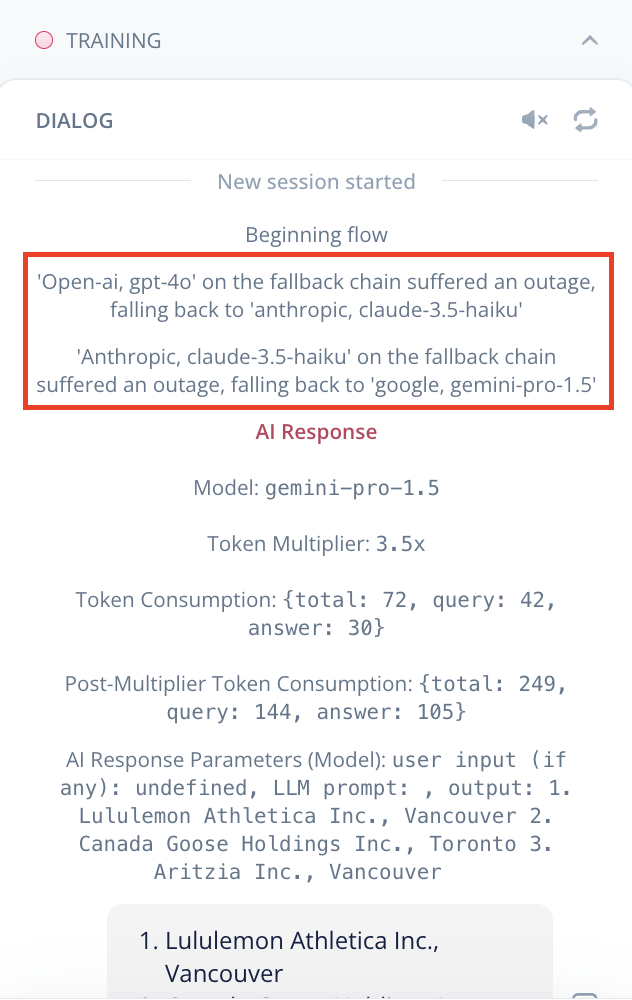

Imagine now that Anthropic is suffering an outage and so Claude 3.5 - Haiku is unavailable. In the previous section, we set the fallback model of Claude 3.5 - Haiku to be Gemini 1.5 Pro. When we execute the Prompt step during this outage, the agent will continue to work properly because after detecting that Claude 3.5 - Haiku is unavailable, it sends a request to Gemini 1.5 Pro to handle our request instead.

This behaviour is logged as a debug trace with the text "'Anthropic, claude-3.5-haiku' on the fallback chain suffered an outage, falling back to 'google, gemini-pro-1.5'" on the Prototype Tool:

Thus, as we can see, LLM fallback increases the availability of Voiceflow agents through fallback configurations providing redundant models to fallback on.

Fallback and non-outage errorsLLM fallback only triggers if an LLM fails for an outage-related reason, that is, the 3rd-party LLM provider responded with an error which Voiceflow's outage detection system classifies as an outage.

In practice, this includes nearly all errors from LLMs, but on the rare occasion that an error is classified as a non-outage, it is treated as user error that you as the Voiceflow user need to correct.

For non-outages, Voiceflow agents do not perform LLM fallback at all, as the cause of the issue is not the unavailability of 3rd-party LLM providers, but instead a problem with the Voiceflow agent's design, and thus, user action is required to fix the agent as this is not an error scenario the fallback is meant to handle.

Interpreting a fallback configuration

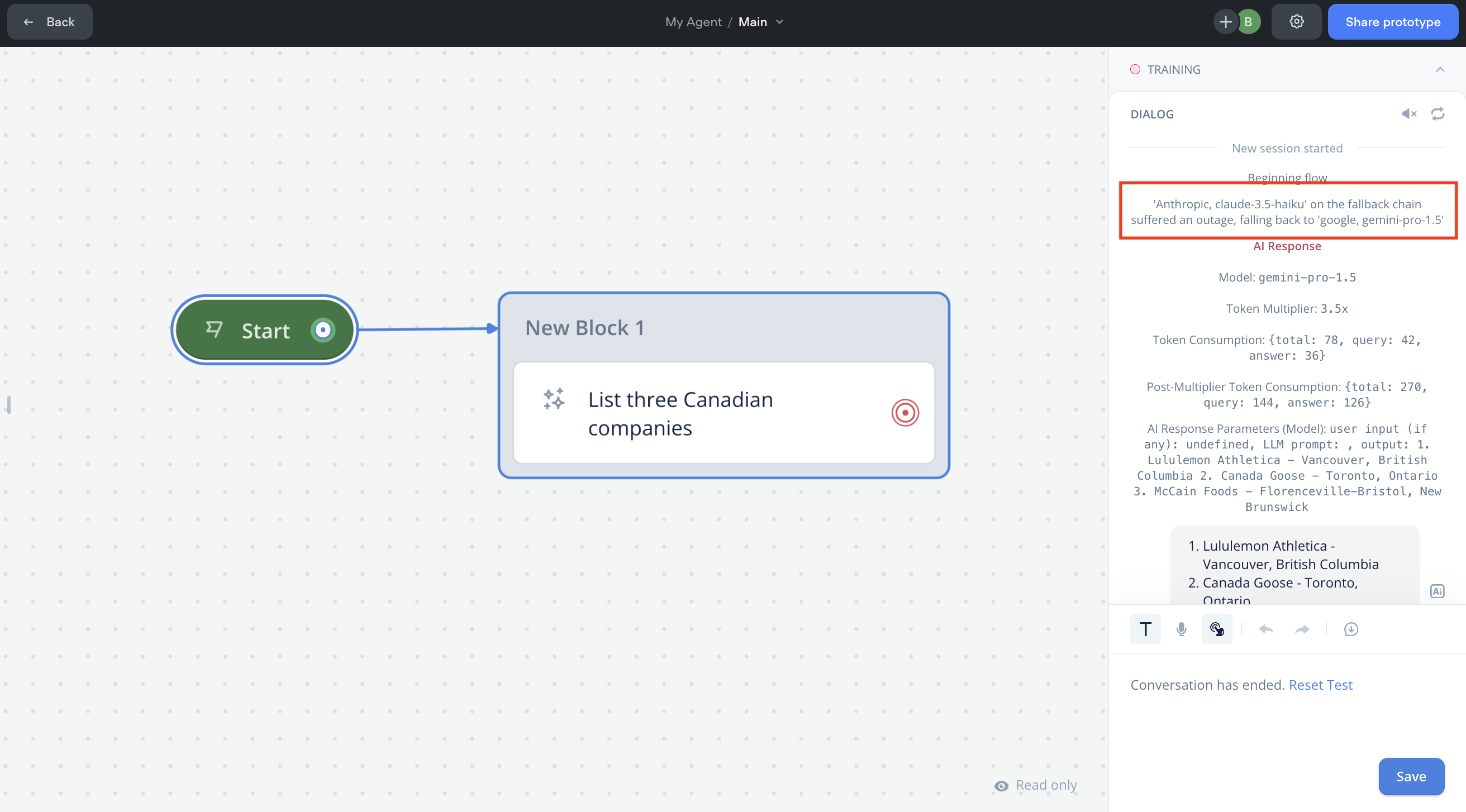

In this section, we will describe the complete behaviour of the LLM fallback system. Recall that in previous sections, we set the following fallback configuration:

This fallback configuration can be interpreted as meaning the following:

- If any OpenAI model (GPT-3.5-Turbo, GPT-4o, etc) suffers an outage, then fall back to Claude 3.5 - Haiku

- If any Anthropic model (Claude 3.5 - Haiku, Claude 3.5 - Sonnet, etc) suffers an outage, then fall back to Gemini 1.5 - Pro

- If any Google model (Gemini 1.5 Pro) suffers an outage, fall back to GPT-3.5 Turbo

Note that fallbacks cascade, meaning that if a fallback model is itself suffering an outage, then we fallback again onto another model determined by the fallback configuration. This repeats until an available model is found or all fallback models are exhausted.

For example, imagine if a Prompt step called GPT-4o (an OpenAI model), but OpenAI's and Anthropic services are suffering an outage. Therefore, GPT-4o has configured Claude 3.5 - Haiku to be its fallback model, but Claude 3.5 - Haiku is itself unavailable and act in place of GPT-4o. In this situation, the LLM fallback system will behave as follows:

- Detected that GPT-4o (OpenAI) is suffering an outage, try to fallback to Claude 3.5 - Haiku

- Detected that Claude 3.5 - Haiku (Anthropic) is suffering an outage, try to fallback to Gemini 1.5 Pro

- Gemini 1.5 Pro is available and will be used to generate the LLM output.

In the Voiceflow Prototype Tool, you will see a debug trace reflecting this behaviour:

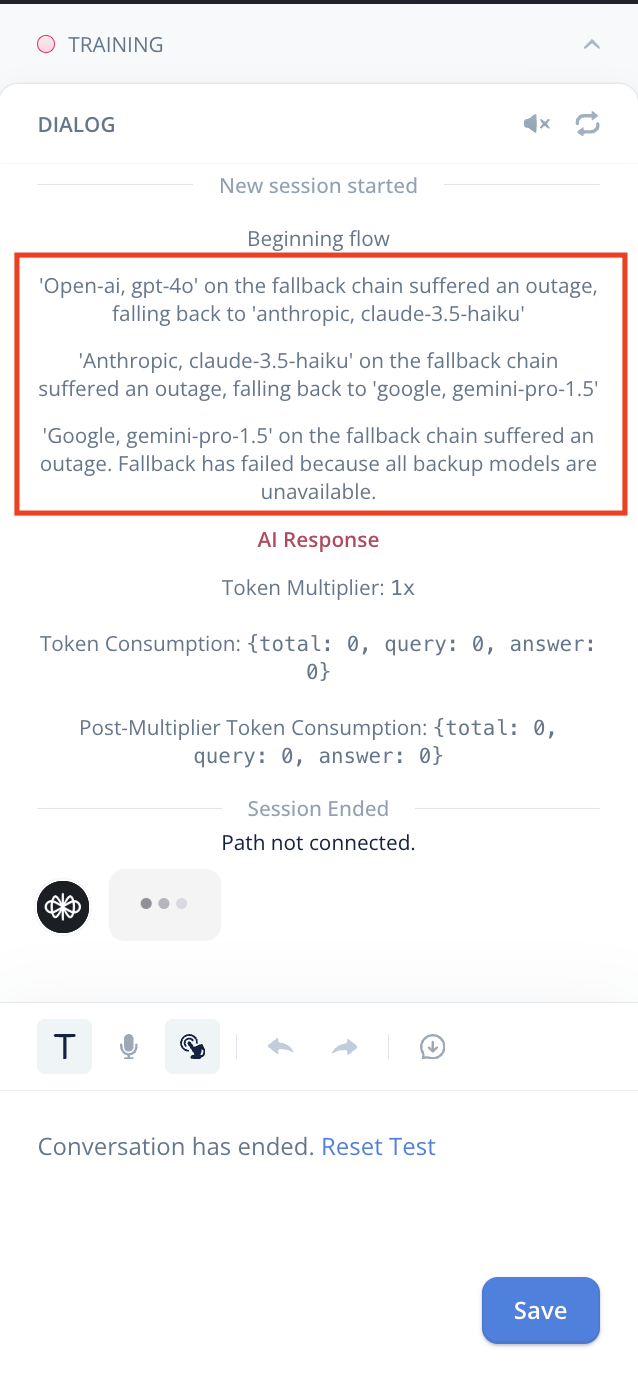

In the highly unlikely event that all supported 3rd-party LLM providers are suffering simultaneous outages, cascading behaviour has the risk of putting the LLM fallback system in an infinite loop by following the fallback configuration indefinitely.

Fortunately, the LLM fallback system explicitly checks for this possibility and the fallback process will terminate if it detects that it is revisiting previously visited fallback models, preventing an infinite loop. Concretely, if we again consider a Prompt step contacting GPT-4o and assume that all LLM providers are down, the fallback system behaves as follows:

- Detected that GPT-4o (OpenAI) is suffering an outage, try to fallback to Claude 3.5 - Haiku

- Detected that Claude 3.5 - Haiku (Anthropic) is suffering an outage, try to fallback to Gemini 1.5 Pro

- Detected that Gemini 1.5 Pro (Google) is suffering an outage, try to fallback to GPT-3.5 Turbo

- Detected that GPT-3.5 Turbo (OpenAI) is suffering an outage, try to fallback to Claude 3.5 - Haiku

- Claude 3.5 - Haiku was already visited before. An infinite loop has been detected and so we abort the fallback process.

In this worst-case scenario, fallback cannot help since there is nothing left to fallback to. The fallback system terminates and emits a debug trace indicating that an across-the-board outage has taken down the Voiceflow agent.

LLM fallback debug trace

For technical users of Voiceflow, if you are directly calling the Voiceflow Dialog Manager API, then the LLM fallback system will emit a debug trace that indicates when each fallback that occurs. For example, when the LLM falls back from GPT-4o to Claude 3.5 - Haiku, the following debug trace is emitted:

{

"time": 1739902968927,

"type": "debug",

"payload": {

"message": "'open-ai, gpt-4o' on the fallback chain suffered an outage, falling back to 'anthropic, claude-3.5-haiku'"

}

}A full example response body from the DM API, which includes LLM fallback debug traces, is shown below:

[

{

"time": 1739902965684,

"type": "debug",

"payload": {

"type": "start",

"message": "beginning flow"

}

},

{

"time": 1739902968927,

"type": "debug",

"payload": {

"message": "'open-ai, gpt-4o' on the fallback chain suffered an outage, falling back to 'anthropic, claude-3.5-haiku'"

}

},

{

"time": 1739902968927,

"type": "debug",

"payload": {

"message": "'anthropic, claude-3.5-haiku' on the fallback chain suffered an outage, falling back to 'google, gemini-pro-1.5'"

}

},

{

"time": 1739902968927,

"type": "debug",

"payload": {

"message": "__AI Response__<br />\nModel: `gemini-pro-1.5`<br />\nToken Multiplier: `3.5x`<br />\nToken Consumption: `{total: 82, query: 42, answer: 40}`<br />\nPost-Multiplier Token Consumption: `{total: 284, query: 144, answer: 140}`<br />"

}

},

{

"time": 1739902968928,

"type": "debug",

"payload": {

"type": "ai-response-parameters-model",

"message": "AI Response Parameters (Model): `user input (if any): undefined, LLM prompt: , output: 1. Lululemon Athletica Inc. - Vancouver, British Columbia\n2. Canada Goose Holdings Inc. - Toronto, Ontario\n3. Aritzia LP - Vancouver, British Columbia \n `"

},

"paths": [

{

"event": {

"type": "ai-response-parameters-model",

"payload": {

"system": "",

"assistant": "",

"output": "1. Lululemon Athletica Inc. - Vancouver, British Columbia\n2. Canada Goose Holdings Inc. - Toronto, Ontario\n3. Aritzia LP - Vancouver, British Columbia \n",

"model": "gemini-pro-1.5",

"temperature": 0.3,

"maxTokens": 256,

"queryTokens": 144,

"answerTokens": 140,

"tokens": 284,

"multiplier": 3.5

}

}

}

]

},

{

"time": 1739902968927,

"type": "text",

"payload": {

"slate": {

"id": "xr60oeg",

"content": [

{

"children": [

{

"text": "1. Lululemon Athletica Inc. - Vancouver, British Columbia"

}

]

},

{

"children": [

{

"text": "2. Canada Goose Holdings Inc. - Toronto, Ontario"

}

]

},

{

"children": [

{

"text": "3. Aritzia LP - Vancouver, British Columbia"

}

]

}

],

"messageDelayMilliseconds": 500

},

"delay": 500,

"message": "1. Lululemon Athletica Inc. - Vancouver, British Columbia\n2. Canada Goose Holdings Inc. - Toronto, Ontario\n3. Aritzia LP - Vancouver, British Columbia",

"ai": true,

"voice": "elevenlabs:eleven_flash_v2_5:FGY2WhTYpPnrIDTdsKH5"

}

},

{

"time": 1739902968929,

"type": "end"

}

]Fallback Coverage

Currently, LLM fallback covers the Prompt step and Generative No Match feature.

Future improvements will extend the coverage of fallback to internal Voiceflow LLM calls, such as entity extraction.

Definition of outage

The LLM fallback system includes an outage detection system (ODS) which checks LLM responses for possible outage-related failure. The responses to LLM requests sent by all Voiceflow agents are inspected this way (regardless if they have a paid plan with access to LLM fallback) and this data is aggregated to flag potential outages on an LLM.

The ODS defines an outage as an issue that disrupts the availability of a Voiceflow agent, which is caused by Voiceflow or 3rd-party LLM providers (OpenAI, Anthropic, Google, etc). An outage excludes anything that would be considered a user error, that is, a mistake caused by a Voiceflow user that causes their agent to behave incorrectly. Additionally, this definition excludes issues which are caused by non-LLM 3rd-party providers (e.g. AWS downtime).

Updated 4 months ago