Configuring voice settings

Overview

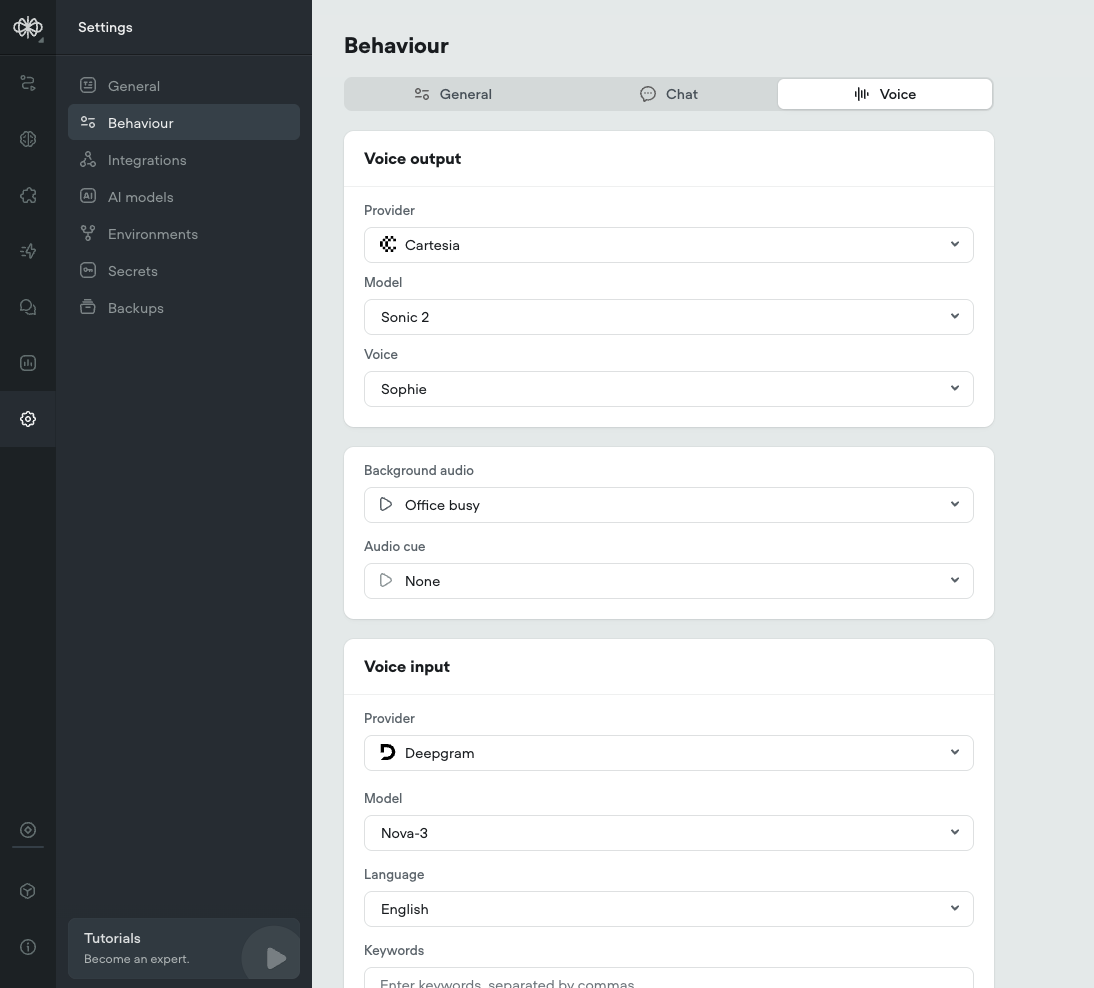

The Voice settings let you fine-tune how your voice agents behave during calls. By adjusting parameters such as silence timeouts, audio cues, and STT/TTS settings, you can make conversations feel more natural and improve the caller experience. This guide walks through the available options and how to configure them for your voice agent.

Getting Started

To access the Voice behavior settings for your agent:

- Navigate to your project.

- Go to Settings > Behavior.

- Select the Voice tab.

You'll see several settings that control different aspects of the voice interaction.

Key Concepts

- Voice Output: Also referred to as Text-to-Speech (TTS). Determines the voice and speech patterns of the agent speaking back to the user.

- Voice Input: Also referred to as Speech-to-Text (STT). Determines how we capture user speech for the agent to understand. See Voice Input.

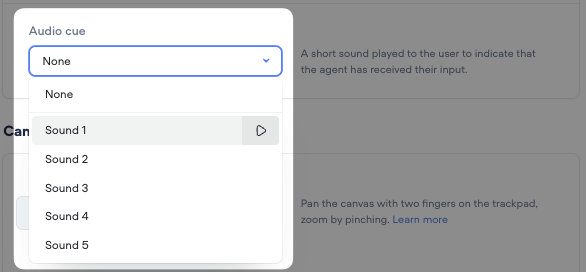

- Audio Cue: A short sound effect played to the caller to signal that the agent has received their input and is processing it.

- Background Audio: Simulated background audio that makes the call feel more realistic.

How To

Set an Audio Cue

- Navigate to the Audio Cue setting.

- Click the drop-down menu and select one of the preset sound effects. To preview a cue, select the play button next to it in the drop-down menu.

- Test the audio cue by calling your agent and listening for it after you finish speaking.

Select TTS Voice

- Navigate to Settings > Behavior > Voice.

- Choose your TTS provider (ElevenLabs, Rime, Google, Amazon, Microsoft) from the top tab selector. Pricing varies by provider.

- For each provider, press the play button to preview a voice, then click the voice name to select it.

- Test the voice by calling your agent and listening to its responses.

Explore the variety of TTS voices to find one that callers find natural and engaging to interact with. Don't be afraid to experiment with different options.

Troubleshooting

Agent is Interrupting Caller

- Adjust the Voice Input settings, such as silence timeouts, to give callers more time to start and complete their statements.

- Make sure your agent prompts are worded clearly to elicit succinct responses from callers. Open-ended or ambiguous prompts may encourage rambling.
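
To see why a longer silence timeout reduces interruptions, the following is a minimal illustrative sketch in Python. It is not the platform's implementation: the function name `end_of_turn_ms`, the 100 ms frame size, and the timeout values are all hypothetical, chosen only to show the general idea of ending a caller's turn after a fixed stretch of silence.

```python
from typing import Optional, Sequence


def end_of_turn_ms(
    speech_frames: Sequence[bool],
    silence_timeout_ms: int,
    frame_ms: int = 100,  # hypothetical frame size, for illustration only
) -> Optional[int]:
    """Return the time (in ms) at which the agent would take its turn.

    speech_frames[i] is True if the caller is speaking during frame i.
    The turn ends once the caller has spoken at least once and then
    stays silent for silence_timeout_ms. Returns None if that never happens.
    """
    heard_speech = False
    silent_ms = 0
    for i, is_speech in enumerate(speech_frames):
        if is_speech:
            heard_speech = True
            silent_ms = 0  # any speech resets the silence counter
        elif heard_speech:
            silent_ms += frame_ms
            if silent_ms >= silence_timeout_ms:
                return (i + 1) * frame_ms
    return None


# A caller speaks for 500 ms, pauses to think for 800 ms,
# speaks for another 500 ms, then stays silent.
call = [True] * 5 + [False] * 8 + [True] * 5 + [False] * 20

short_timeout = end_of_turn_ms(call, silence_timeout_ms=500)
long_timeout = end_of_turn_ms(call, silence_timeout_ms=1200)

print(short_timeout)  # 1000: the agent responds during the caller's pause
print(long_timeout)   # 3000: the agent waits until the caller has finished
```

In this sketch the caller resumes speaking at 1300 ms and finishes at 1800 ms. With the shorter timeout the agent takes its turn at 1000 ms, in the middle of the pause; with the longer one it waits until well after the caller is done. The trade-off is that a longer timeout also adds delay before every response.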
