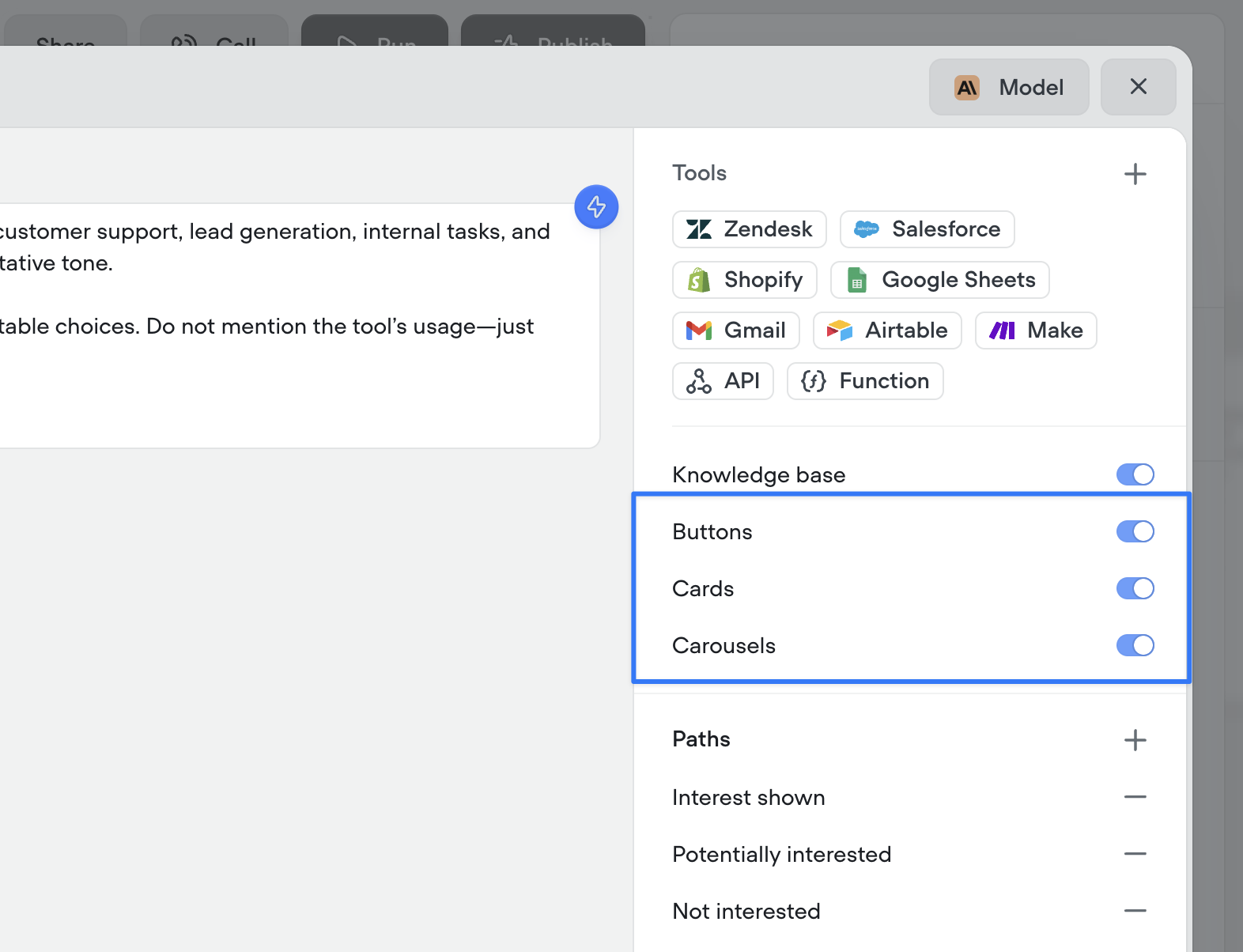

Once you enable these options and provide guidance on when to use (or avoid) each special tool, your agent will intelligently enhance interactions with visual elements such as buttons, cards, and carousels.

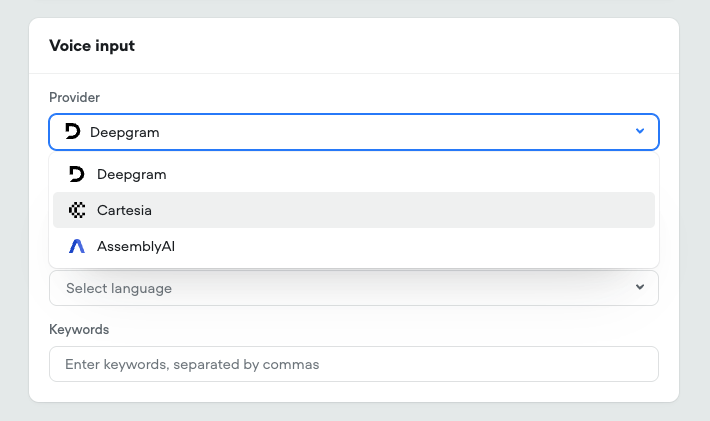

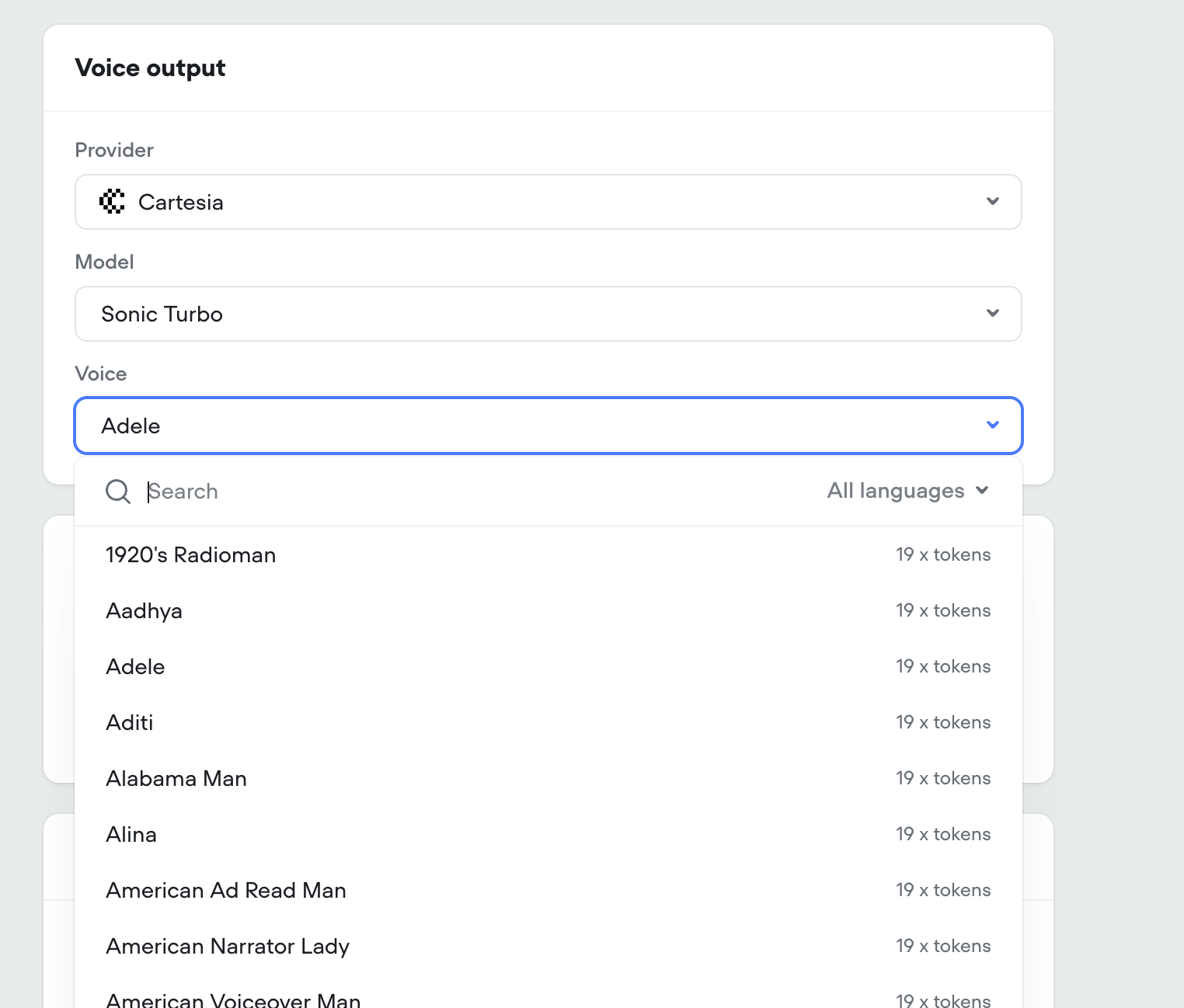

Note: these configurations are ignored during phone-based conversations, so they will not prevent you from creating multi-modal AI agents with Voiceflow.