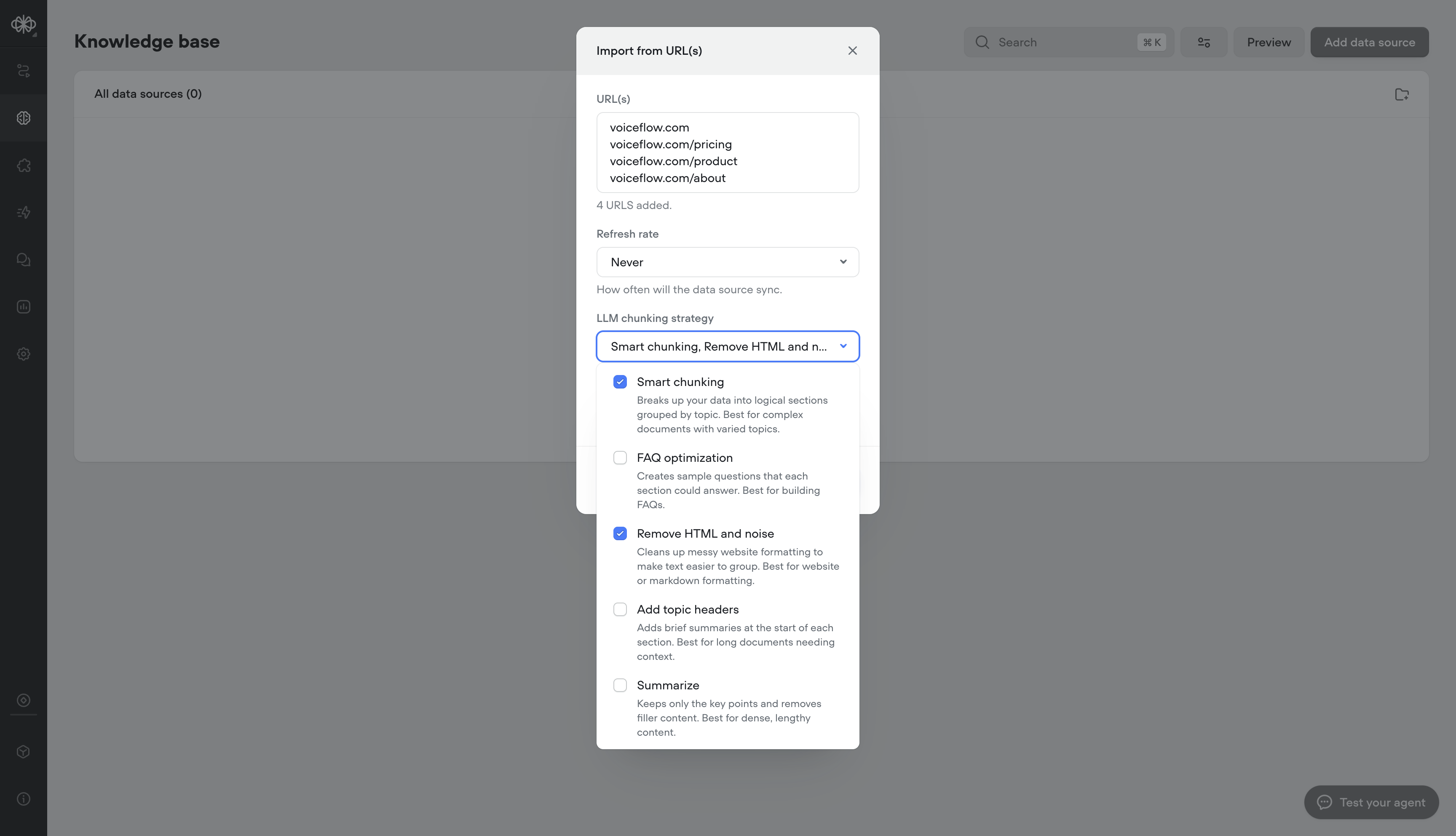

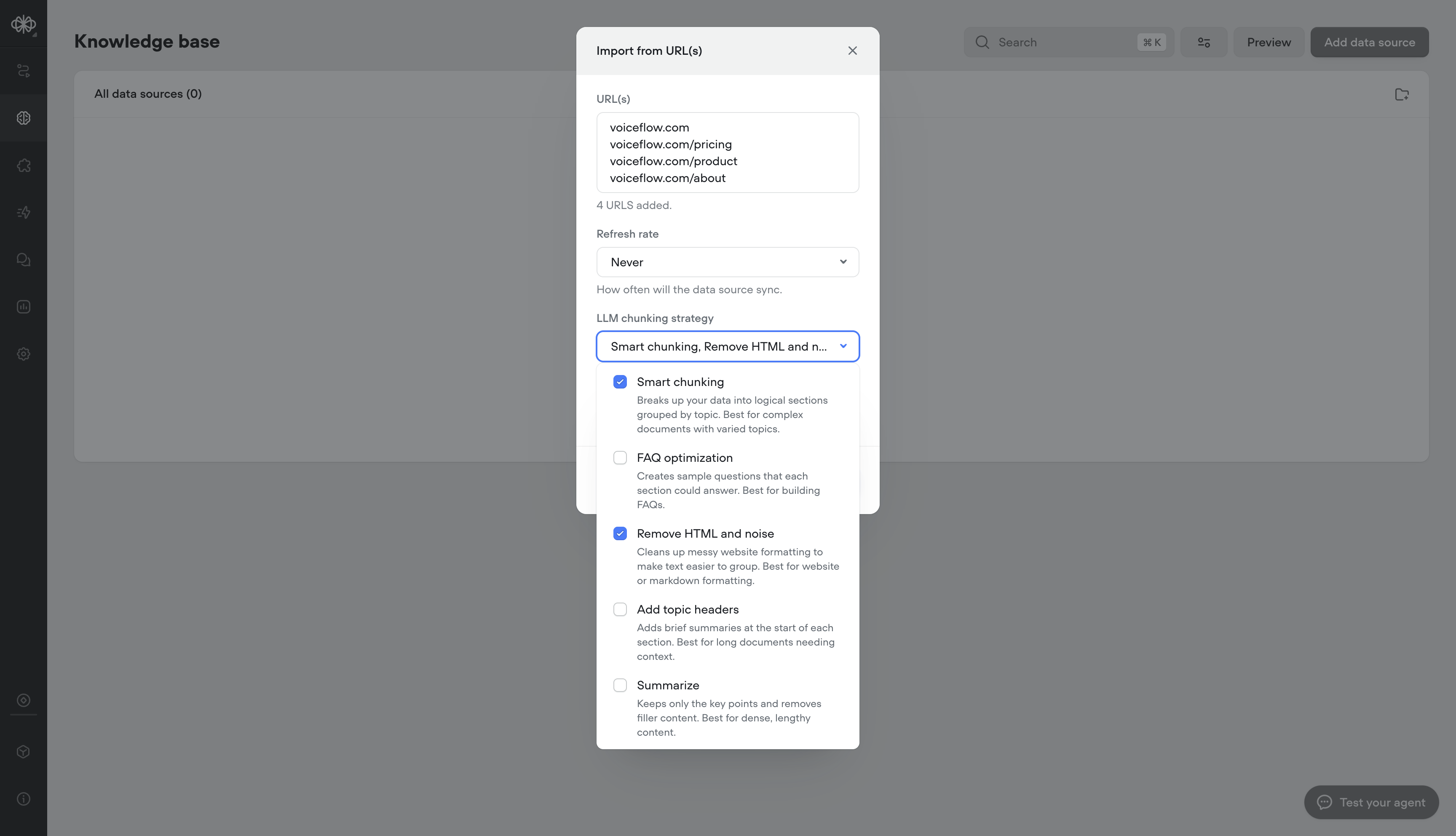

Your Knowledge Base just got a major upgrade. With our new LLM chunking strategies, you can now prep your data for AI like a pro, with no manual formatting needed.

We’ve introduced five powerful strategies to help you structure and optimize your content for maximum retrieval performance:

🧠 Smart chunking

Automatically breaks content into logical, topic-based sections. Ideal for complex documents with multiple subjects.

❓ FAQ optimization

Generates sample questions for each section. Perfect for creating high-impact FAQs.

🧹 HTML & noise removal

Cleans up messy website markup and boilerplate. Best used on content pulled from web pages or Markdown files.

📝 Add topic headers

Inserts short, helpful summaries above each section. Great for long-form content that needs context.

🔍 Summarize

Distills each section to its key points, removing fluff. Perfect for dense reports or research.

These chunking strategies help you get more accurate, more relevant answers from your AI, especially from data sources that weren’t originally built for Retrieval-Augmented Generation (RAG).

Ready to make your Knowledge Base smarter? Try out a few LLM chunking strategies and let the results speak for themselves.

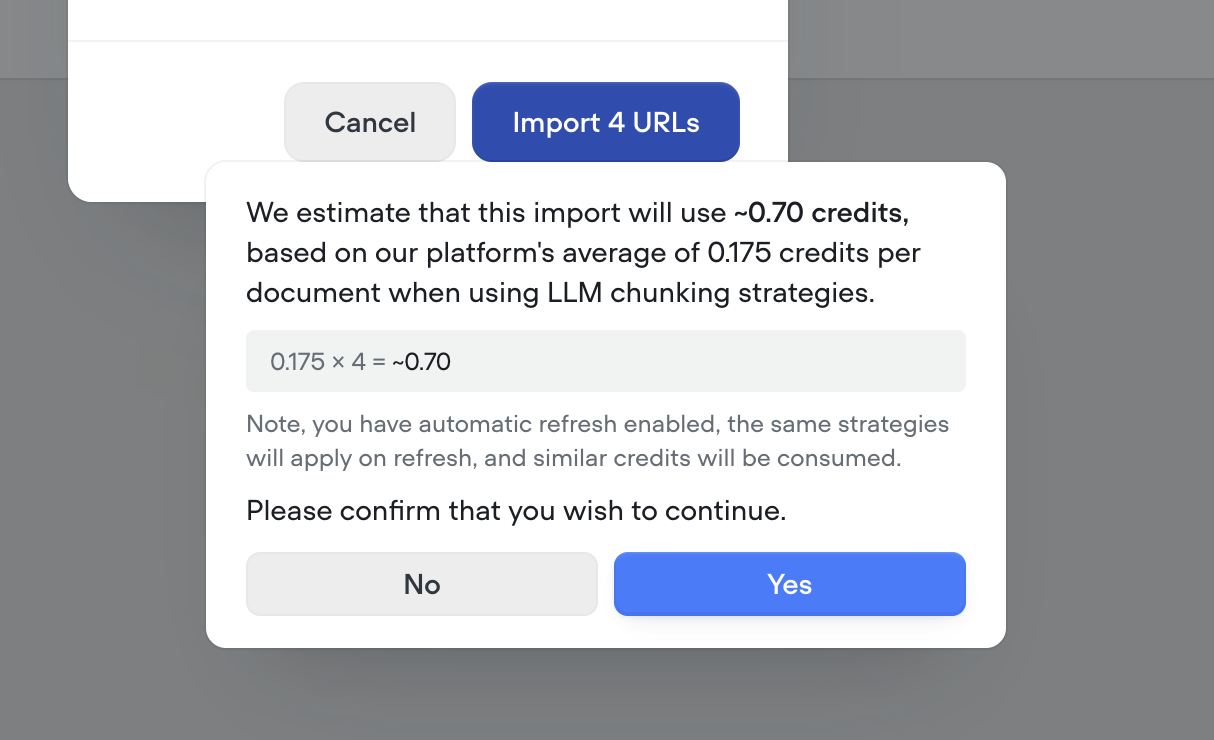

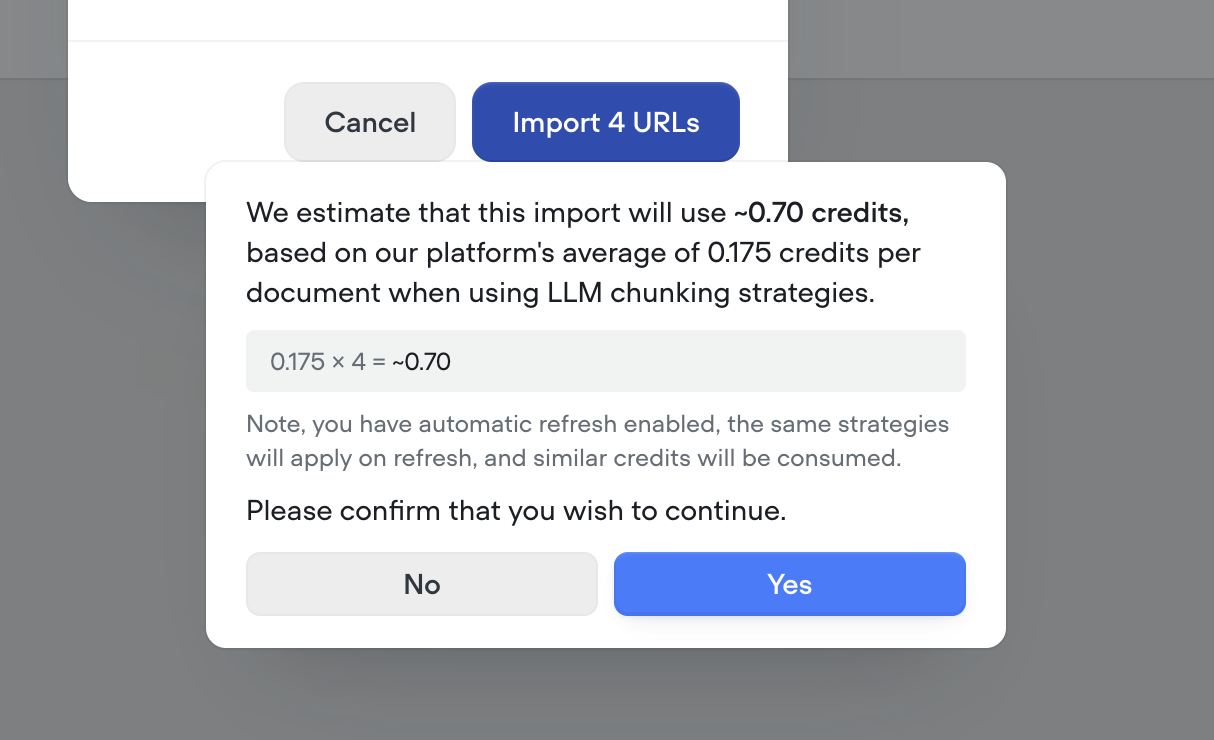

Note: LLM chunking strategies use credits. Before processing, we’ll show you a clear estimate of how many credits will be used, so you’re always in control.