Added

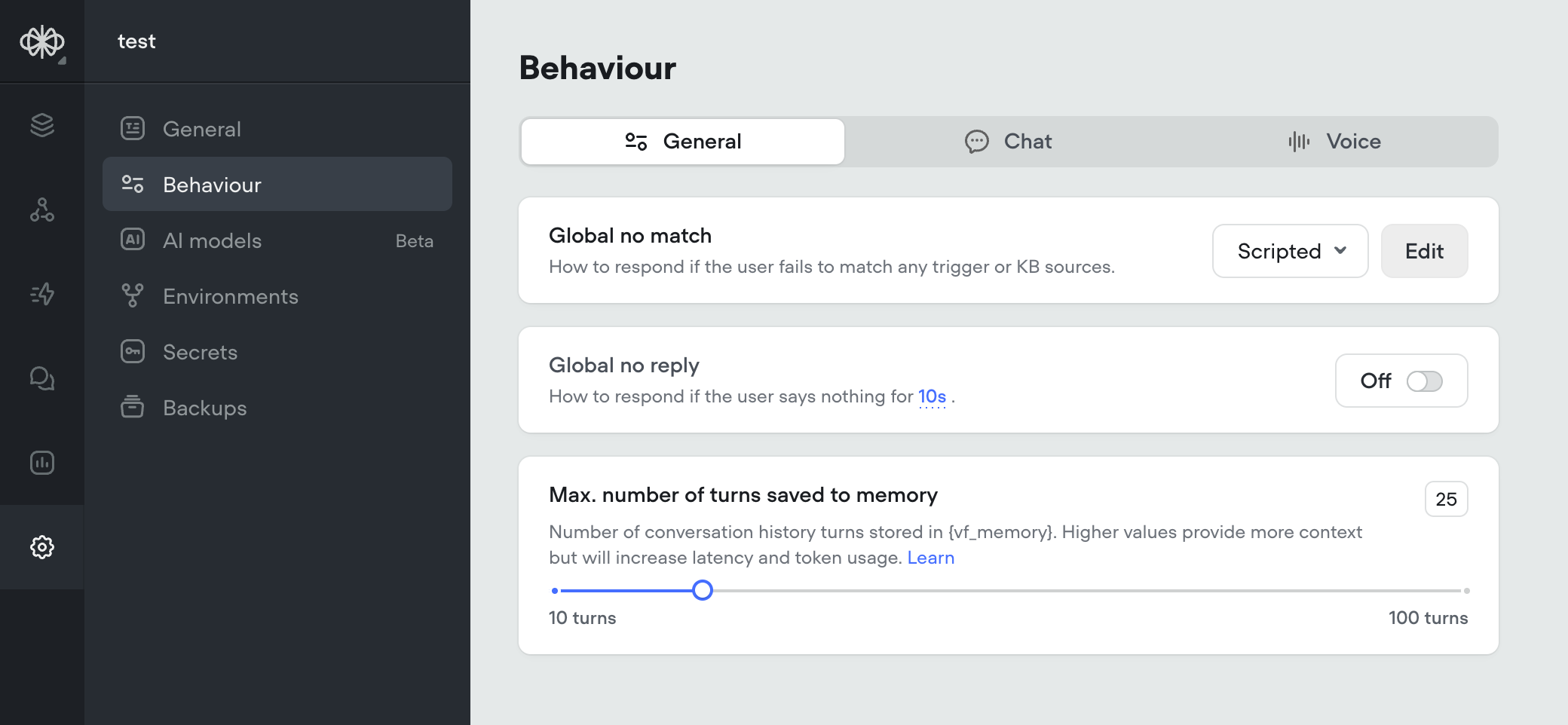

Max Memory Turns Setting

March 31st, 2025 by Tyler Han

Conversation memory is a critical component of the Agent and Prompt steps. Longer memory gives the LLM more context about the conversation so far, which helps it make better decisions based on previous dialog.

However, there is a trade-off: larger memory adds latency and consumes more input tokens, which increases cost.

Previously, memory was fixed at 10 turns. All new projects now default to 25 turns, and this value can be adjusted in the settings, up to a maximum of 100 turns.
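To illustrate what a max-turns limit means in practice, here is a minimal sketch of a sliding memory window that keeps only the most recent turns. It is not Voiceflow's implementation: the `trim_memory` function, the message-dict shape, treating each message as one turn, and the lower bound of 1 are all hypothetical assumptions; only the default of 25 and the cap of 100 come from this note.

```python
from typing import Dict, List

DEFAULT_MAX_TURNS = 25   # default for new projects, per this changelog
MAX_ALLOWED_TURNS = 100  # upper limit configurable in settings, per this changelog


def trim_memory(history: List[Dict[str, str]], max_turns: int = DEFAULT_MAX_TURNS) -> List[Dict[str, str]]:
    """Hypothetical helper: return only the most recent `max_turns` entries.

    Assumes each dict in `history` is one turn; the lower bound of 1 is an
    assumption for this sketch, not a documented Voiceflow limit.
    """
    if not 1 <= max_turns <= MAX_ALLOWED_TURNS:
        raise ValueError(f"max_turns must be between 1 and {MAX_ALLOWED_TURNS}")
    # Slicing from the end keeps the newest turns; shorter histories are returned whole.
    return history[-max_turns:]


if __name__ == "__main__":
    history = [{"role": "user", "content": f"message {i}"} for i in range(40)]
    window = trim_memory(history)
    print(len(window), window[0]["content"])
```

With 40 messages and the default setting, only the last 25 would be sent to the model. Raising the limit toward 100 gives the LLM more context but, as noted above, increases the input tokens (and therefore latency and cost) of every request.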

For more information on how memory works, see: https://docs.voiceflow.com/docs/memory