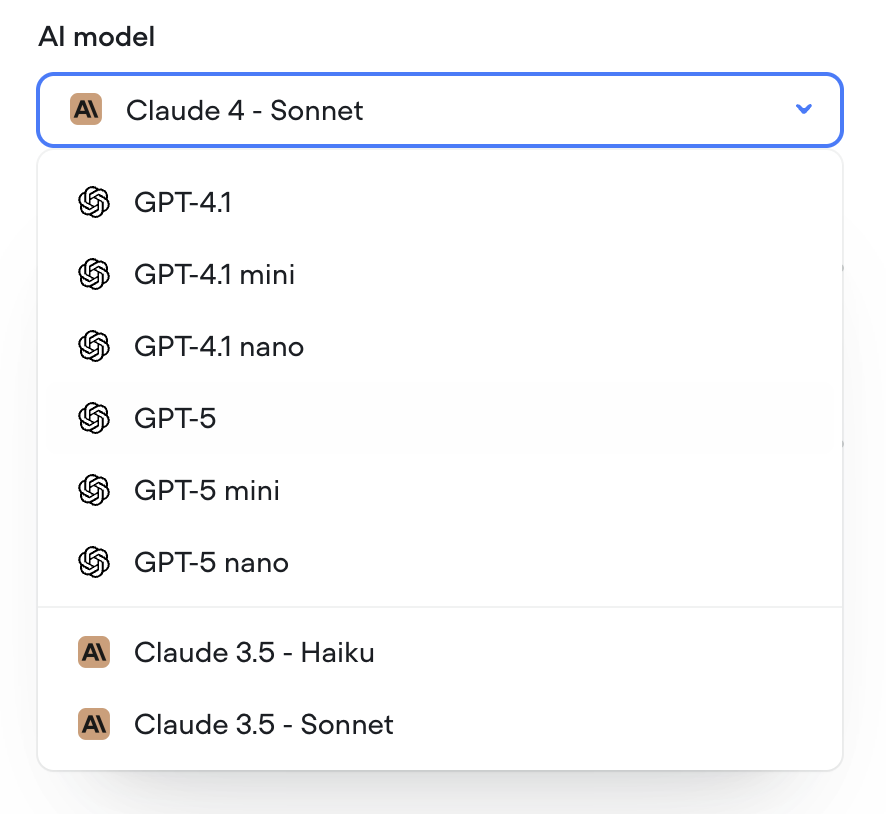

GPT-5 models

by Michael Hood

GPT-5 models are now available in Voiceflow.

You can now double-click an agent step to jump straight into its editor — saving yourself an extra click.

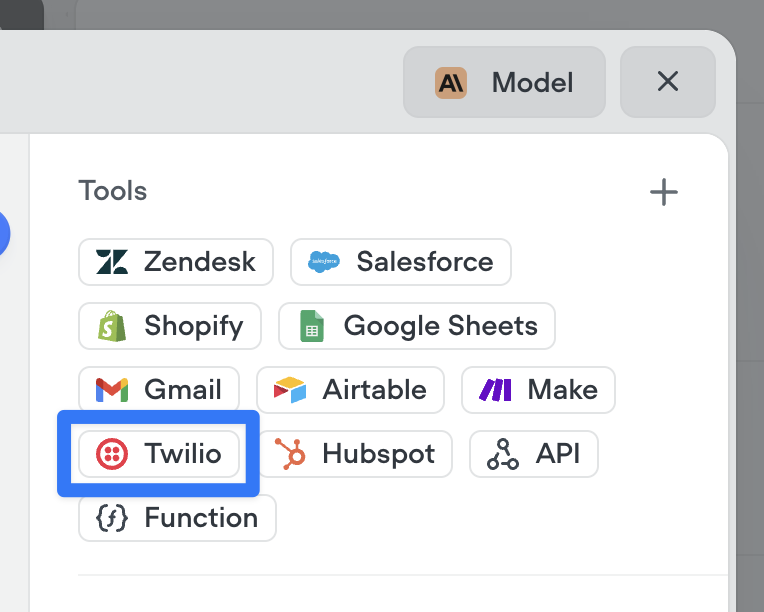

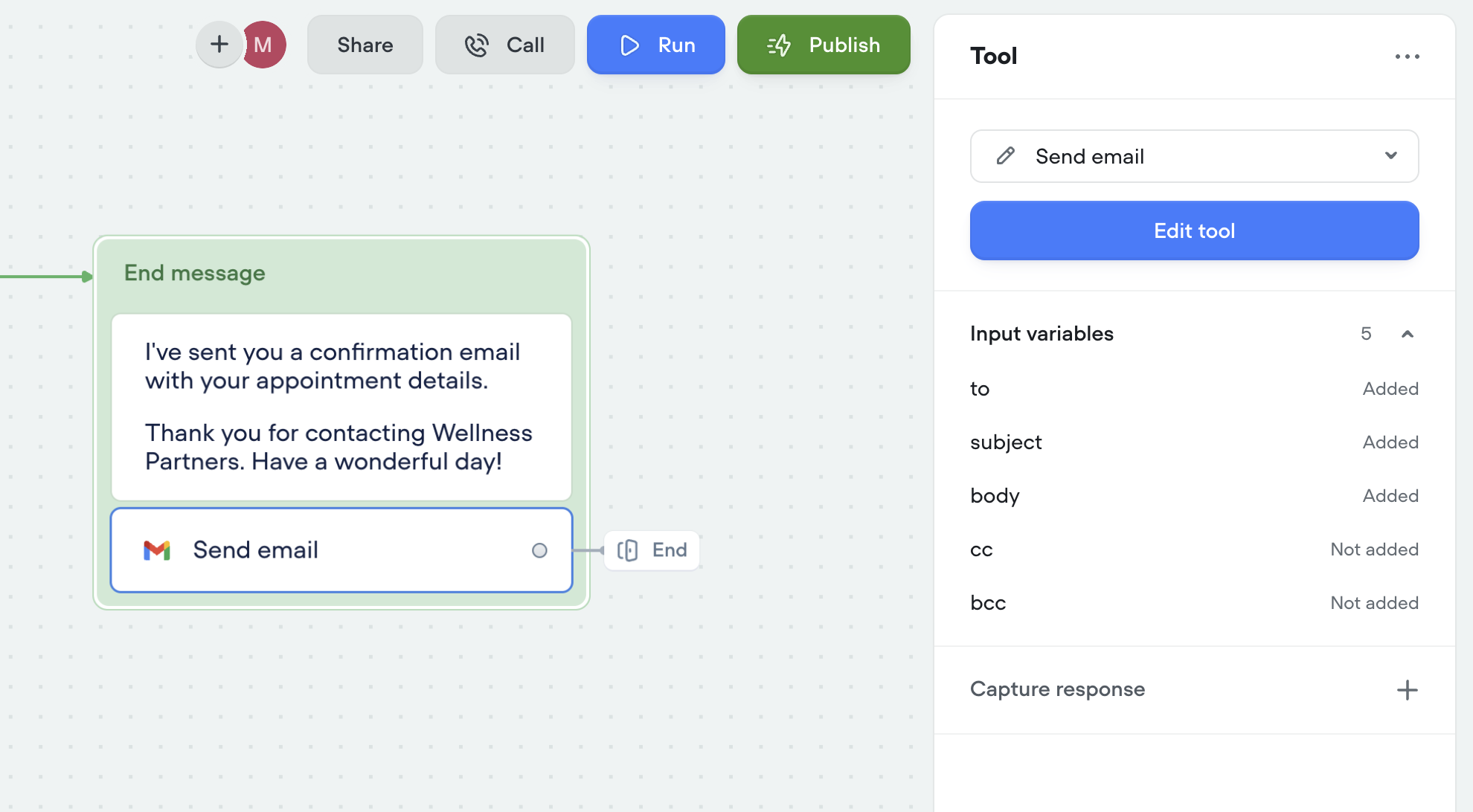

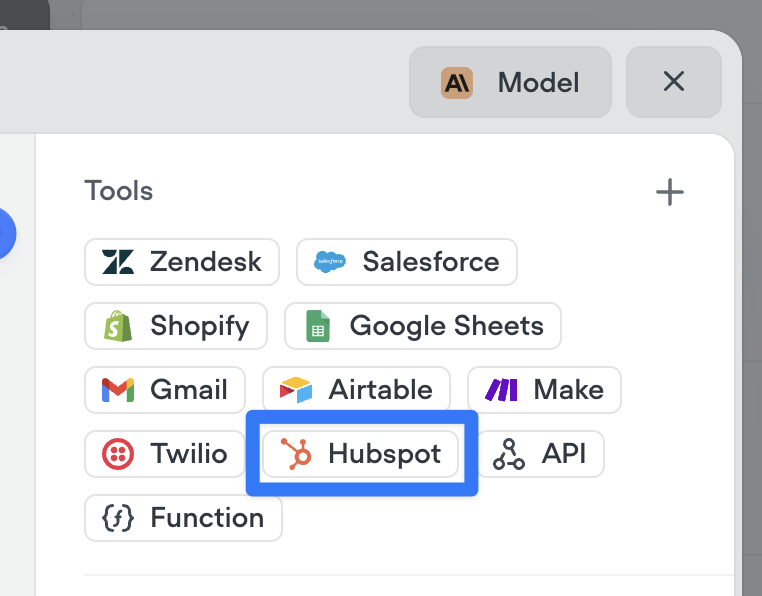

You can now run tools outside of the agent step using the new Tool Step.

This lets you trigger any tool in your agent — like sending an email or making an API call — anywhere in your workflows.

🛠️ You’ll find the Tool Step in the ‘Dev’ section of the step menu for now.

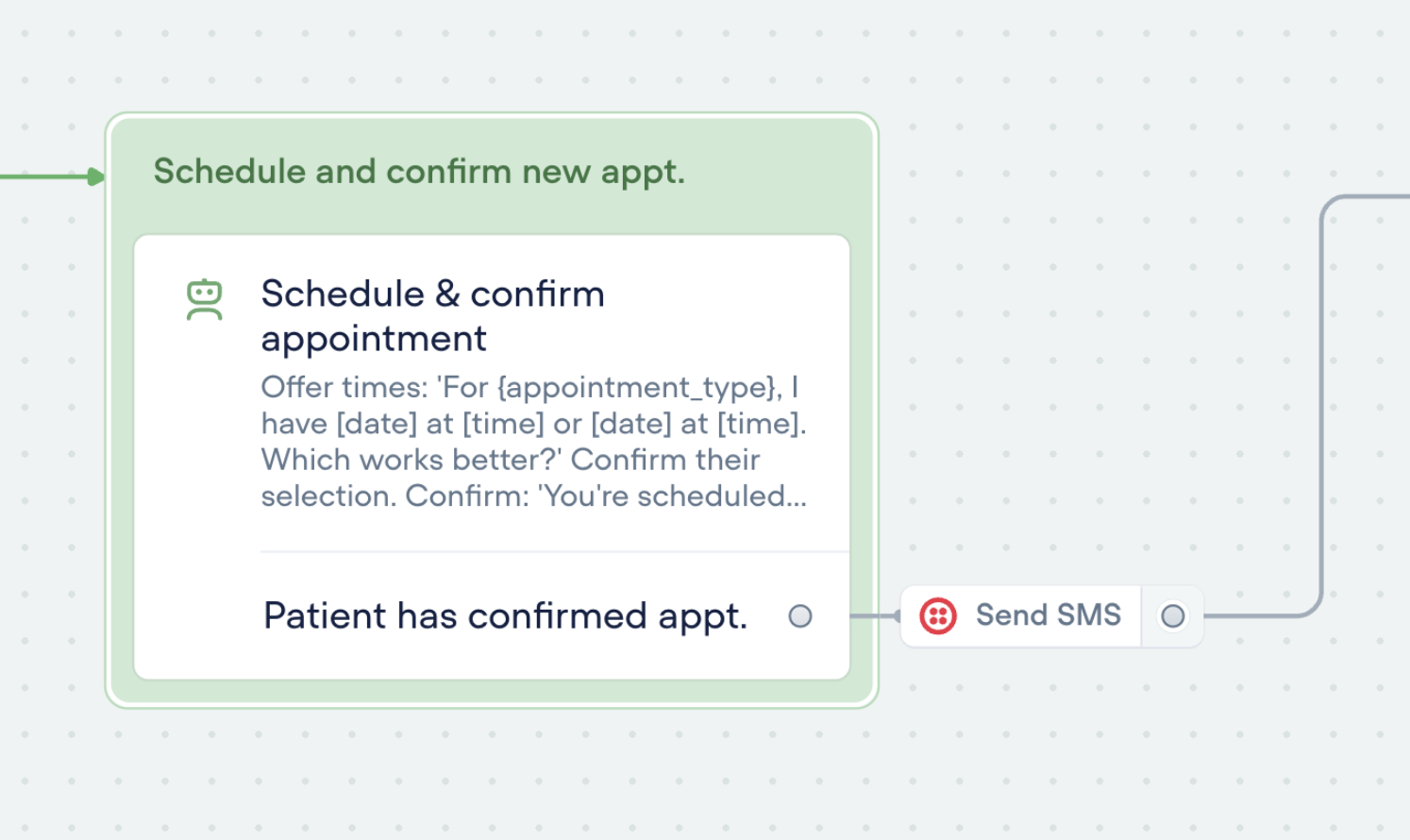

Tools can also be used as actions:

A few months ago, we released a new analytics view—giving you deeper insights into agent performance, tool usage, credit consumption, and more.

Today, we're releasing an updated Analytics API to match. This new version gives you programmatic access to the same powerful data, so you can:

- Track agent performance over time
- Monitor tool and credit usage
- Build custom dashboards and reports

Use the new API to integrate analytics directly into your workflows and get the insights you need—where you need them.
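As a rough illustration of the custom dashboards and reports use case, the sketch below rolls usage records up into per-day and per-tool credit totals. The record shape (`date`, `tool`, `credits`) and the sample values are assumptions made for this example, not the Analytics API's documented response format, so check the API reference for the real field names.

```python
from collections import defaultdict

# Hypothetical records, shaped for illustration only. The real Analytics API
# response fields may differ.
SAMPLE_RECORDS = [
    {"date": "2025-08-01", "tool": "send_email", "credits": 3},
    {"date": "2025-08-01", "tool": "api_call", "credits": 5},
    {"date": "2025-08-02", "tool": "send_email", "credits": 2},
]


def credits_per_day(records):
    """Sum credit consumption for each calendar day, ordered by date."""
    totals = defaultdict(int)
    for record in records:
        totals[record["date"]] += record["credits"]
    return dict(sorted(totals.items()))


def credits_per_tool(records):
    """Sum credit consumption for each tool name."""
    totals = defaultdict(int)
    for record in records:
        totals[record["tool"]] += record["credits"]
    return dict(totals)


if __name__ == "__main__":
    print(credits_per_day(SAMPLE_RECORDS))
    print(credits_per_tool(SAMPLE_RECORDS))
```

Keeping the aggregation separate from the fetch means the same functions can feed a dashboard, a scheduled report, or a spreadsheet export once the real response fields are mapped in.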

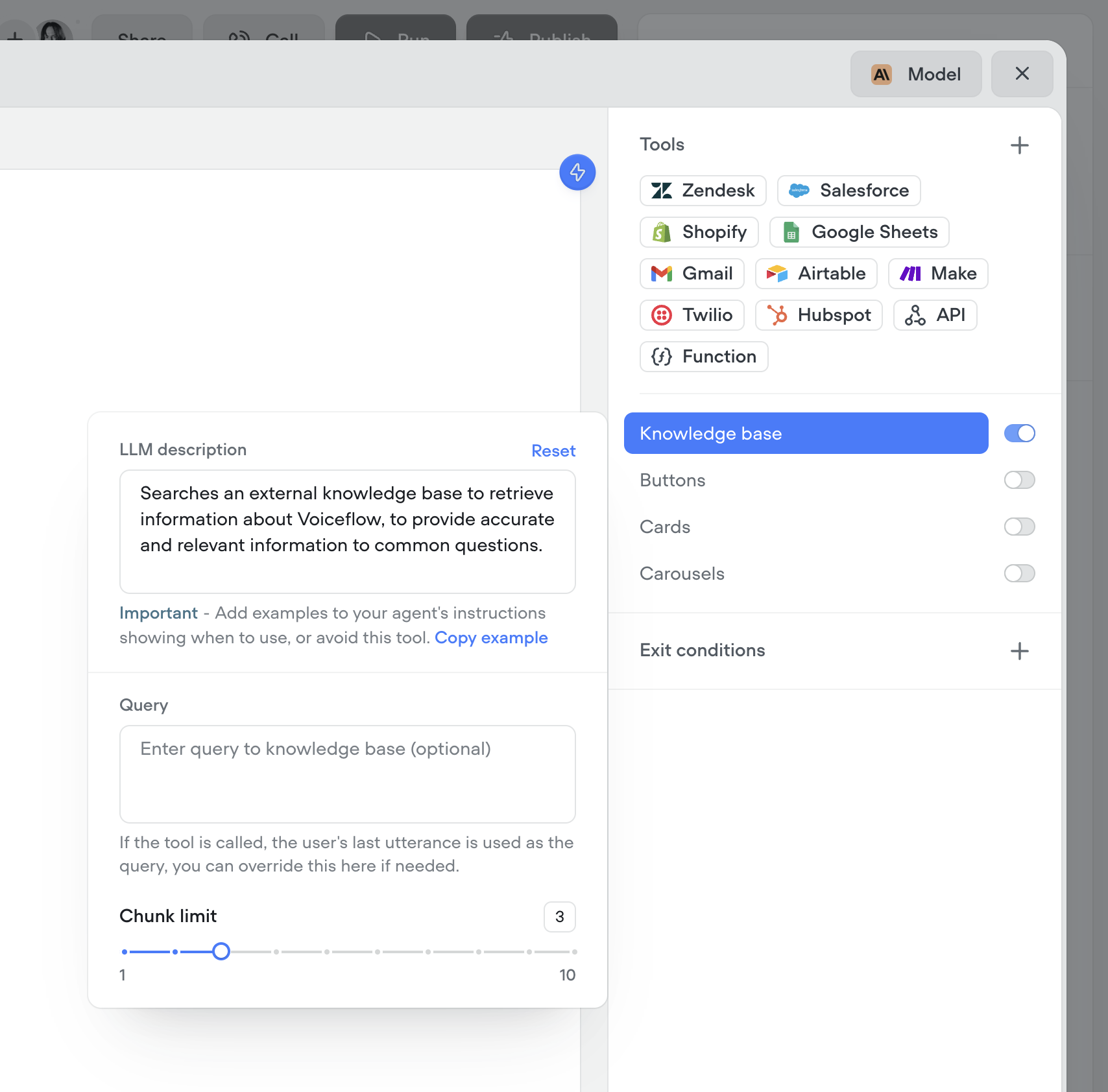

You now have more control over how your agents retrieve knowledge. Customize the query your agent uses to search the knowledge base, and fine-tune the chunk size limit to better match your content. This gives you more precision, better answers, and smarter agents.
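To make the effect of these two settings concrete, here is a minimal sketch of a retrieval step that ranks chunks against a query and keeps only the top few. It assumes the limit caps how many chunks are returned, which is an interpretation rather than something this changelog confirms. The word-overlap scoring and the names `score`, `retrieve`, and `chunk_limit` are hypothetical and are not Voiceflow's retrieval implementation.

```python
def score(query, chunk):
    """Toy relevance score: number of distinct query words found in the chunk."""
    query_words = set(query.lower().split())
    chunk_words = set(chunk.lower().split())
    return len(query_words & chunk_words)


def retrieve(query, chunks, chunk_limit):
    """Return up to chunk_limit matching chunks, highest score first."""
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    # Drop chunks that share no words with the query, then apply the limit.
    return [c for c in ranked if score(query, c) > 0][:chunk_limit]


# Hypothetical knowledge base content, for illustration only.
CHUNKS = [
    "Refunds are issued within 5 business days",
    "Our office is closed on public holidays",
    "Refunds require the original order number",
]

if __name__ == "__main__":
    # A more specific query surfaces the more specific chunk first.
    print(retrieve("refunds order number", CHUNKS, chunk_limit=1))
```

In this toy setup, a sharper query changes which chunk ranks first, while a lower limit trims how much context reaches the model.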

Your AI agents just got a massive upgrade:

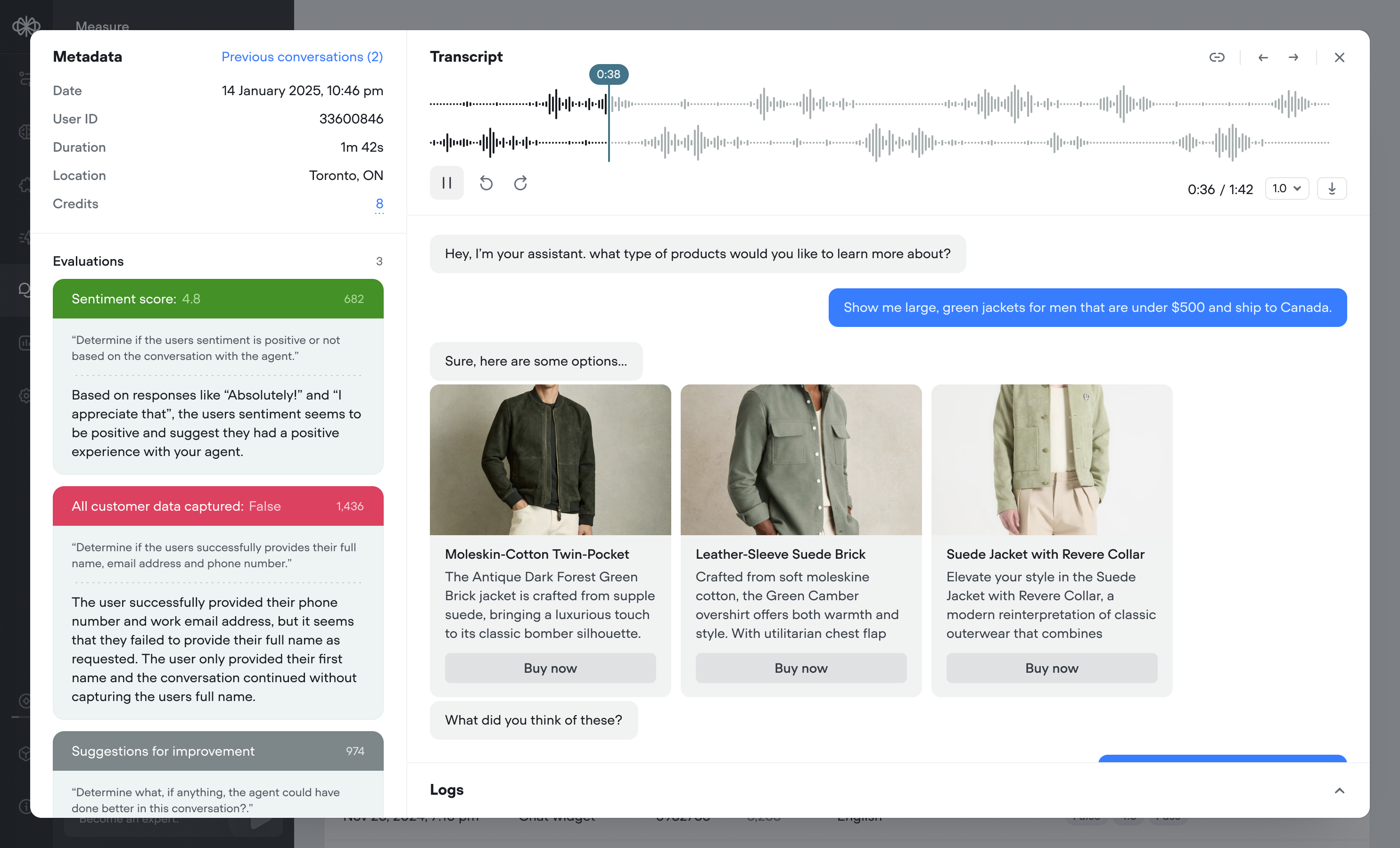

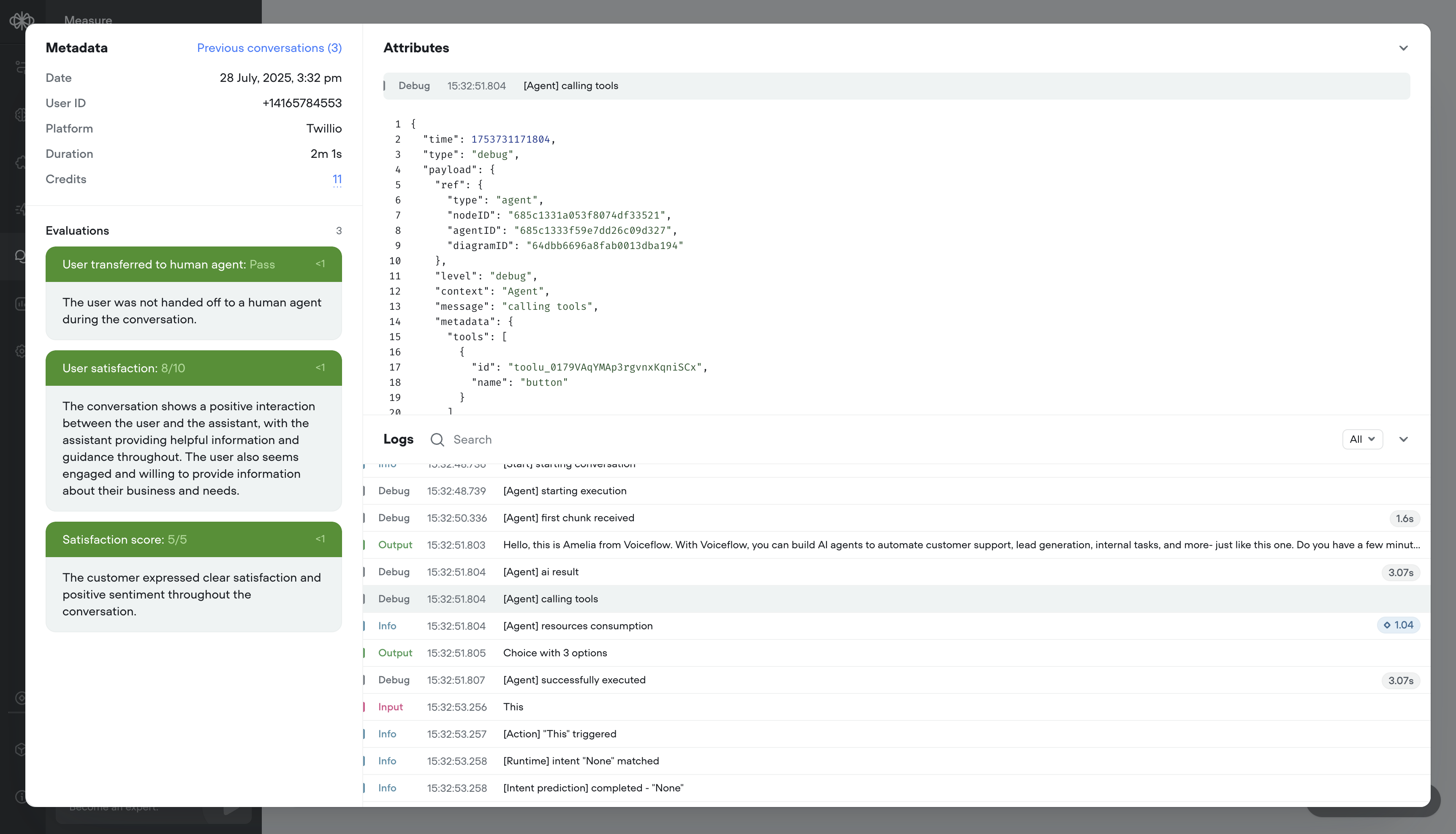

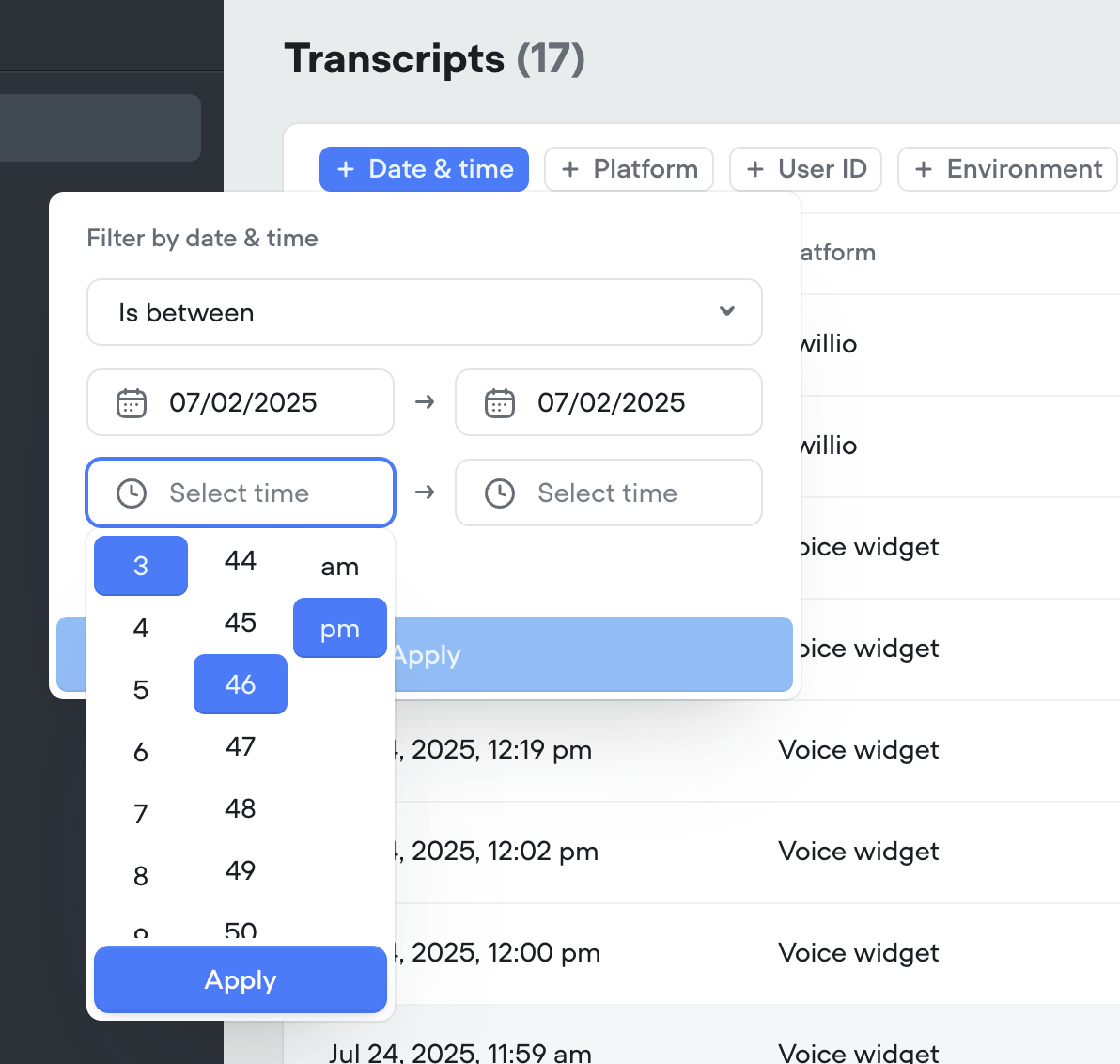

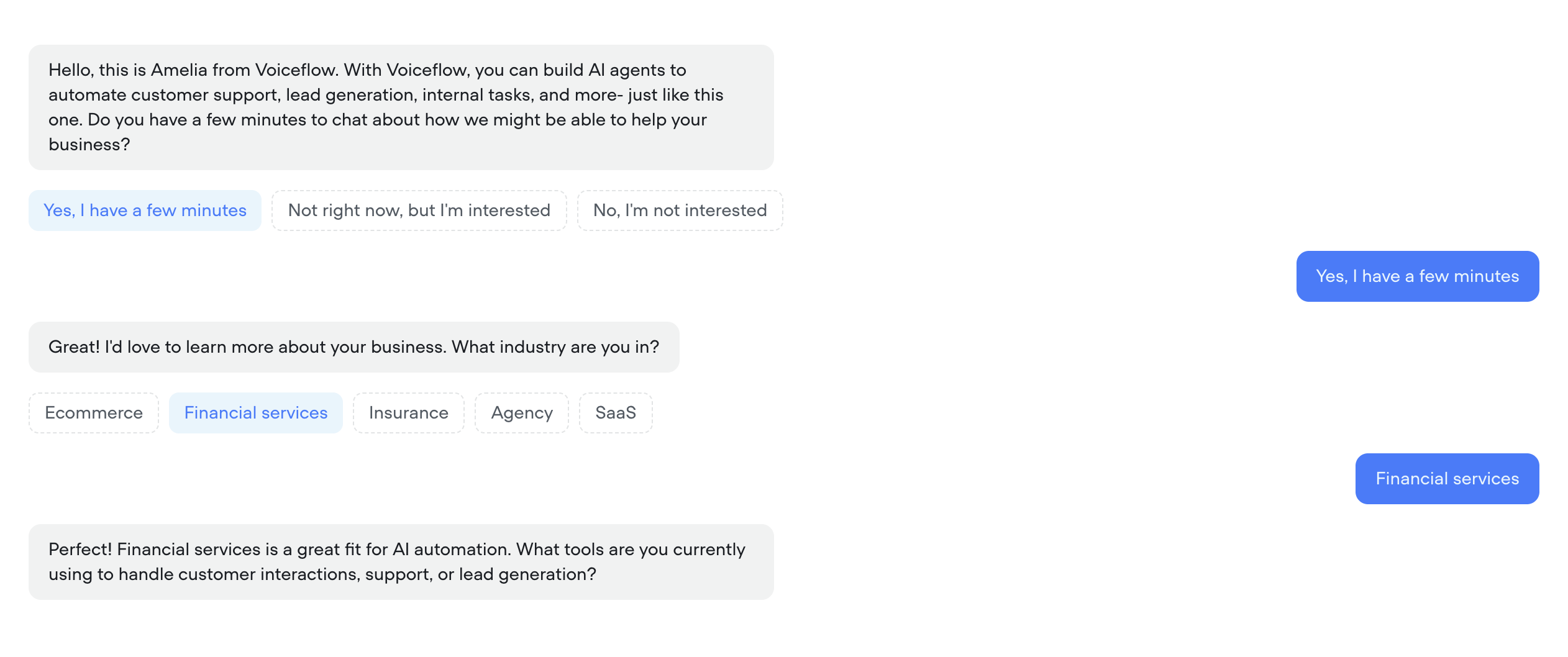

A full overhaul of the transcripts experience, built to help teams analyze, debug, and improve agents faster.

- Call recordings – Replay conversations to hear how your agent performs in the real world
- Robust debug logs – Trace agent decisions step-by-step
- Granular filtering – Slice data by time, user ID, evaluation result, and more
- Button click visualization – See exactly where users clicked in the conversation
- Cleaner UI – Faster load times, more usable data

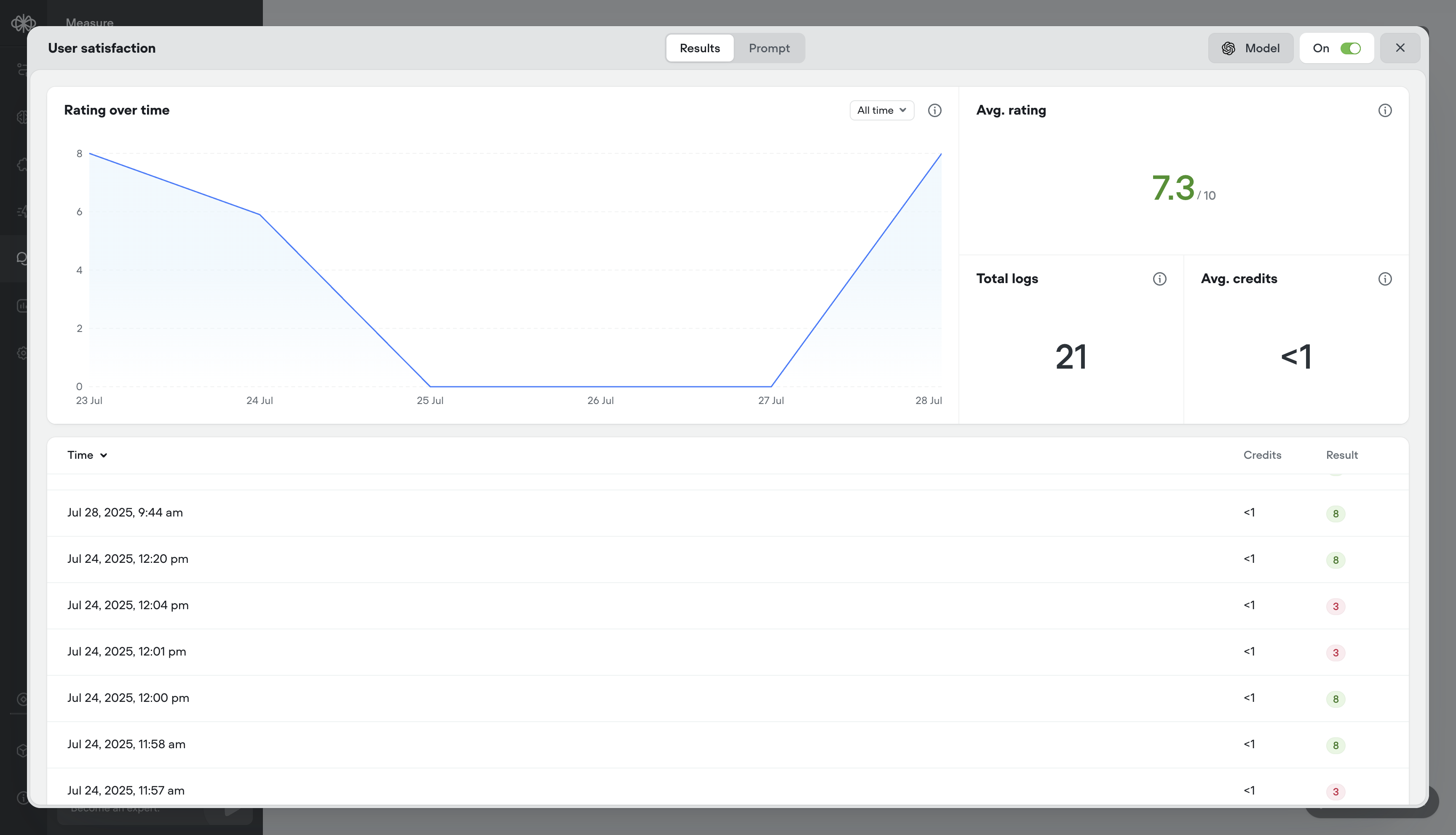

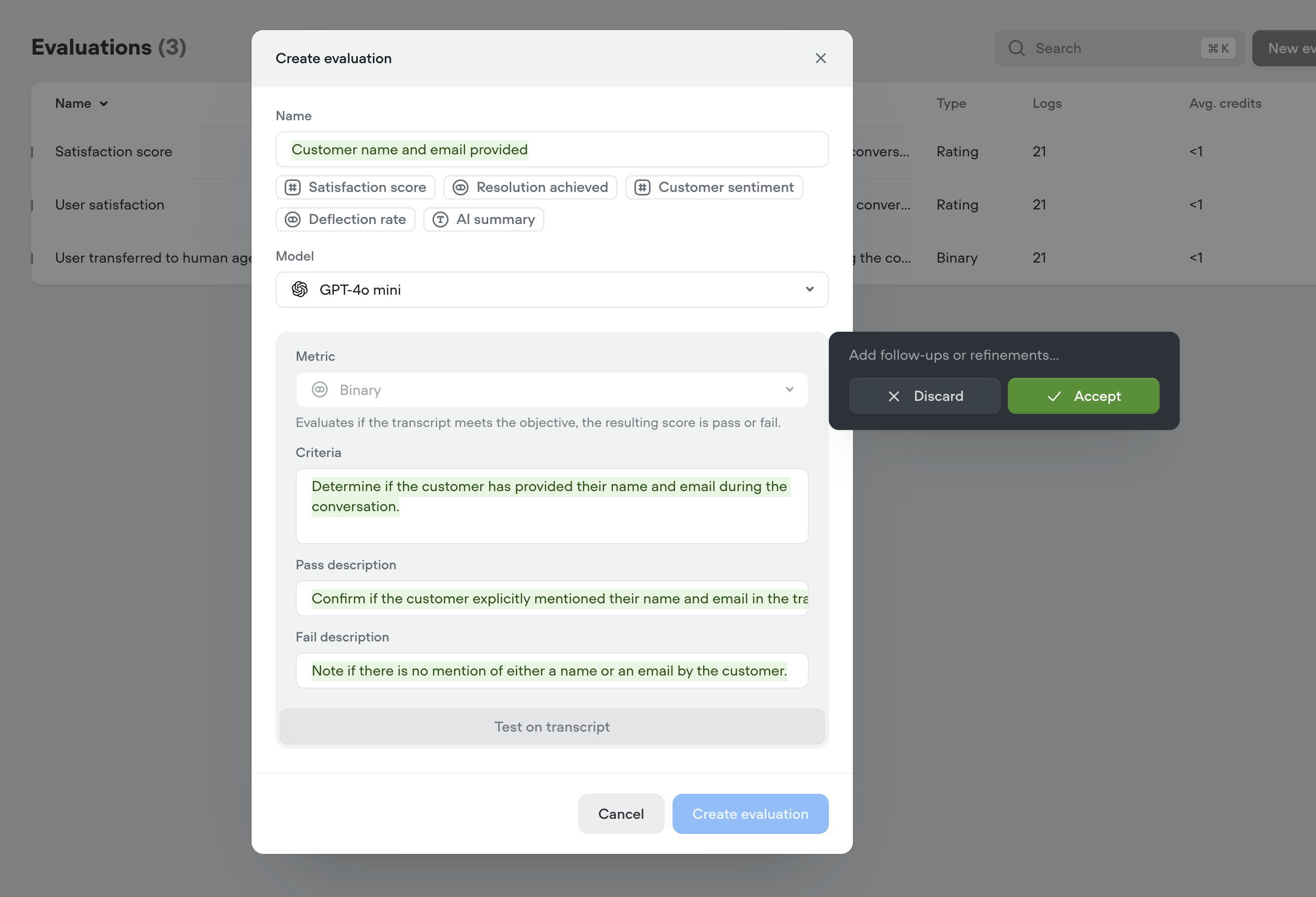

Define what “good” looks like — and measure it, your way. Build (or generate) your own evaluation criteria, tailor analysis to your business goals, and iterate with confidence.

Eval types – support for:

Also includes:

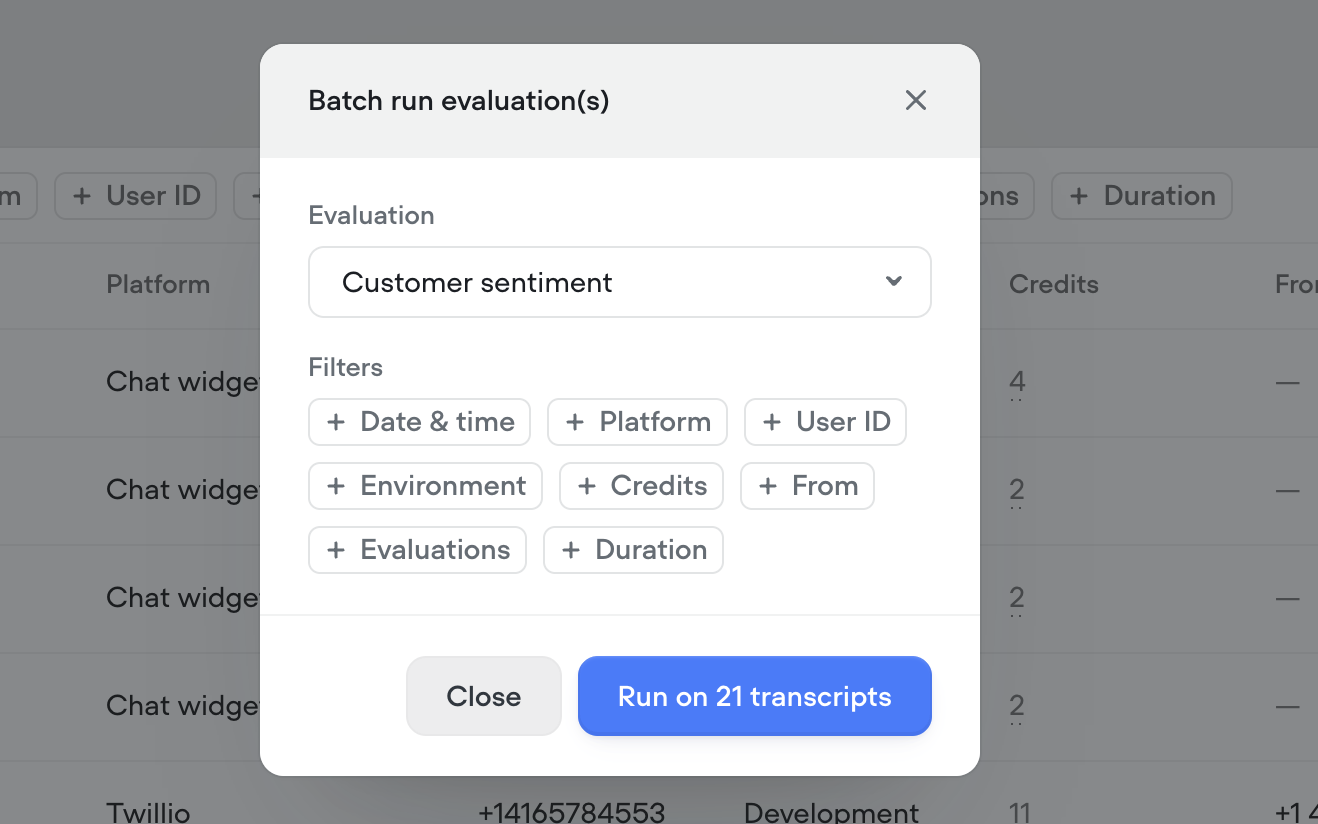

Batch or auto-run – Evaluate hundreds of transcripts in a few clicks, or automatically as they come in

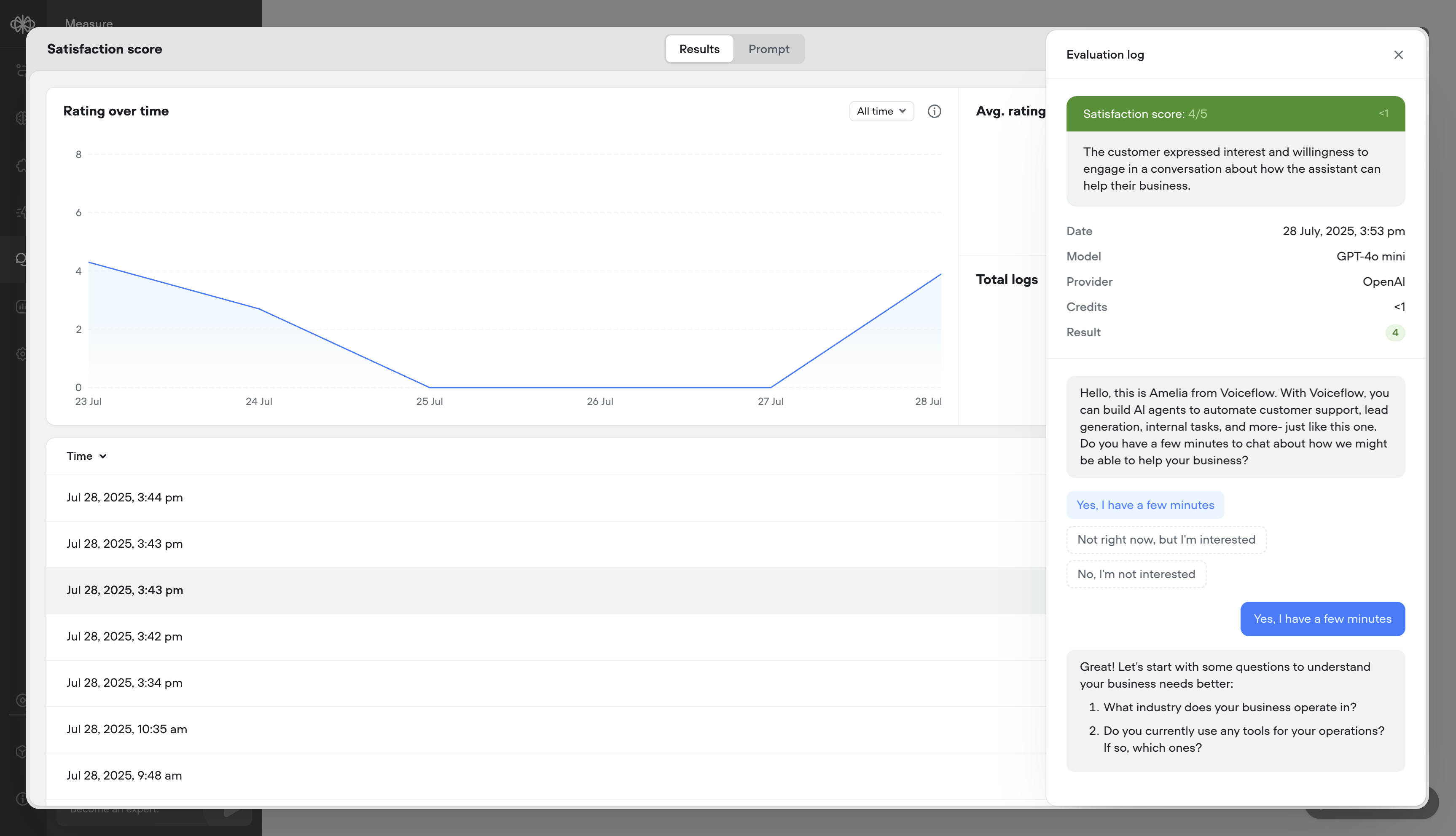

Analytics & logs – See detailed results per message or overall trends over time

🕓 Transition Period:

Until September 28, 2025, all transcripts for existing projects will remain available in the legacy view. On September 28, 2025, the old view will be hidden and the new transcripts view will become the default. Transcripts older than 60 days will still be accessible via API for the foreseeable future.

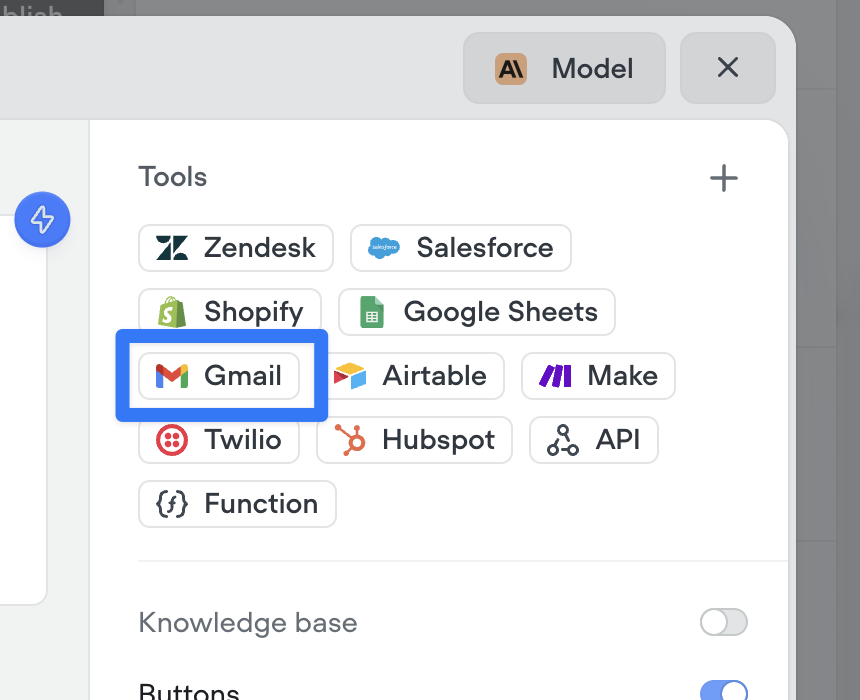

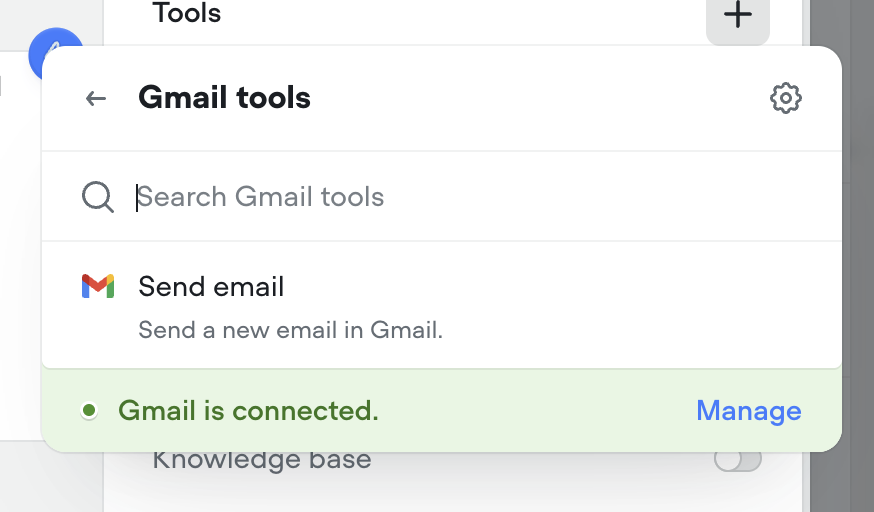

Agents can now send emails seamlessly as part of any conversation. Whether it’s a confirmation, a follow-up, or a lead-nurture message, the new Send Email tool makes it easy to automate communication right from your agent. Just connect your Gmail account and you’re ready to go.

Make sure to instruct your agent on how to use this tool properly. Give it a try in the agent step!

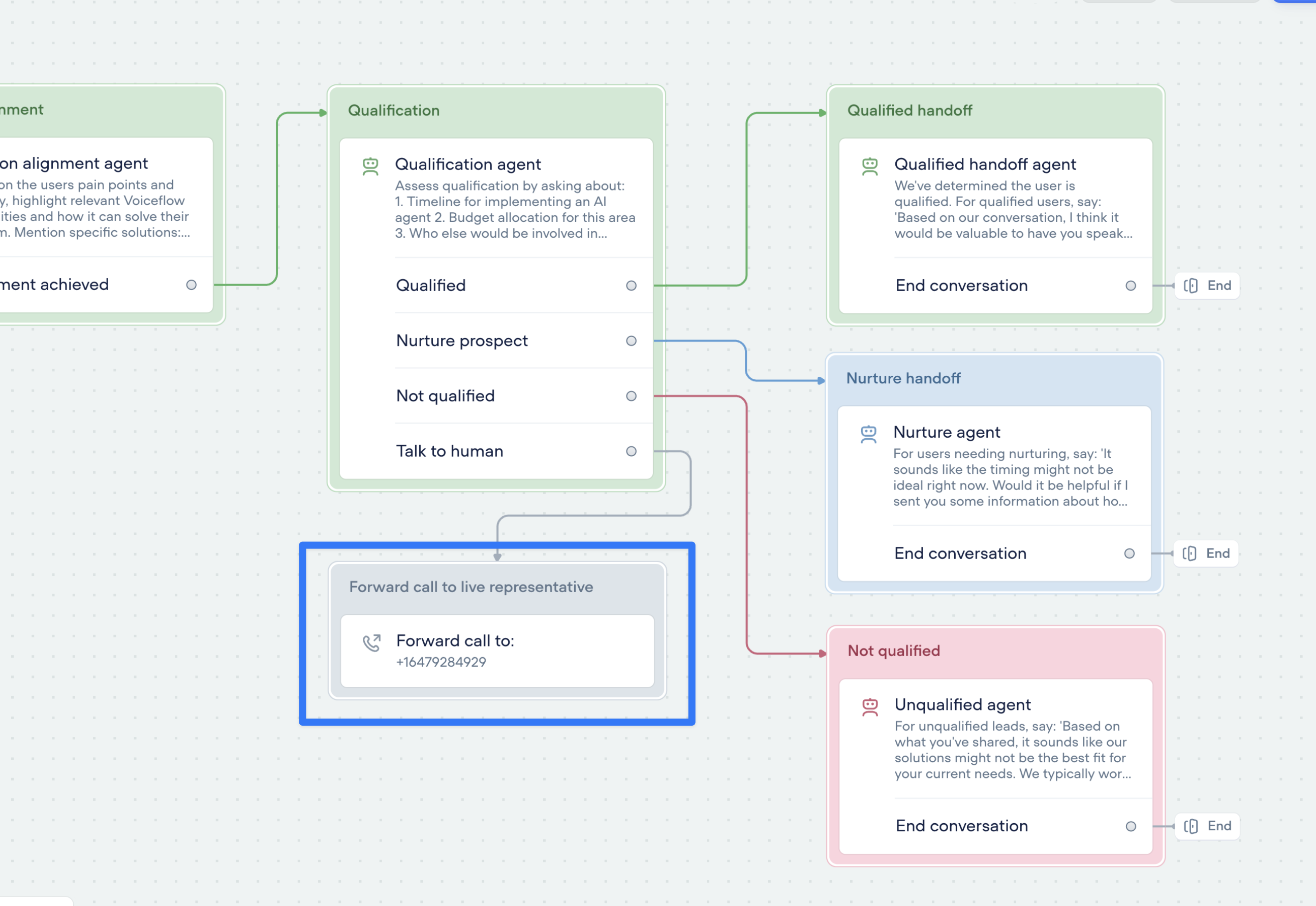

Seamlessly connect your voice AI agent to the real world with call forwarding.

The new call forwarding step lets your AI agent hand off calls to a real person (or another AI agent)—instantly and smoothly.

Build smarter, more human-ready voice agents—without sacrificing automation.

🛠️ You’ll find the call forwarding Step in the ‘Dev’ section of the step menu for now.

We’re planning to introduce a dedicated voice section soon—stay tuned!

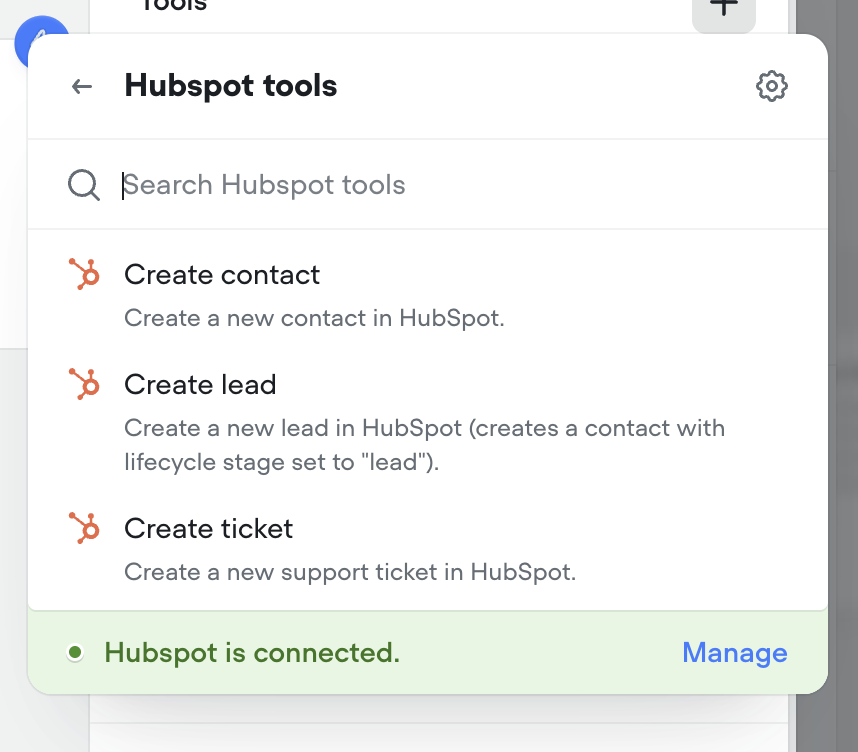

Connect your agents to HubSpot to create contacts, leads, and tickets.

Enable your agents to send SMS messages with an effortless connection to Twilio. Try it now in the agent step.