How Voiceflow fits in your tech stack

Voiceflow is primarily a platform for building advanced AI agents, and it is versatile in how it can fit into your tech stack to solve specific problems. From deploying chat and voice agents to integrating knowledge bases, this article covers the different ways Voiceflow fits into your organization's tech stack.

How to think about Voiceflow

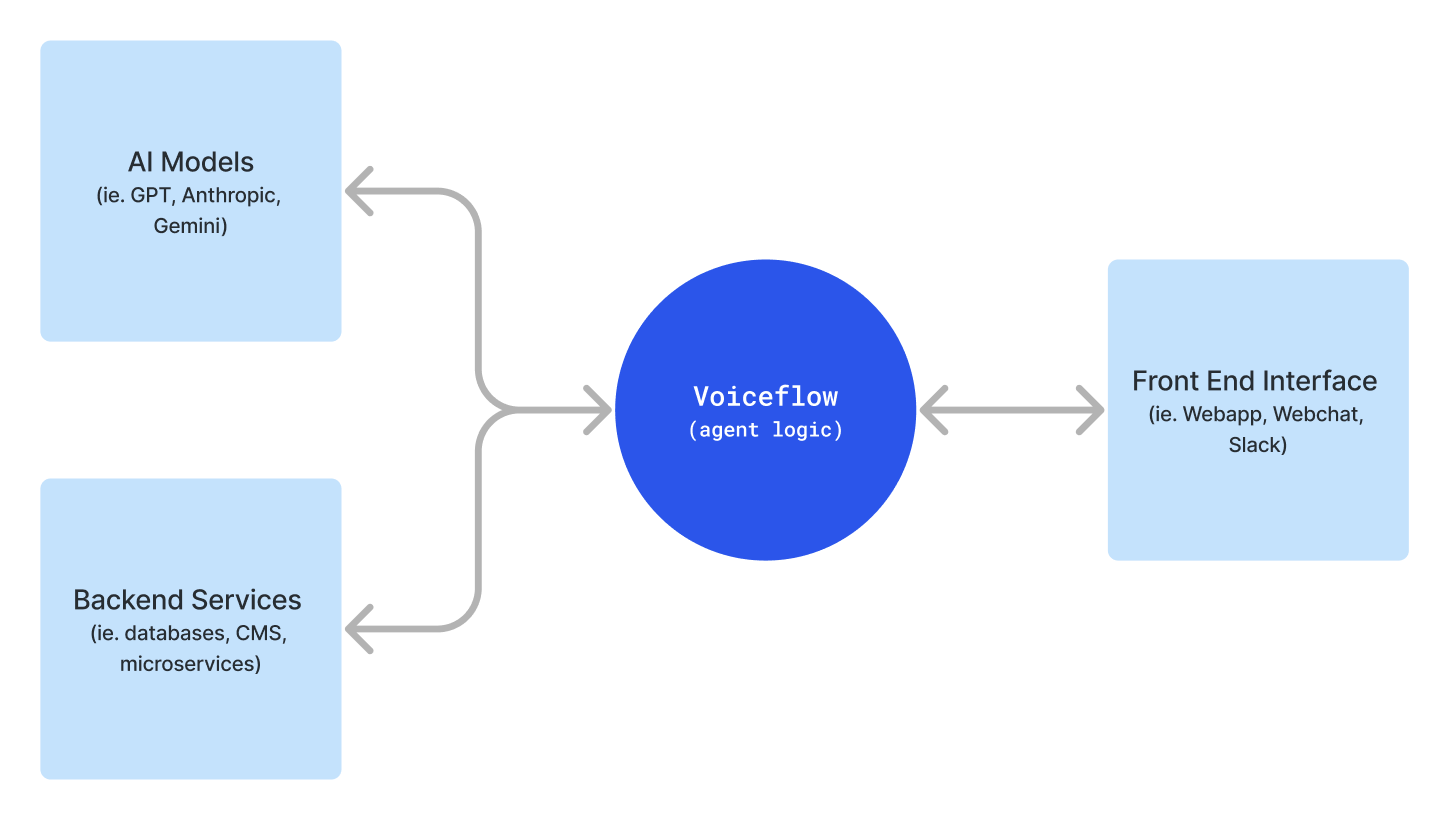

Think about Voiceflow as the orchestration layer between your front-end, back-end services, and AI models.

Voiceflow holds the agent logic and tracks where each user is in their conversation (their conversation state). It can send messages to and receive messages from your front-end interface (whether that is the first-party web chat, a custom web app, Slack, etc.) and push data to or pull data from your back-end services (like a database, CMS, or any other service with an API).

Voiceflow also has a number of AI models that it is connected to, so you can use them out of the box.

Our product focus

At Voiceflow, we prioritize extensibility and customization. Rather than creating many out-of-the-box integrations, we have focused on building powerful developer tools and APIs so you can connect to your tools your way.

Building your Agents

Sending and Receiving Data from your Back-end Services

Voiceflow is able to send and receive data from other services in two ways.

- The API Step: This is a step in Voiceflow that allows you to make an API call to any service and store the response in variables.

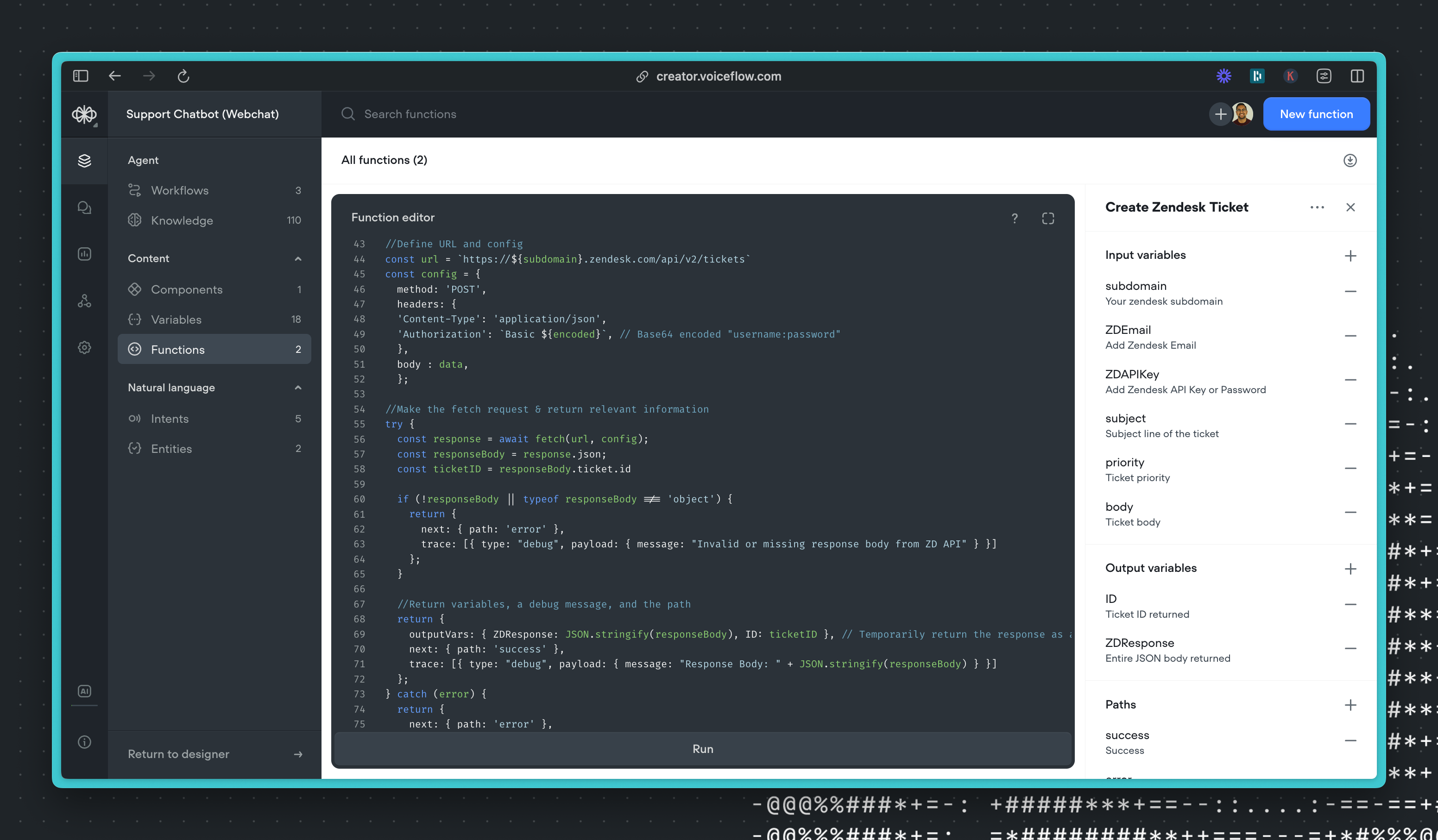

- Functions: Custom functions let you write JavaScript that can make fetch requests. You can use them to send and receive data, transform data, and return traces that imitate built-in steps (such as text or image) or custom traces like a Custom Action.

This function makes a call to the Zendesk API to create a ticket containing information about the customer.

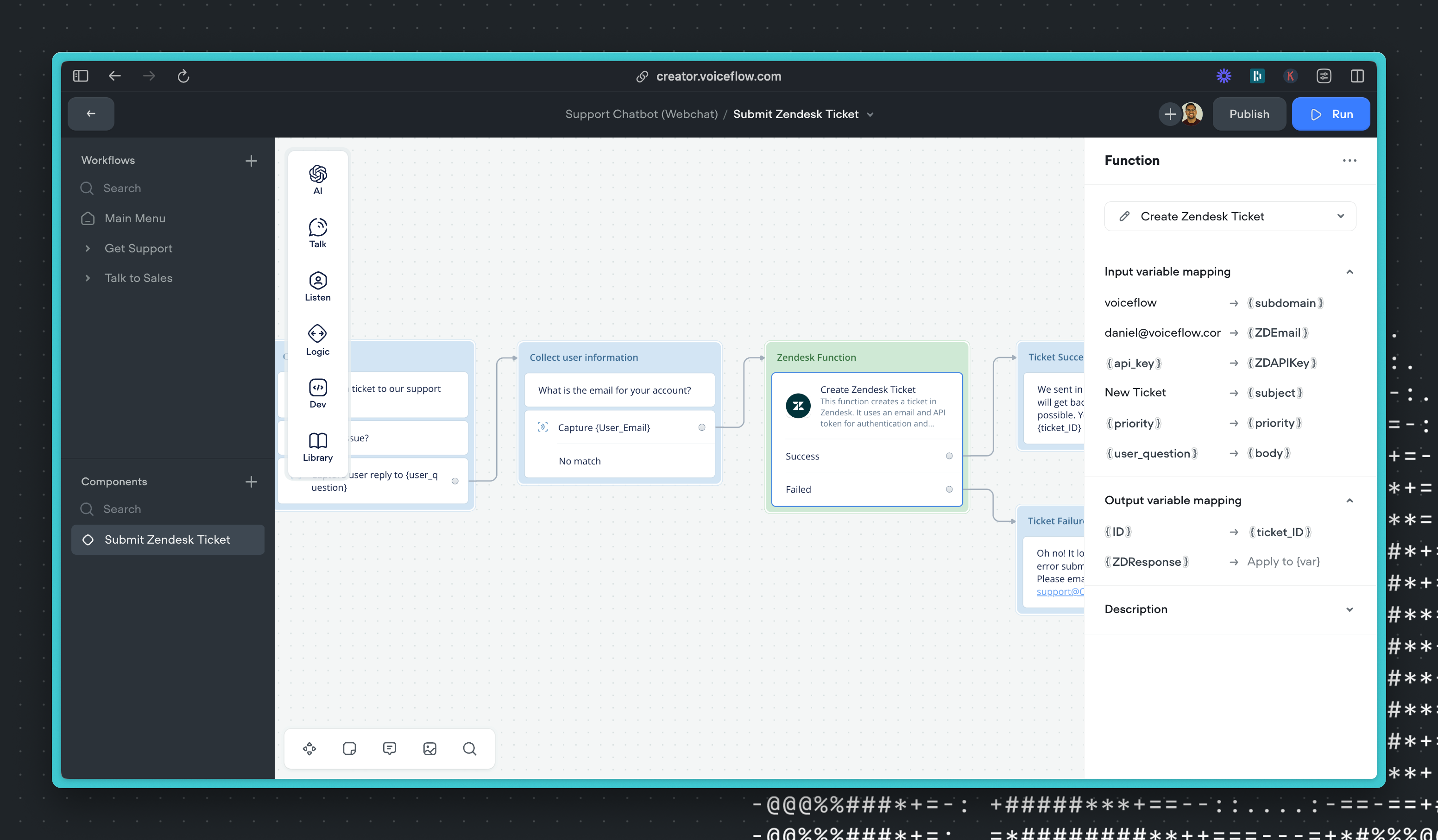

This is what the function looks like when it is added to a workflow.
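As a rough illustration of the Functions approach, the sketch below shows what a ticket-creating function might look like. It is not the function pictured above: the input variable names (`subdomain`, `agentEmail`, `apiToken`, `customerName`, `customerEmail`, `issue`), the `success`/`error` path names, and the `outputVars`/`next`/`trace` return shape are assumptions made for illustration, and the `fetch` available inside a Voiceflow function may behave differently from the standard one used here.

```javascript
// Build the HTTP request for Zendesk's "create ticket" endpoint.
// All input names are hypothetical; map them to your own variables.
function buildTicketRequest({ subdomain, agentEmail, apiToken, customerName, customerEmail, issue }) {
  return {
    url: `https://${subdomain}.zendesk.com/api/v2/tickets.json`,
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        // Zendesk API-token auth: base64 of "<email>/token:<api token>".
        Authorization: `Basic ${btoa(`${agentEmail}/token:${apiToken}`)}`,
      },
      body: JSON.stringify({
        ticket: {
          subject: `Support request from ${customerName}`,
          comment: { body: issue },
          requester: { name: customerName, email: customerEmail },
        },
      }),
    },
  };
}

// Assumed entry point: takes input variables, returns variables, a path, and traces.
async function createTicket({ inputVars }) {
  const { url, options } = buildTicketRequest(inputVars);
  const response = await fetch(url, options);
  if (!response.ok) {
    return {
      next: { path: "error" },
      trace: [{ type: "text", payload: { message: "Sorry, I could not create a ticket." } }],
    };
  }
  const { ticket } = await response.json();
  return {
    outputVars: { ticketId: String(ticket.id) },
    next: { path: "success" },
    trace: [{ type: "text", payload: { message: `Ticket #${ticket.id} created.` } }],
  };
}
```

Keeping the request construction in its own function makes it easy to check the payload without calling Zendesk.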

Using AI Models in Voiceflow

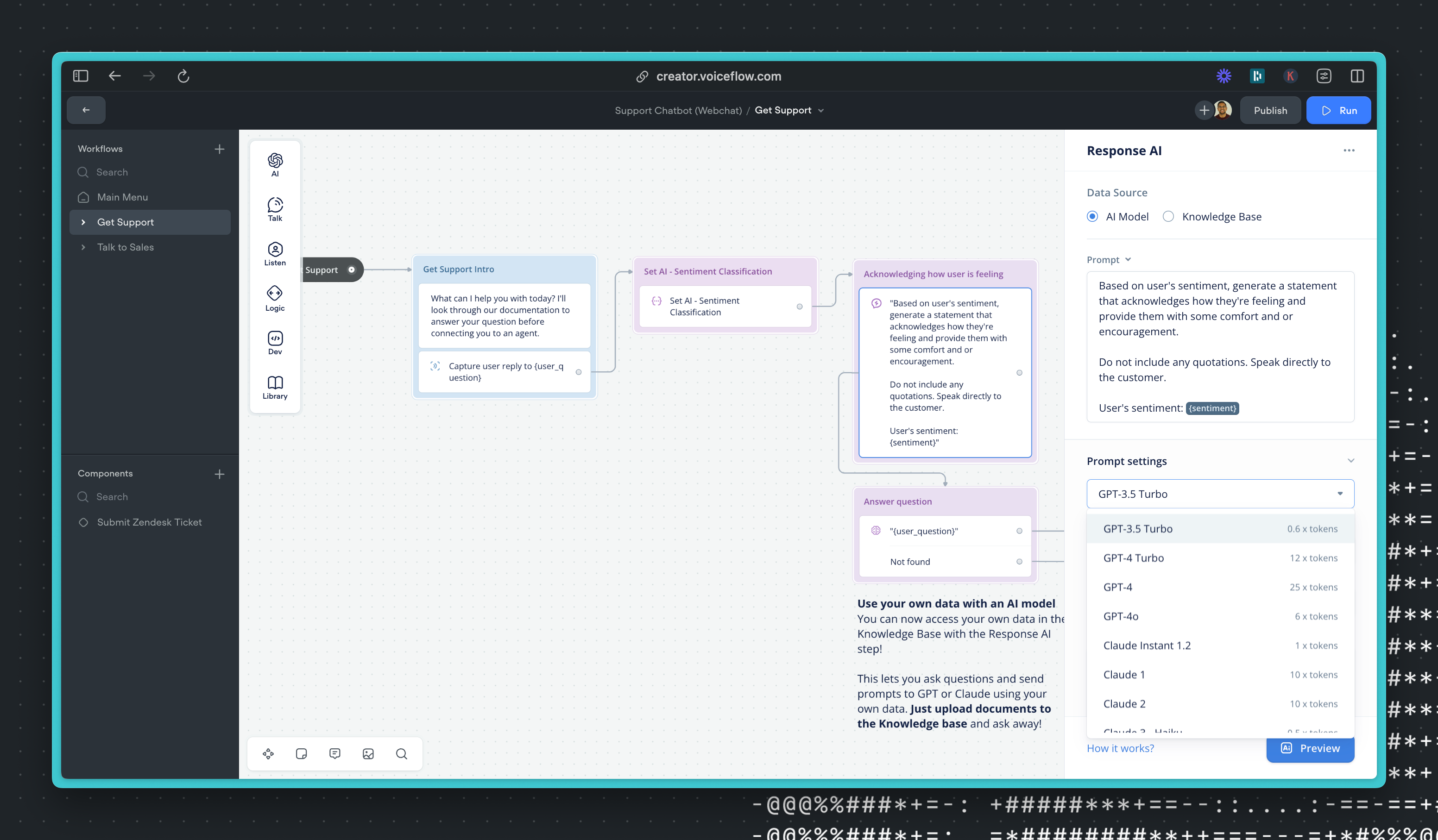

Voiceflow has a growing number of large language models (LLMs) natively built in. You can use different LLMs in different parts of your design (AI response, KB synthesis, LLM intent classification) and send them prompts containing dynamic variables. With these integrations already built in, Voiceflow saves you the time of wiring up each LLM yourself.
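To illustrate what a prompt with dynamic variables amounts to, here is a minimal sketch of filling placeholders before a prompt is sent to a model. The `{name}` placeholder syntax, the variable names, and the `fillPrompt` helper are illustrative assumptions, not a description of how Voiceflow implements this internally.

```javascript
// Replace {name} placeholders with values from `variables`.
// Unknown placeholders are left untouched so missing data is easy to spot.
function fillPrompt(template, variables) {
  return template.replace(/\{(\w+)\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(variables, name) ? String(variables[name]) : match
  );
}

const filledPrompt = fillPrompt(
  "Summarize the issue reported by {customer_name}: {last_utterance}",
  { customer_name: "Ada", last_utterance: "My order never arrived." }
);
console.log(filledPrompt);
```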

LLMs can be leveraged in the following ways (we are adding more each week):

- Response AI: Send a prompt to an LLM and display the response to the user (Markdown formatting is supported).

- Set AI: Send a prompt to an LLM and store the output in a variable (useful for leveraging AI to assist with entity capture, summarization, computation and more).

- Knowledge Base: Voiceflow has a built-in vector database and RAG solution. When you query the Knowledge Base, it uses the question to retrieve relevant chunks from the vector database, then passes those chunks along with your prompt to an LLM of your choice to synthesize a response for the user.

- LLM Intent Classification: LLMs can be used to classify intents instead of the NLU, which can often be more accurate and context-aware.

If you have a self-hosted model or want to use a model other than the ones we provide, you can bring your own (BYO) model by using a function or an API call to send information to that model and receive its output:

- Response and Set AI can be mimicked using custom functions that either return text traces or update variables. For an example of this, see the GPT-4o function we made an hour after release.

- Knowledge Base Synthesis can be done in a custom function with a BYO model by using the KB API with synthesis disabled and then using your own model through API calls to synthesize an answer.

- LLM intent classification unfortunately can't currently be easily mimicked.
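The Knowledge Base option above can be sketched as a retrieve-then-synthesize pipeline. In this sketch `retrieveChunks` stands in for a KB API call with synthesis disabled and `callModel` for a request to your own model; both are hypothetical, caller-supplied functions, and the `content` field on each chunk is an assumed shape.

```javascript
// Combine the retrieved chunks and the user's question into a single prompt.
function buildSynthesisPrompt(question, chunks) {
  const context = chunks
    .map((chunk, index) => `[${index + 1}] ${chunk.content}`)
    .join("\n");
  return [
    "Answer the question using only the context below.",
    "If the context does not contain the answer, say so.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

// retrieveChunks(question) -> Promise<Array<{ content: string }>>  (hypothetical)
// callModel(prompt)        -> Promise<string>                      (hypothetical)
async function answerWithOwnModel(question, retrieveChunks, callModel) {
  const chunks = await retrieveChunks(question);
  if (chunks.length === 0) {
    return "I could not find anything relevant in the knowledge base.";
  }
  return callModel(buildSynthesisPrompt(question, chunks));
}
```

Passing the retrieval and model calls in as functions keeps the sketch independent of any particular endpoint or model provider.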

Deploying your Agents

There are several ways to deploy your agents into your tech stack and embed them in user experiences:

Front-end website with web chat

Voiceflow's built-in web chat is the easiest way to deploy your Voiceflow agent into production. All the UI and Dialog Manager APIs are fully implemented for you.

Simply insert the out-of-the-box JavaScript snippet into your front-end HTML, typically by adding it to your default footer component in React or any other front-end framework. Regardless of where you place the snippet, the agent appears fixed to the screen, in accordance with your web chat appearance settings.

You can also embed your agent into an iframe.

You can learn more about the web chat here.
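For orientation, the sketch below shows roughly what such a snippet does: it adds a script tag to the page and, once the script has loaded, starts the chat with your project ID. Copy the real snippet from your own project rather than this one; `injectWebChat`, the `scriptUrl` placeholder, and the exact options passed to `voiceflow.chat.load` are assumptions for illustration.

```javascript
// Add the web chat script to the page and start the widget once it has loaded.
// `doc` and `win` are passed in (normally `document` and `window`) so the sketch stays testable.
function injectWebChat(doc, win, { scriptUrl, projectID }) {
  const script = doc.createElement("script");
  script.type = "text/javascript";
  script.src = scriptUrl; // placeholder: use the URL from your own snippet
  script.onload = () => {
    // Assumed shape of the loader call exposed by the widget bundle.
    win.voiceflow.chat.load({ verify: { projectID } });
  };
  doc.body.appendChild(script);
  return script;
}

// In a browser:
// injectWebChat(document, window, { scriptUrl: "<widget script URL>", projectID: "<your project ID>" });
```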

Custom front-end interfaces

Voiceflow can interact with any front-end interface. It does this through the Dialog Manager API, which sends a variety of message types called traces. Our web chat implements all of these traces out of the box, so to build a custom interface you choose how to handle each trace type (text, images, buttons, etc.).

A trace is a standard format in which Voiceflow sends a message. Below is an example of an image trace where Voiceflow is sending an image.

    {
      "type": "visual",
      "payload": {
        "visualType": "image",
        "image": "https://assets-global.website-files.com/example-file.png",
        "dimensions": {
          "width": 800,
          "height": 800
        }
      }
    }

You can use these to render an image on your front-end. There are a variety of built-in trace types that you can find here: See all trace types.
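A custom interface typically routes each trace to a renderer based on its `type`. The sketch below returns HTML strings for a few types; the `visual` shape follows the example above, while the `text` and `choice` payload shapes (`payload.message`, `payload.buttons[].name`) and the helper names are assumptions to verify against the trace reference.

```javascript
// Escape text before placing it into HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Turn one trace into an HTML string.
// Unknown types return null so the caller can skip or log them.
function renderTrace(trace) {
  const payload = trace.payload || {};
  switch (trace.type) {
    case "text":
      return `<p>${escapeHtml(payload.message)}</p>`;
    case "visual": {
      const { width, height } = payload.dimensions || {};
      const size = width && height
        ? ` width="${escapeHtml(width)}" height="${escapeHtml(height)}"`
        : "";
      return `<img src="${escapeHtml(payload.image)}"${size}>`;
    }
    case "choice":
      return (payload.buttons || [])
        .map((button) => `<button>${escapeHtml(button.name)}</button>`)
        .join("");
    default:
      return null;
  }
}
```

Returning `null` for unrecognized types gives you a single place to decide what happens when Voiceflow sends a trace your interface does not yet support.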

If there is a type of message that Voiceflow does not have, you can create a custom trace to render things like file upload, calendar pickers, or trigger any kind of custom functionality. This is available when using our built-in webchat widget or your own custom interface.

You would use our custom action functionality to do this. You can see an example of how a custom action is used with our webchat to render a custom widget.

Custom back-end apps

Using the Dialog Manager API, you can build your own custom interface that interacts directly with your Voiceflow agent.

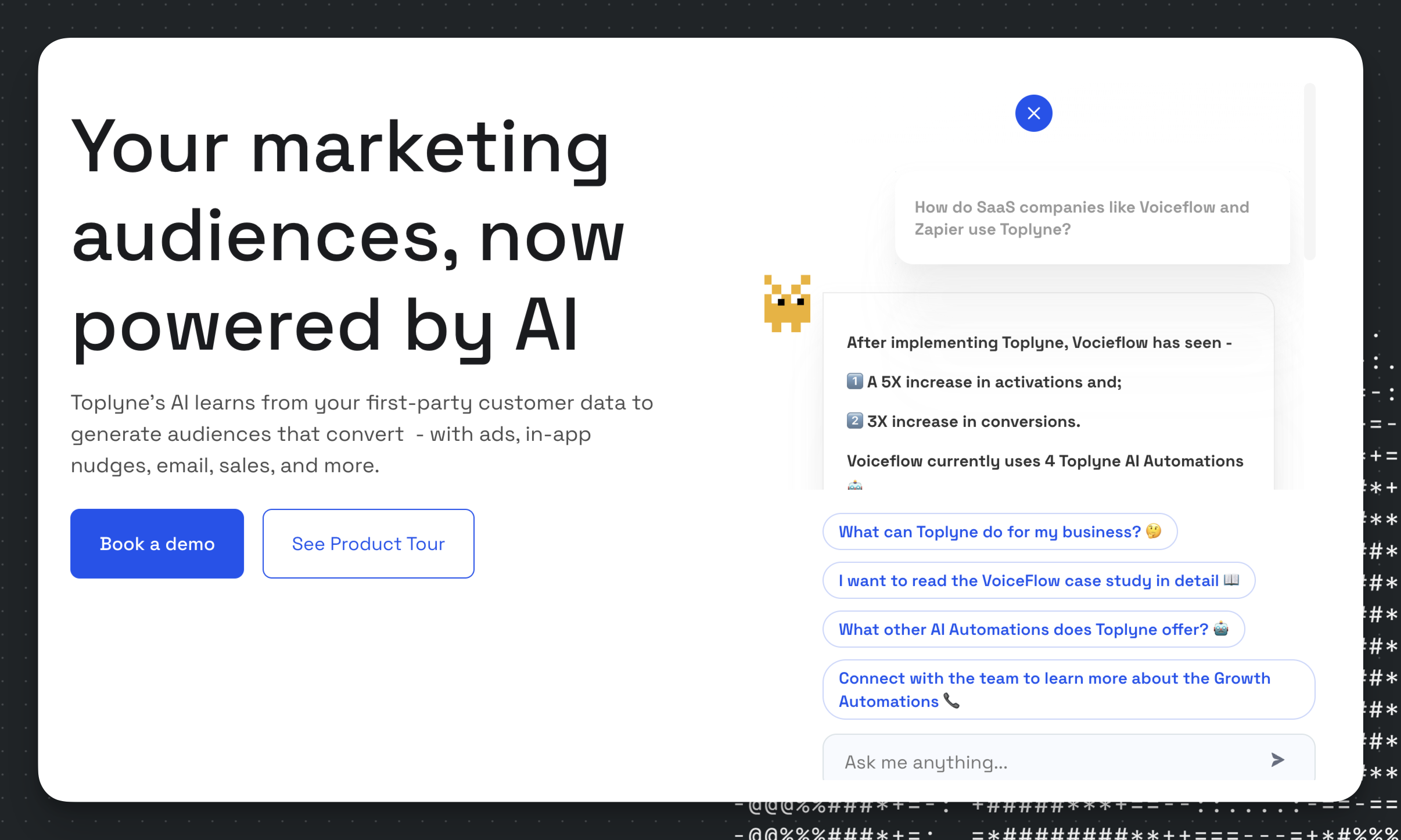

This can be used for everything from polished custom chat agent UIs, like the one toplyne.io uses on its website, to fully custom interfaces such as an AI-enhanced article reader, Voiceflow in Minecraft, or even Unity!

You can learn more about building custom interfaces here.
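A minimal sketch of one conversation turn through the Dialog Manager API might look like the following. The base URL, endpoint path, `Authorization` header, and `action` body shape shown here are assumptions to confirm against the API reference; `buildInteractRequest` and `sendTurn` are hypothetical helper names.

```javascript
const RUNTIME_URL = "https://general-runtime.voiceflow.com"; // assumed base URL

// Build the request for one turn.
// `action` is, for example, { type: "launch" } or { type: "text", payload: "hi" }.
function buildInteractRequest({ apiKey, userID, action }) {
  return {
    url: `${RUNTIME_URL}/state/user/${encodeURIComponent(userID)}/interact`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json", Authorization: apiKey },
      body: JSON.stringify({ action }),
    },
  };
}

// Send the turn and return the traces for your interface to render.
async function sendTurn(params) {
  const { url, options } = buildInteractRequest(params);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`Interact request failed with status ${response.status}`);
  }
  return response.json(); // expected to be an array of traces
}
```

The `userID` identifies the conversation whose state Voiceflow keeps, so reusing the same value across turns continues the same conversation.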

Using the Knowledge Base Independently

Through the extensive Knowledge Base Management and Query APIs, Voiceflow's Knowledge Base can also be used as an easy-to-use RAG and vector database solution, outside of being integrated directly into a Voiceflow agent.

To use a Knowledge Base on its own:

- Upload documents to a new project's Knowledge Base (text files, PDFs, URLs)

- Query the KB using the KB Query APIs

- Continuously update and add documents to your knowledge base with the KB Management APIs, for example from a CI/CD pipeline

This is useful either for using the Knowledge Base on its own or for maintaining secondary knowledge bases alongside a main project, letting you segregate different types of documents.
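Querying a standalone Knowledge Base could be sketched as follows. The endpoint URL and the `question`, `chunkLimit`, and `synthesis` body fields are assumptions to confirm against the KB Query API reference; `buildKbQuery` and `queryKnowledgeBase` are hypothetical helpers.

```javascript
// Assumed endpoint; check the KB Query API reference for the current URL.
const KB_QUERY_URL = "https://general-runtime.voiceflow.com/knowledge-base/query";

// Build a query request. Set `synthesis` to false to get raw chunks only.
function buildKbQuery({ apiKey, question, chunkLimit = 3, synthesis = true }) {
  return {
    url: KB_QUERY_URL,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json", Authorization: apiKey },
      body: JSON.stringify({ question, chunkLimit, synthesis }),
    },
  };
}

// Send the query and return the parsed response body.
async function queryKnowledgeBase(params) {
  const { url, options } = buildKbQuery(params);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`KB query failed with status ${response.status}`);
  }
  return response.json();
}
```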
