Prompts CMS

This feature is part of the old way to build using Voiceflow.

Although this feature remains available, we recommend using the Agent step and Tool step to build modern AI agents. Legacy features, such as the Capture step and Intents, often consume more credits and lead to a worse user experience.

The Prompts CMS is a centralized hub for creating, managing, and testing prompts used by your conversational agents. It offers a structured way to handle complex prompts, ensuring consistency and reusability across your projects. With the Prompts CMS, you can define sophisticated prompts that leverage system prompts, user-agent message pairs, variables, and more.

Accessing the Prompts CMS

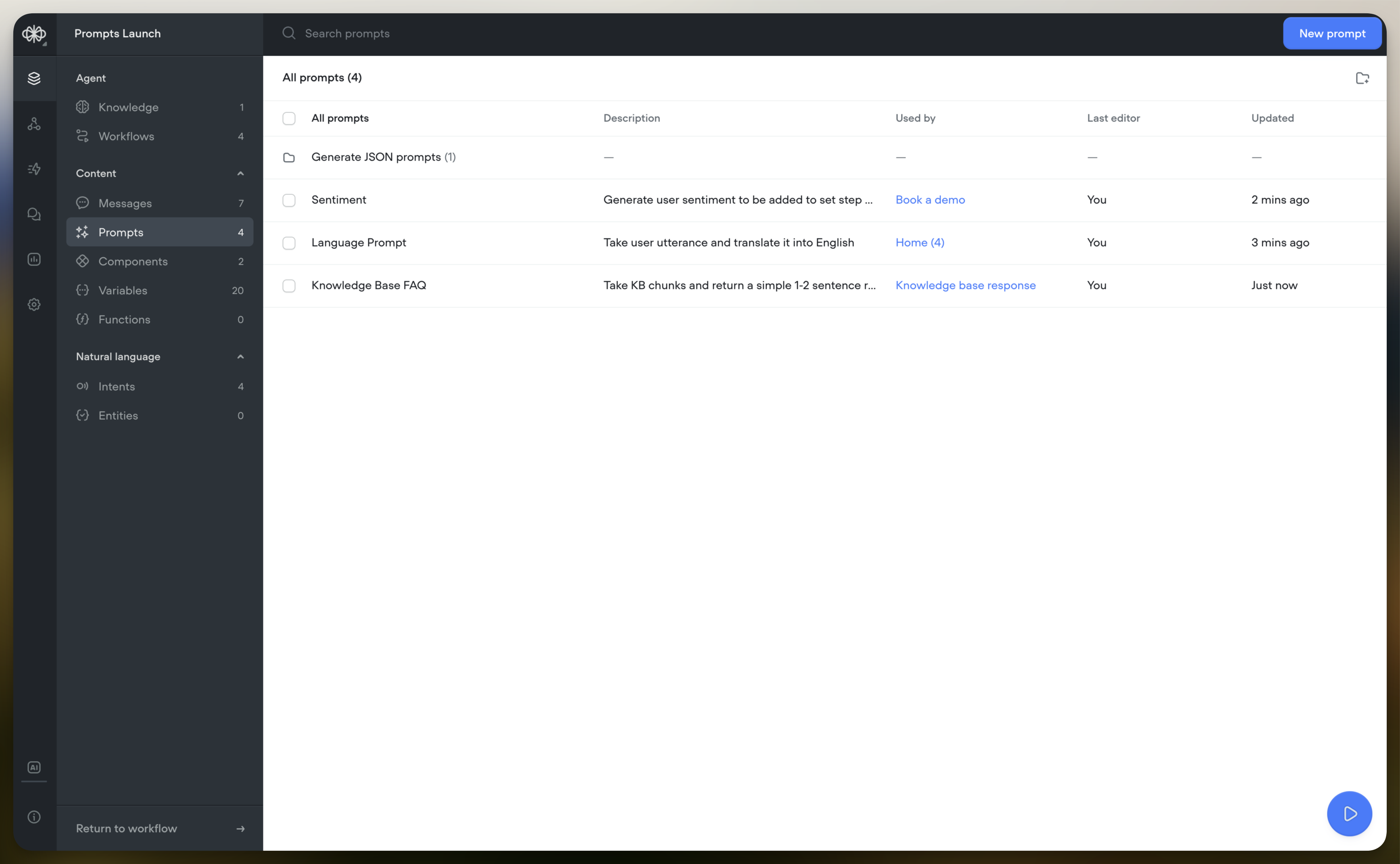

- Open “Prompts”: In the Agent CMS, choose Prompts to access the prompts management interface.

Understanding the Prompts Table

The Prompts CMS displays a table listing all your prompts with the following columns:

- Name: The name of the prompt.

- Description: A brief description of the prompt’s purpose.

- Used By: Indicates where the prompt is used within your agent. This will include links to specific workflows and steps.

- Last Editor: The team member who last edited the prompt.

- Updated: The date and time when the prompt was last modified.

Creating a New Prompt

1. Start the Creation Process

- Click “New Prompt”: Located at the top-right corner of the Prompts table.

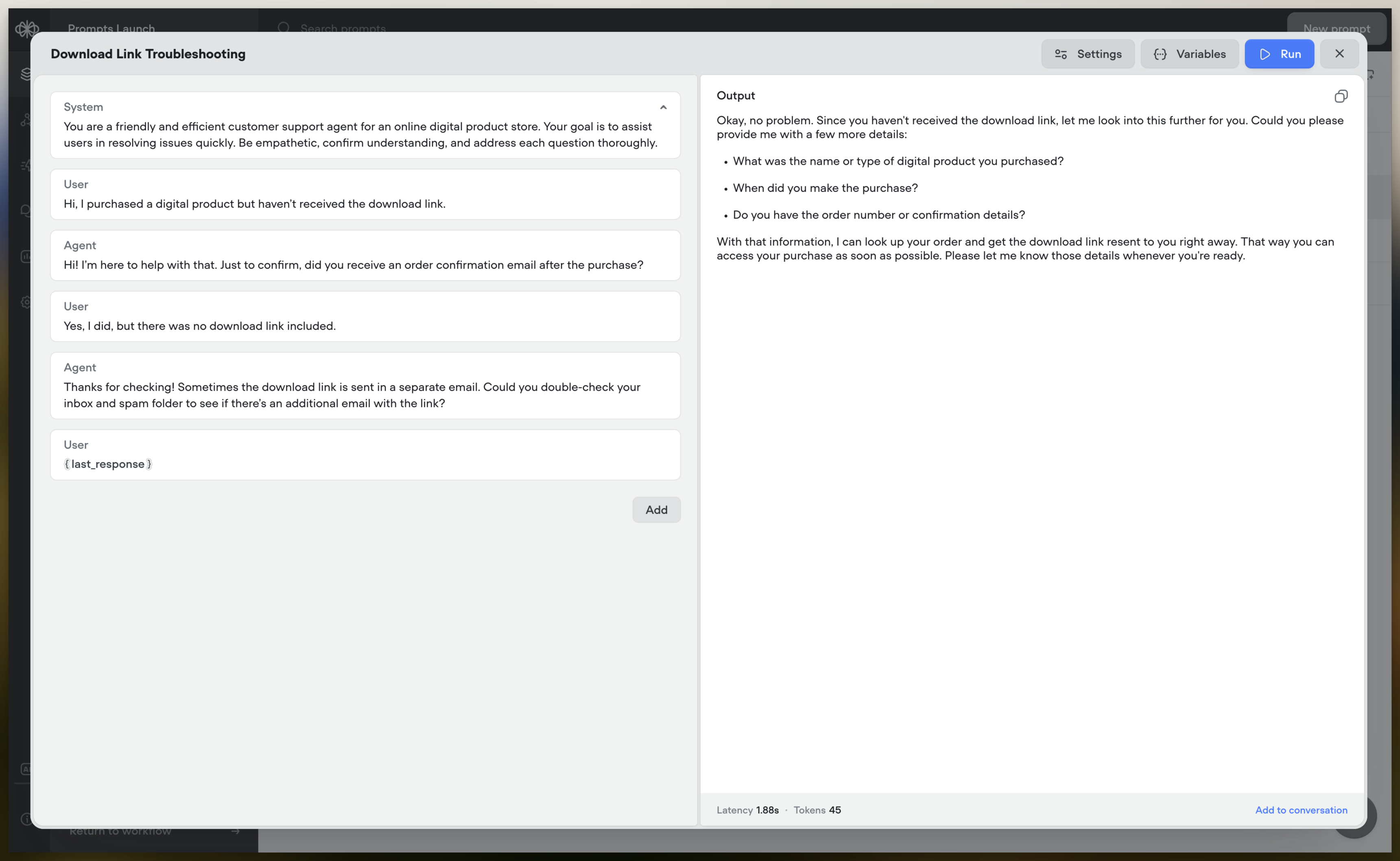

2. The Prompt Editor

- Upon clicking, the Prompt Editor appears with two main sections:

- Left Side (Prompt Definition): Where you define and configure your prompt.

- Right Side (Output Preview): Displays the generated output when you test the prompt.

3. Configuring the Prompt

- Prompt Name: Enter a clear and descriptive name for your prompt.

- Description (Optional): Provide additional details about the prompt’s purpose.

Prompt Configuration

System Prompt

- Purpose: Sets the overall behaviour or guidelines for the AI model.

- Usage: Click on the System Prompt box to enter your instructions.

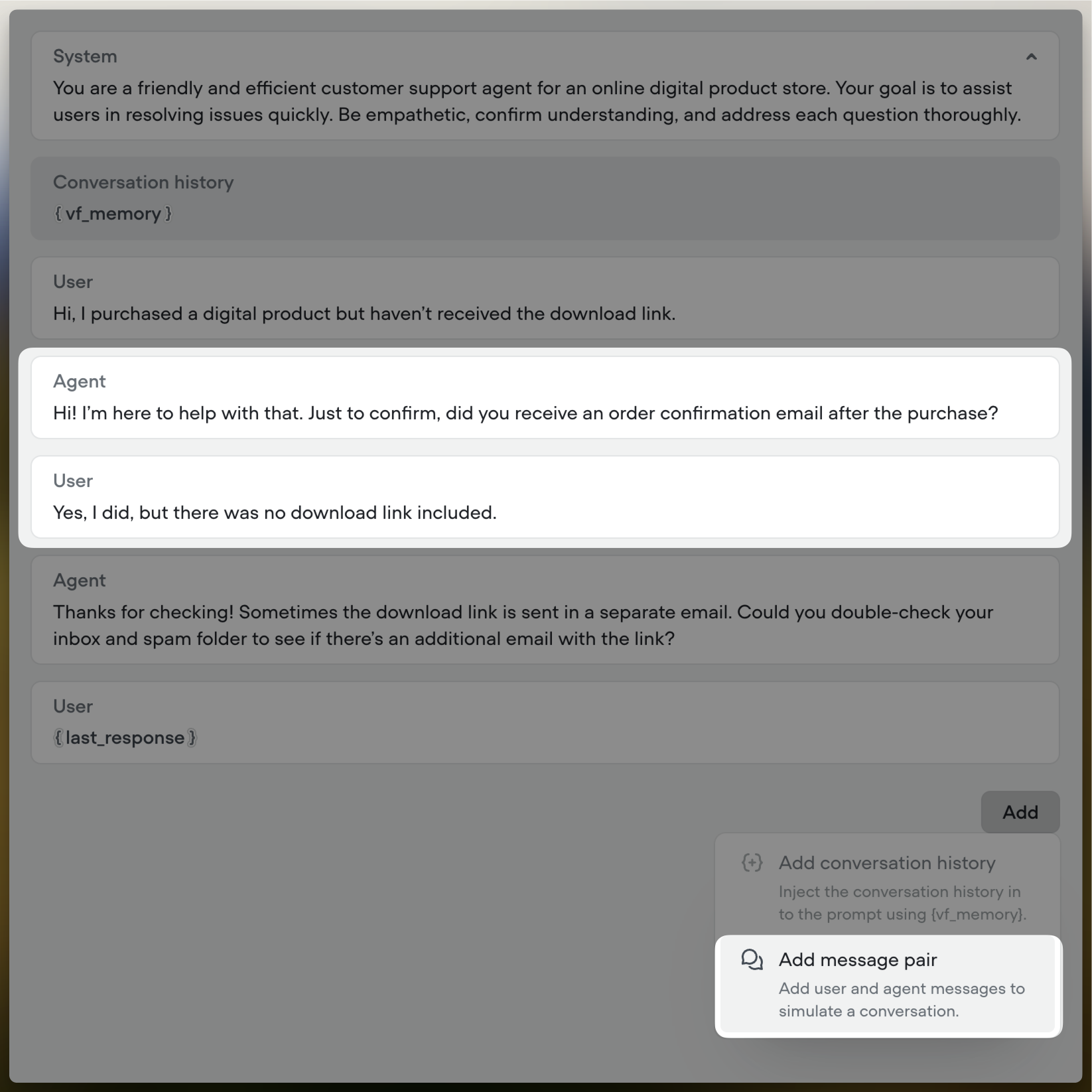

User and Agent Messages

- User Prompt: The initial message from the user.

- Adding Message Pairs:

- Click “Add”: Choose Add Message Pair.

- New Boxes Appear: An Agent box and a User box are added.

- Enter Messages: Fill in the agent’s response and the user’s subsequent input.

- Deleting Message Pairs:

- Deleted Together: Message pairs are added and deleted as a unit.

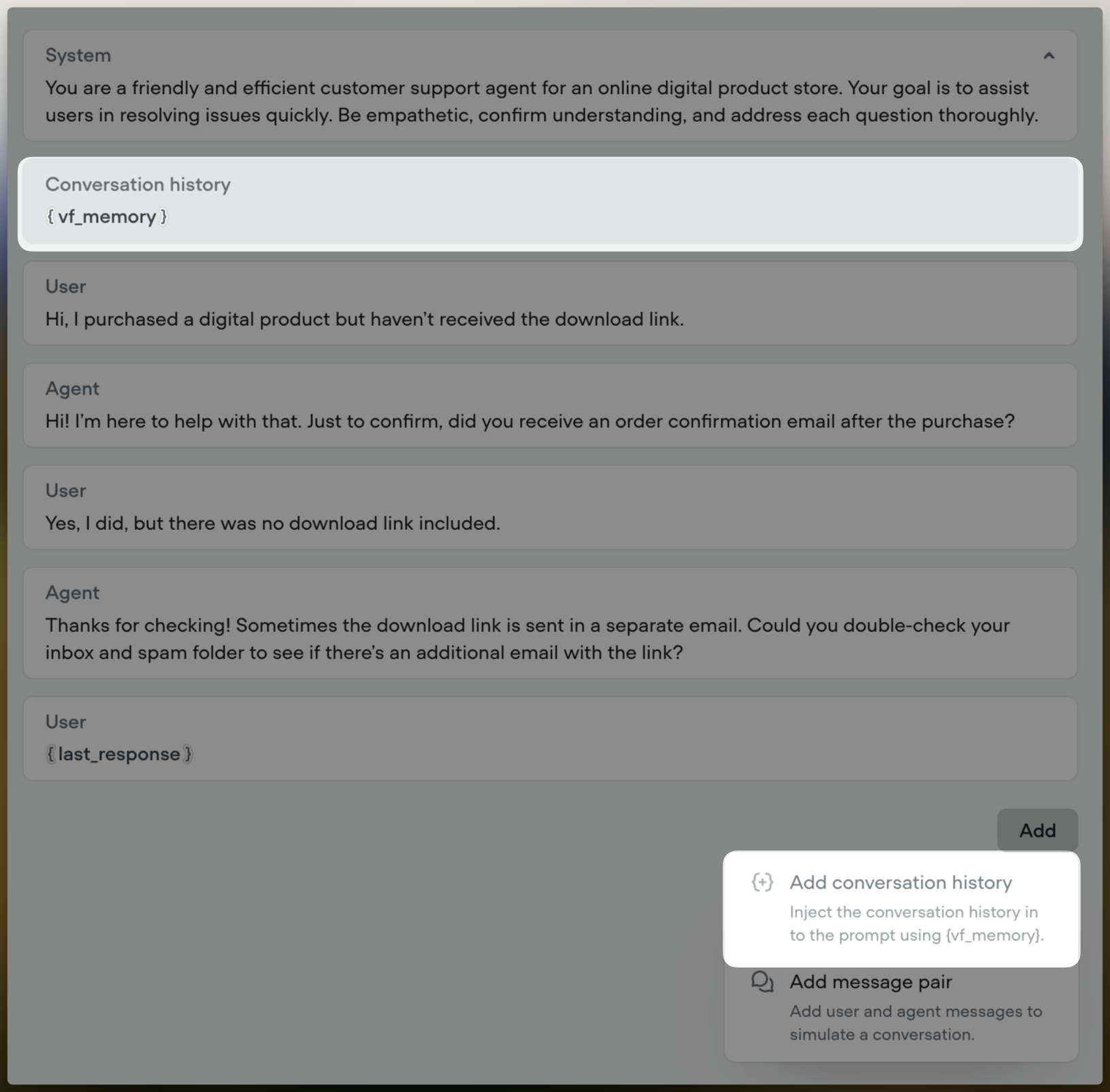
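The way a system prompt, the first user prompt, and any added message pairs combine can be pictured as one ordered list of messages. The sketch below is only an illustration: the `build_messages` helper and the `system`/`user`/`assistant` role names follow a common chat-model convention and are assumptions, not Voiceflow's internal format.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(
    system_prompt: str,
    first_user_message: str,
    pairs: List[Tuple[str, str]],
) -> List[Message]:
    """Flatten a prompt definition into one ordered message list.

    Each pair is (agent_message, user_message), mirroring how the editor
    adds an Agent box and a User box together.
    """
    messages: List[Message] = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": first_user_message},
    ]
    for agent_text, user_text in pairs:
        # A pair is always appended as a unit: agent reply, then user follow-up.
        messages.append({"role": "assistant", "content": agent_text})
        messages.append({"role": "user", "content": user_text})
    return messages


messages = build_messages(
    "You are a concise support assistant.",
    "Where is my order?",
    [("Could you share your order number?", "It is 12345.")],
)
```

Because pairs are appended together, the list always ends on a user message, which is the turn the model is asked to answer.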

Conversation History

- Add Conversation History:

- Click “Add”: Choose Add Conversation History.

- Voiceflow Memory Variable: A special block representing vf_memory is inserted.

- Placement:

- Drag and Drop: Move the conversation history block to the desired position within your prompt.

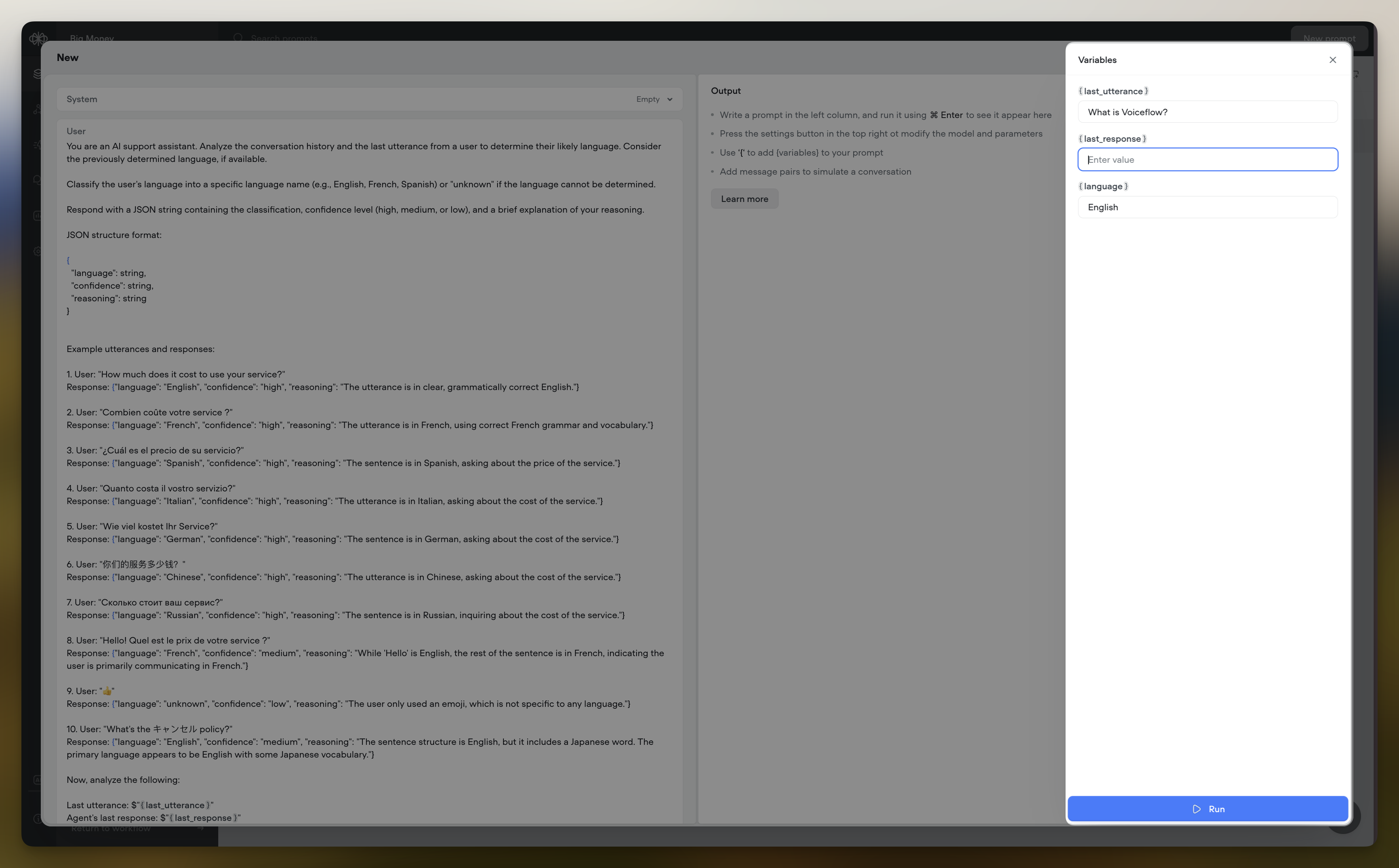

Prompt Variables

- Access Variables: Click on the Variables button in the header.

- Manage Variables:

- View Used Variables: Displays all variables referenced in your prompt (e.g., {agent_persona}).

- Set Test Values: Enter values for these variables to test how the prompt behaves with different inputs.

- Usage in Prompt:

- Syntax: Use curly braces {} to include variables in your prompt.

- Example: “As a helpful assistant named {agent_persona}, I will…”

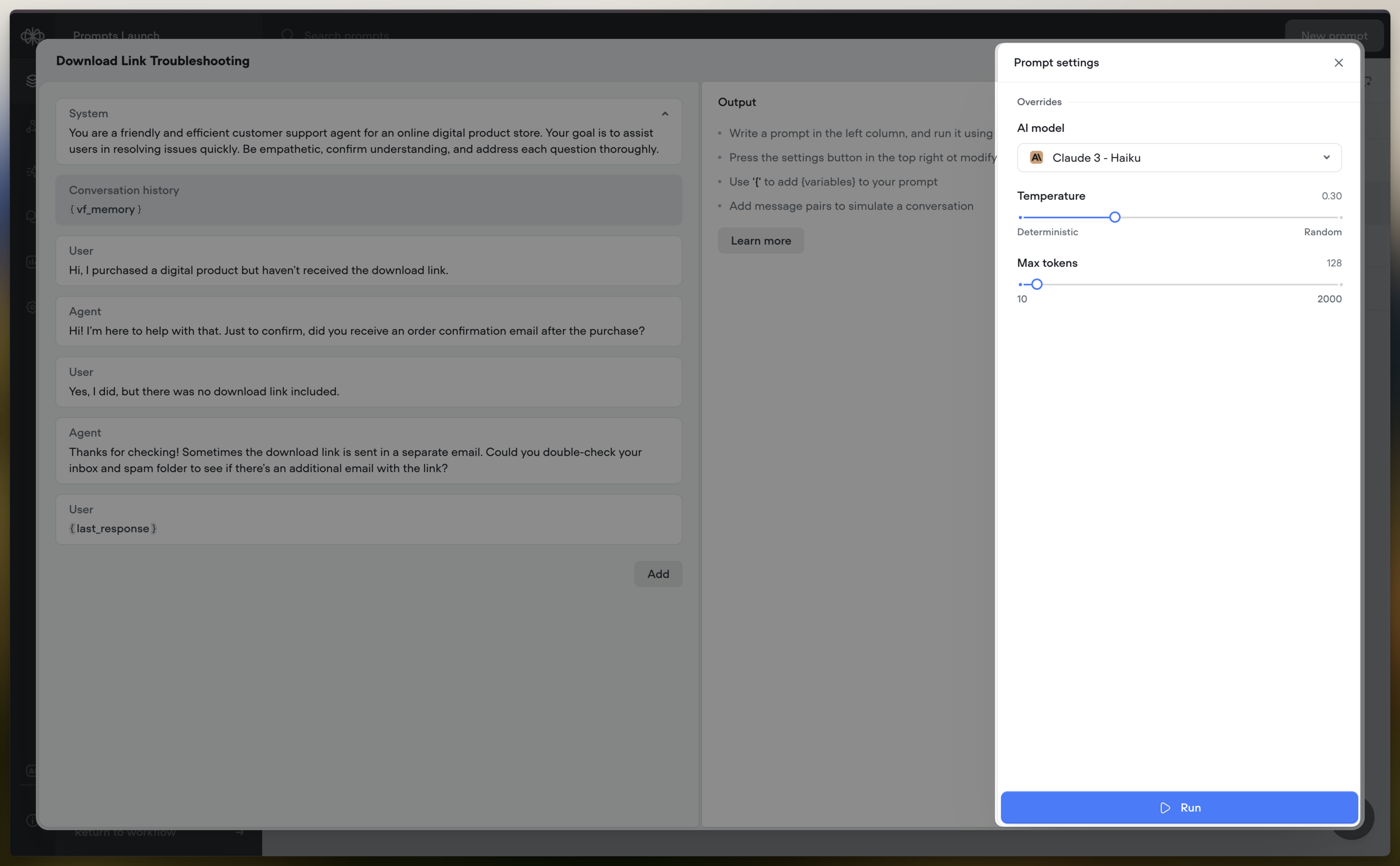
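To make the curly-brace syntax concrete, here is a minimal sketch of how test values might be substituted into a prompt. The `render_prompt` and `used_variables` helpers are hypothetical and only approximate the behaviour described above; the actual substitution rules used by Voiceflow may differ.

```python
import re
from typing import Dict, List

# Matches {variable_name}, where the name is letters, digits, or underscores.
VARIABLE_PATTERN = re.compile(r"\{(\w+)\}")


def used_variables(template: str) -> List[str]:
    """Return the distinct variable names referenced in the template."""
    return sorted(set(VARIABLE_PATTERN.findall(template)))


def render_prompt(template: str, values: Dict[str, str]) -> str:
    """Replace each {name} with its test value; unknown names are left as-is."""

    def substitute(match: "re.Match[str]") -> str:
        name = match.group(1)
        return values.get(name, match.group(0))

    return VARIABLE_PATTERN.sub(substitute, template)


template = "As a helpful assistant named {agent_persona}, I will help with {topic}."
rendered = render_prompt(template, {"agent_persona": "Ava"})
```

Leaving unknown variables untouched, rather than replacing them with an empty string, makes a missing test value easy to spot in the rendered prompt.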

Prompt Settings

- Access Model Settings: Click on the Settings button in the header.

- AI Model Selection: Choose the AI model to use for generating responses.

- Temperature: Adjust the creativity level of the AI’s output.

- Max Tokens: Set the maximum length of the generated response.

Using Structured Outputs

Structured Outputs allow you to define the format of the data you expect the AI to return when using the Prompt step. This gives you more control and predictability over the generated responses.

Enabling Structured Outputs

- Select a Prompt: In the Prompt step editor, choose the prompt you want to use.

- Open Prompt Settings: Select the Settings tab.

- Enable JSON Output: In the prompt settings, toggle on the "JSON Output" option.

- Define Output Structure: Specify the expected structure of the AI's response using the JSON Schema format. This includes defining the data types, required properties, and any additional constraints.

- Test: Run the prompt in the editor to confirm that the response is generated as expected and that the output structure contains no errors.

Example Output Format Definitions

Here are a couple of examples showcasing how you can define the output format using JSON Schema:

Example 1: Sentiment Analysis

    {
      "type": "object",
      "required": [
        "sentiment"
      ],
      "properties": {
        "sentiment": {
          "enum": [
            "happy",
            "neutral",
            "sad"
          ],
          "type": "string"
        }
      },
      "additionalProperties": false
    }

In this example, the output format specifies that the AI should return an object with a single required property called sentiment. The sentiment property is a string that must be one of the enumerated values: "happy", "neutral", or "sad".
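If the structured response is consumed outside Voiceflow, it can be checked against the same rules as the sentiment schema. The following is a hand-written sketch using only the Python standard library; `parse_sentiment` is a hypothetical helper and does not replace a full JSON Schema validator.

```python
import json

ALLOWED_SENTIMENTS = {"happy", "neutral", "sad"}


def parse_sentiment(raw: str) -> str:
    """Parse a JSON string and enforce the sentiment schema's constraints."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    # "required": ["sentiment"] plus "additionalProperties": false means
    # the object must contain exactly this one key.
    if set(data.keys()) != {"sentiment"}:
        raise ValueError("object must contain only the 'sentiment' property")
    sentiment = data["sentiment"]
    if not isinstance(sentiment, str) or sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment value: {sentiment!r}")
    return sentiment


result = parse_sentiment('{"sentiment": "happy"}')
```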

Example 2: Problem Solving Steps

    {
      "type": "object",
      "properties": {
        "steps": {
          "type": "string",
          "description": "A single string where each step is separated by a comma. Each step includes 'explanation' and 'output' concatenated with a colon. Never use double quotes in the string."
        },
        "final_answer": {
          "type": "string",
          "description": "The final answer to the problem as a string."
        }
      },
      "required": [
        "steps",
        "final_answer"
      ],
      "additionalProperties": false
    }

This example defines an output format where the AI should return an object with two required properties: steps and final_answer. The steps property is a string containing problem-solving steps, with each step separated by a comma and including an explanation and output. The final_answer property is a string representing the final answer to the problem.
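Since steps is returned as one delimited string, downstream code has to split it back into individual steps. A minimal sketch, assuming the model follows the described format exactly; `parse_steps` is a hypothetical helper, and the sample string is invented for illustration.

```python
from typing import List, Tuple


def parse_steps(steps: str) -> List[Tuple[str, str]]:
    """Split 'explanation: output, explanation: output' into (explanation, output) tuples.

    This is deliberately naive: an explanation that itself contains a comma
    or a colon would be split in the wrong place.
    """
    parsed: List[Tuple[str, str]] = []
    for chunk in steps.split(","):
        chunk = chunk.strip()
        if not chunk:
            continue
        # Split on the first colon only; the rest belongs to the output.
        explanation, _, output = chunk.partition(":")
        parsed.append((explanation.strip(), output.strip()))
    return parsed


sample = "Subtract 3 from both sides: 2x = 8, Divide by 2: x = 4"
parsed_steps = parse_steps(sample)
```

If steps need to carry commas or colons, defining steps as an array of objects in the schema would avoid the delimiter ambiguity, since arrays and objects are among the supported data types.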

Working with Structured Outputs

- Accessing Response Data: The AI-generated response will adhere to the defined structure.

- Dot Notation: Access specific properties of the structured response using dot notation (e.g., response.propertyName).

- Conditional Logic: Use the structured data to create conditional paths in your workflow based on the AI's response.
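The idea behind dot notation and conditional paths can be sketched in plain Python. In Voiceflow this logic is configured on the canvas rather than written as code; the `get_path` and `choose_path` helpers, the `response` variable name, and the path labels below are assumptions made for illustration.

```python
from typing import Any, Dict


def get_path(data: Dict[str, Any], path: str) -> Any:
    """Resolve a dotted path such as 'response.sentiment' inside nested dicts."""
    current: Any = data
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            raise KeyError(path)
        current = current[key]
    return current


def choose_path(variables: Dict[str, Any]) -> str:
    """Pick a workflow branch based on the structured response."""
    sentiment = get_path(variables, "response.sentiment")
    if sentiment == "sad":
        return "escalate"
    return "continue"


variables = {"response": {"sentiment": "sad"}}
branch = choose_path(variables)
```

Because the schema restricts sentiment to a fixed set of values, each branch condition can be an exact comparison rather than a fuzzy text match.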

Supported Data Types

At launch, Structured Outputs support the following data types:

- String

- Number

- Boolean

- Integer

- Enum

- Arrays

- Objects

Model Compatibility

Structured Outputs are currently available with the gpt-4o-mini, gpt-4o and o3-mini models.

Testing Your Prompt

Running the Prompt

- Click “Run”: Located within the Prompt Editor.

- View Output: The right side displays the AI-generated response based on your prompt and variable values.

Monitoring Performance

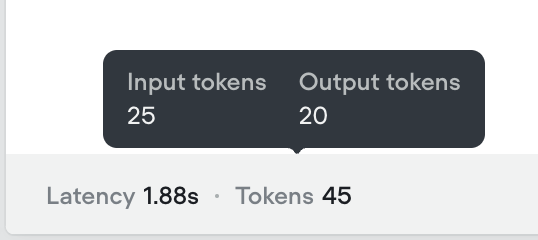

- Tokens Consumed: The footer shows the total number of tokens used.

- Detailed View: Hover over the token count to see a breakdown of input and output tokens.

- Latency: Shows the time taken to generate the response.

Iterating

- Adjust Variables: Change values in the Variables tab to test different scenarios.

- Modify Prompt: Edit the prompt content to refine the output.

- Re-run Tests: Click “Run” again to see updated results.

Best Practices for Creating Prompts

Use Clear and Descriptive Names

- Ease of Selection: Makes it easier to identify the prompt when using the Prompt step.

- Organization: Helps manage prompts as your collection grows.

Leverage Message Pairs

- Context Building: Simulate conversation history to provide context to the AI.

- Improved Responses: Leads to more accurate and relevant outputs.

Keep Prompts Modular

- Reusability: Design prompts that can be used in multiple contexts.

- Maintainability: Easier to update and manage over time.

Learn more

- Prompt step: Learn how to integrate prompts directly into your agent’s flow.

- Set step: Discover how to dynamically assign prompt outputs to variables for greater control over agent behaviour.
