Streaming API for Real-Time Interactions

We're excited to announce the release of our new Streaming API endpoint, designed to enhance real-time interactions with your Voiceflow agents. This feature allows you to receive server-sent events (SSE) in real time using the text/event-stream format, providing immediate responses and a smoother conversational experience for your users.

Key Highlights

- Real-Time Event Streaming: Receive immediate trace events as your Voiceflow project progresses, allowing for dynamic and responsive conversations.

- Improved User Experience: Drastically reduce latency by sending information to users as soon as it's ready, rather than waiting for the entire turn to finish.

- Support for Long-Running Operations: Break up long-running steps (e.g., API calls, AI responses, JavaScript functions) by sending immediate feedback to the user while processing continues in the background.

- Streaming LLM Responses: With the completion_events query parameter set to true, stream large language model (LLM) responses (e.g., from Response AI or Prompt steps) as they are generated, providing instant feedback to users.

How to Use the Streaming API

Endpoint

POST /v2/project/{projectID}/user/{userID}/interact/stream

Required Headers

Accept: text/event-stream
Authorization: {Your Voiceflow API Key}
Content-Type: application/json

Query Parameters

completion_events (optional): Set to true to enable streaming of LLM responses as they are generated.

Example Request

curl --request POST \
  --url https://general-runtime.voiceflow.com/v2/project/{projectID}/user/{userID}/interact/stream \
  --header 'Accept: text/event-stream' \
  --header 'Authorization: {Your Voiceflow API Key}' \
  --header 'Content-Type: application/json' \
  --data '{
    "action": {
      "type": "launch"
    }
  }'
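For readers calling the endpoint from code rather than curl, the same request can be sketched in Python with only the standard library. The function name `build_stream_request` and its parameters are illustrative, not part of any Voiceflow SDK; the URL, headers, and body mirror the curl example, and the request is only built here, not sent.

```python
import json
import urllib.parse
import urllib.request

# Base URL taken from the curl example; adjust if your runtime host differs.
BASE_URL = "https://general-runtime.voiceflow.com"


def build_stream_request(project_id, user_id, api_key, action, completion_events=False):
    """Build (but do not send) a POST request for the streaming endpoint.

    `build_stream_request` is a hypothetical helper written for illustration.
    """
    # Percent-encode the path segments in case an ID contains special characters.
    project = urllib.parse.quote(project_id, safe="")
    user = urllib.parse.quote(user_id, safe="")
    url = f"{BASE_URL}/v2/project/{project}/user/{user}/interact/stream"
    if completion_events:
        url += "?completion_events=true"

    body = json.dumps({"action": action}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Accept": "text/event-stream",
            "Authorization": api_key,
            "Content-Type": "application/json",
        },
    )
```

Passing the result to `urllib.request.urlopen` returns a response object that can be iterated line by line, which suits an event stream where lines arrive over time.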

Example Response

event: trace
id: 1
data: {
  "type": "text",
  "payload": {
    "message": "Give me a moment..."
  },
  "time": 1725899197143
}

event: trace
id: 2
data: {
  "type": "debug",
  "payload": {
    "type": "api",
    "message": "API call successfully triggered"
  },
  "time": 1725899197146
}

event: trace
id: 3
data: {
  "type": "text",
  "payload": {
    "message": "Got it, your flight is booked for June 2nd, from London to Sydney."
  },
  "time": 1725899197148
}

event: end
id: 4
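A stream like the one above can be consumed with a small server-sent events parser. The sketch below is a minimal one and rests on two assumptions: that on the wire each JSON payload arrives on `data:` lines as the SSE format prescribes (the example response is laid out for readability), and that events without data, such as `end`, should still be surfaced to the caller. The name `parse_sse` is illustrative.

```python
import json


def parse_sse(lines):
    """Yield (event, id, data) tuples from an iterable of decoded SSE lines.

    `data` is the parsed JSON payload, or None when the event carried no data.
    Unlike a strict SSE client, events without data (e.g. "end") are still
    yielded; that is a deliberate assumption for this API.
    """
    event, event_id, data_lines = None, None, []

    def flush():
        payload = json.loads("\n".join(data_lines)) if data_lines else None
        return event, event_id, payload

    for line in lines:
        line = line.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if event is not None or event_id is not None or data_lines:
                yield flush()
            event, event_id, data_lines = None, None, []
            continue
        if line.startswith(":"):
            continue  # comment / keep-alive line
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "event":
            event = value
        elif field == "id":
            event_id = value
        elif field == "data":
            data_lines.append(value)

    # Flush a final event if the stream ended without a trailing blank line.
    if event is not None or event_id is not None or data_lines:
        yield flush()
```

A caller would typically loop over the tuples, handle each `trace` payload by its `type`, and stop when an `end` event arrives.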

Streaming LLM Responses with completion_events

By setting completion_events=true, you can stream responses from LLMs token by token as they are generated. This is particularly useful for steps like Response AI or Prompt, where responses may be lengthy.

Example Response with completion_events=true

event: trace
id: 1
data: {
  "type": "completion",
  "payload": {
    "state": "start"
  },
  "time": 1725899197143
}

event: trace
id: 2
data: {
  "type": "completion",
  "payload": {
    "state": "content",
    "content": "Welcome to our service. How can I help you today? Perh"
  },
  "time": 1725899197144
}

... [additional content events] ...

event: trace
id: 6
data: {
  "type": "completion",
  "payload": {
    "state": "end"
  },
  "time": 1725899197148
}

event: end
id: 7
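To show partial text in a UI while a completion is still streaming, the `content` chunks can be stitched together as they arrive. The sketch below assumes each `content` event carries only the newly generated chunk rather than the full text so far, which the example suggests but does not state outright. The function name `iter_completion_text` is illustrative, and the input is a sequence of already-parsed trace dictionaries.

```python
def iter_completion_text(traces):
    """Yield the running text after each "content" chunk of one completion.

    Assumes every "content" payload holds a new chunk (a delta), so chunks
    are concatenated in arrival order.
    """
    parts = []
    for trace in traces:
        if trace.get("type") != "completion":
            continue  # other trace types are not part of the completion
        payload = trace.get("payload", {})
        state = payload.get("state")
        if state == "start":
            parts = []
        elif state == "content":
            parts.append(payload.get("content", ""))
            yield "".join(parts)
        elif state == "end":
            return
```

Each yielded string is the full text received so far, so a client can simply replace the displayed message on every iteration.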

Getting Started

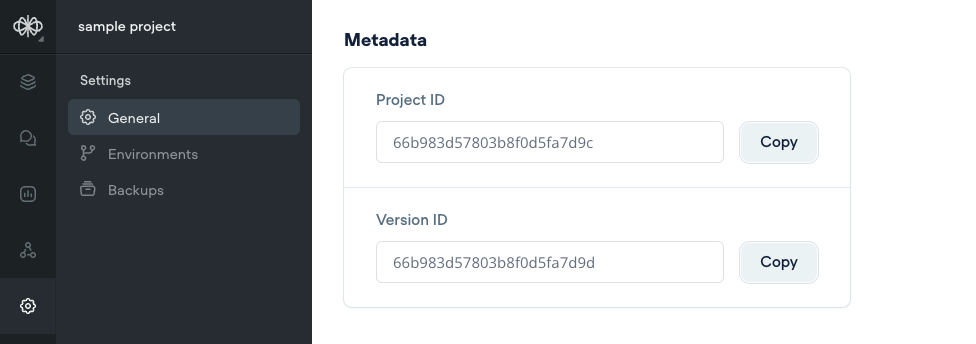

- Find Your projectID: Locate your projectID in the agent's settings page within the Voiceflow Creator. Note that this is not the same as the ID in the URL creator.voiceflow.com/project/.../.

- Include Your API Key: Ensure you include your Voiceflow API Key in the Authorization header of your requests.

Additional Resources

- Documentation:

- Example Project: Check out our streaming-wizard demo project for a practical implementation using Node.js.

Notes

- Compatibility: This new streaming endpoint complements the existing interact endpoint and is designed to enhance real-time communication scenarios.

- Deterministic and Streamed Messages: When using completion_events, you may receive a mix of streamed and fully completed messages. Consider implementing logic in your client application to handle these different message types for a seamless user experience.

- Latency Reduction: By streaming events as they occur, you can significantly reduce perceived latency, keeping users engaged and informed throughout their interaction.
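The note on mixed message types can be illustrated with a small client-side sketch. It treats `text` traces as complete messages and buffers `completion` traces from `start` to `end`. The function name `collect_messages` is illustrative, and it is an assumption that a streamed completion is not also repeated as a `text` trace; if it is, the client would need to de-duplicate.

```python
def collect_messages(traces):
    """Return the user-visible messages from a mixed trace sequence, in order.

    "text" traces are appended as-is; "completion" traces are buffered and
    appended as a single message when the completion ends. Other trace types
    (e.g. "debug") are ignored.
    """
    messages = []
    buffer = None  # None while no completion is in progress
    for trace in traces:
        kind = trace.get("type")
        payload = trace.get("payload", {})
        if kind == "text":
            messages.append(payload.get("message", ""))
        elif kind == "completion":
            state = payload.get("state")
            if state == "start":
                buffer = []
            elif state == "content" and buffer is not None:
                buffer.append(payload.get("content", ""))
            elif state == "end" and buffer is not None:
                messages.append("".join(buffer))
                buffer = None
    return messages
```

A live client would render the buffered chunks as they arrive instead of waiting for `end`; collecting them into a list here keeps the routing logic easy to see.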

We believe this new Streaming API will greatly enhance the interactivity and responsiveness of your Voiceflow agents. We can't wait to see how you leverage this new capability in your projects!

For any questions or support, please reach out to our support team or visit our community forums.