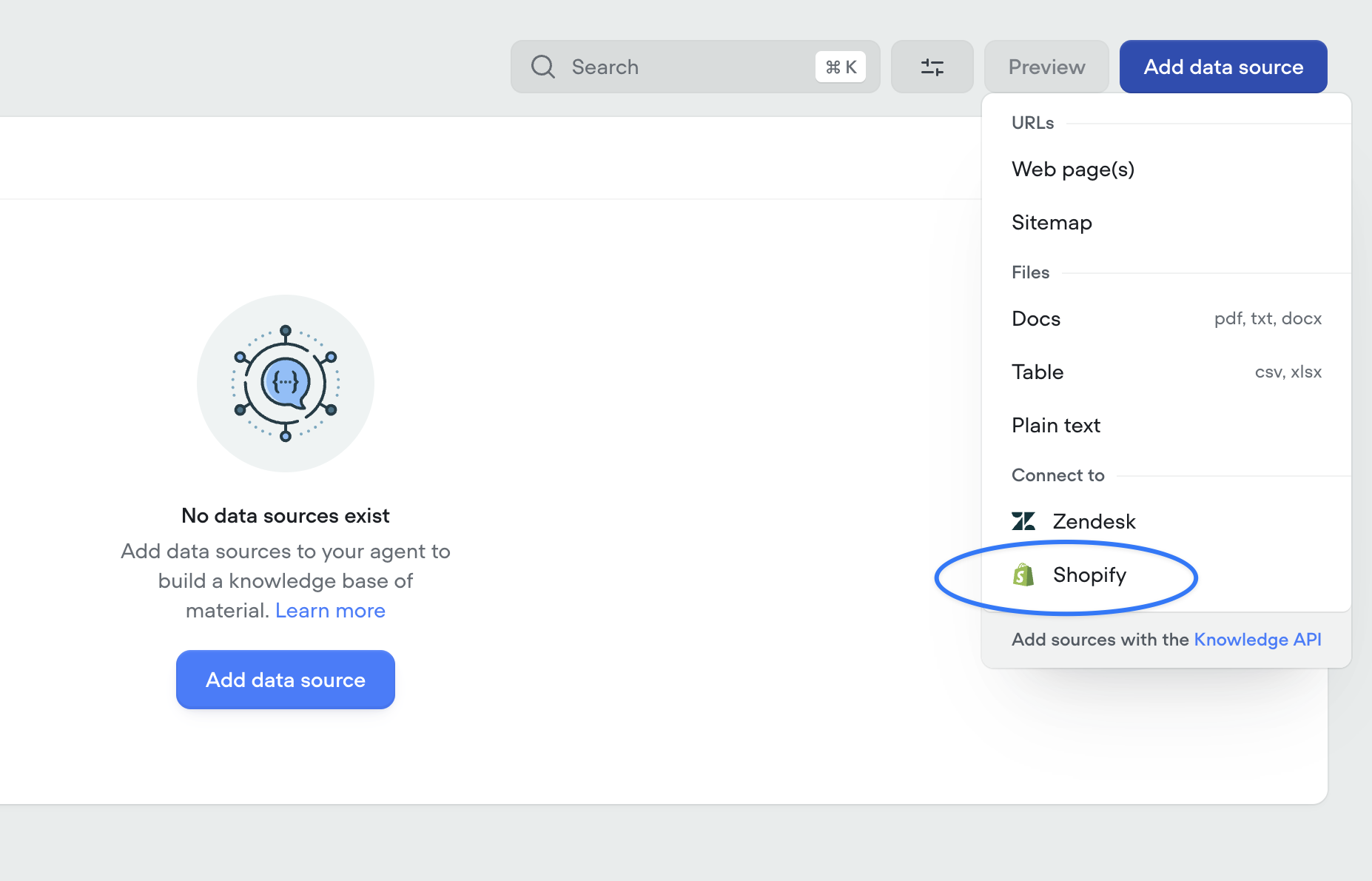

Shopify integration for knowledge base

by Michael Hood

Connect your knowledge base to your Shopify store to give your agent access to always up-to-date product information.

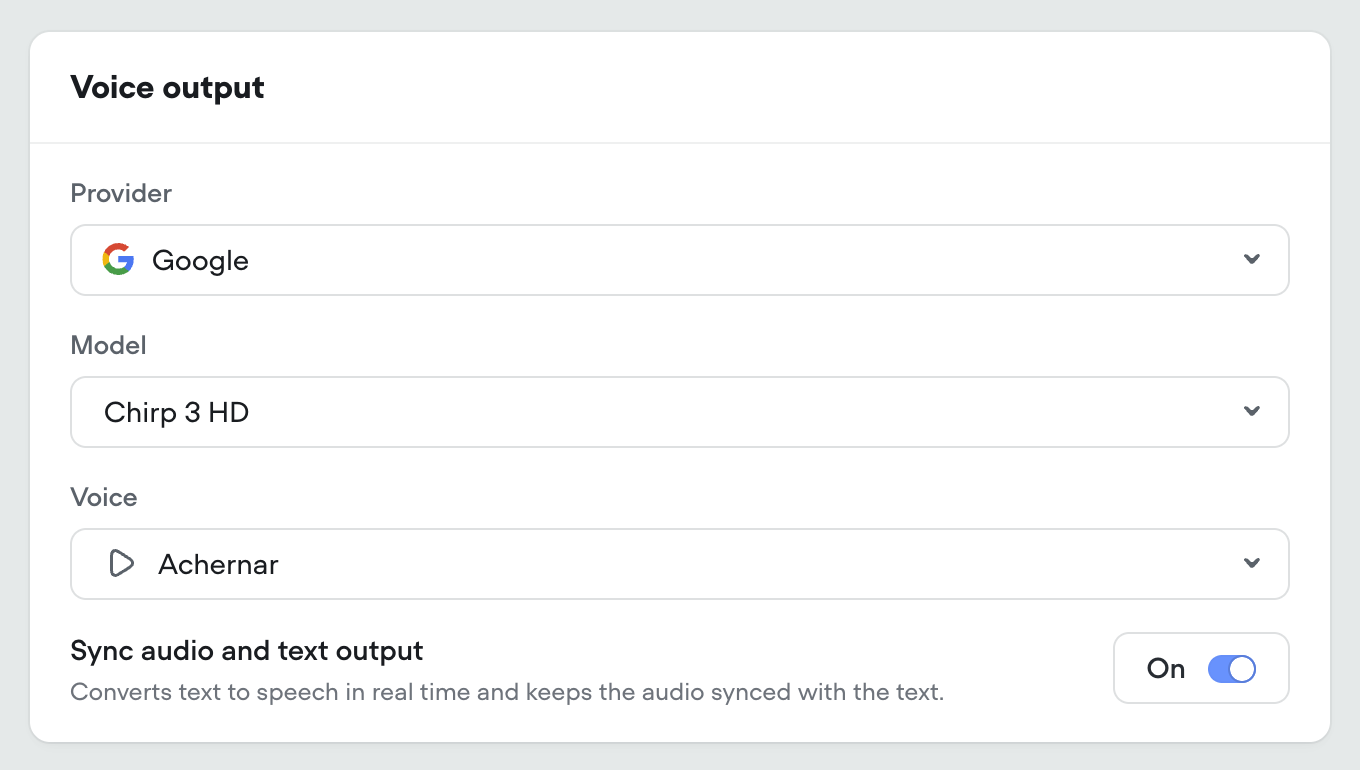

Voiceflow now supports Google's Chirp 3 for speech-to-text and text-to-speech capabilities.

Many new languages and locales are now supported, along with multilingual speech detection.

For more information reference:

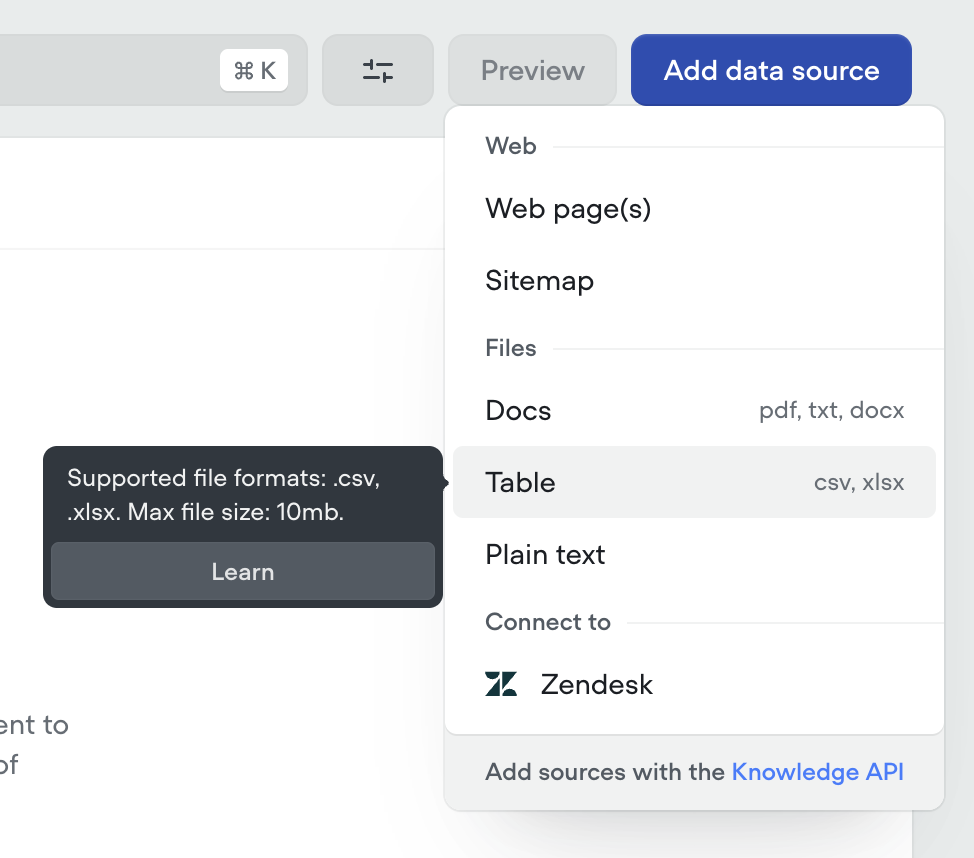

You can now upload spreadsheet files directly into your Knowledge Base. Voiceflow supports both .CSV and .XLSX file formats.

This makes it easy to turn structured data—like FAQs, product catalogs, policies, pricing tables, or support logs—into instantly usable knowledge for your agent without manual copy-pasting or reformatting.

Your agent can now read rows as discrete knowledge entries, reference specific fields as context, and answer questions grounded in large, structured datasets.
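To make "rows as discrete knowledge entries" concrete, here is a minimal Python sketch of how a CSV might be split into one entry per row. It is only an illustration: the function name `rows_to_entries` and the entry shape (`row`, `fields`, `text`) are assumptions made for this example, not Voiceflow's actual ingestion format.

```python
import csv
import io


def rows_to_entries(csv_text: str) -> list[dict]:
    """Turn each CSV row into one self-contained entry (hypothetical format)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    entries = []
    for row_number, row in enumerate(reader, start=1):
        # Join "field: value" pairs so each row can be read on its own.
        text = "; ".join(
            f"{field}: {value}" for field, value in row.items() if value
        )
        entries.append({"row": row_number, "fields": dict(row), "text": text})
    return entries


sample = (
    "question,answer\n"
    "What is the return window?,30 days\n"
    "Do you ship abroad?,Yes\n"
)
entries = rows_to_entries(sample)
print(entries[0]["text"])
```

Keeping the column names next to each value is what lets a single row answer a question without the rest of the sheet.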

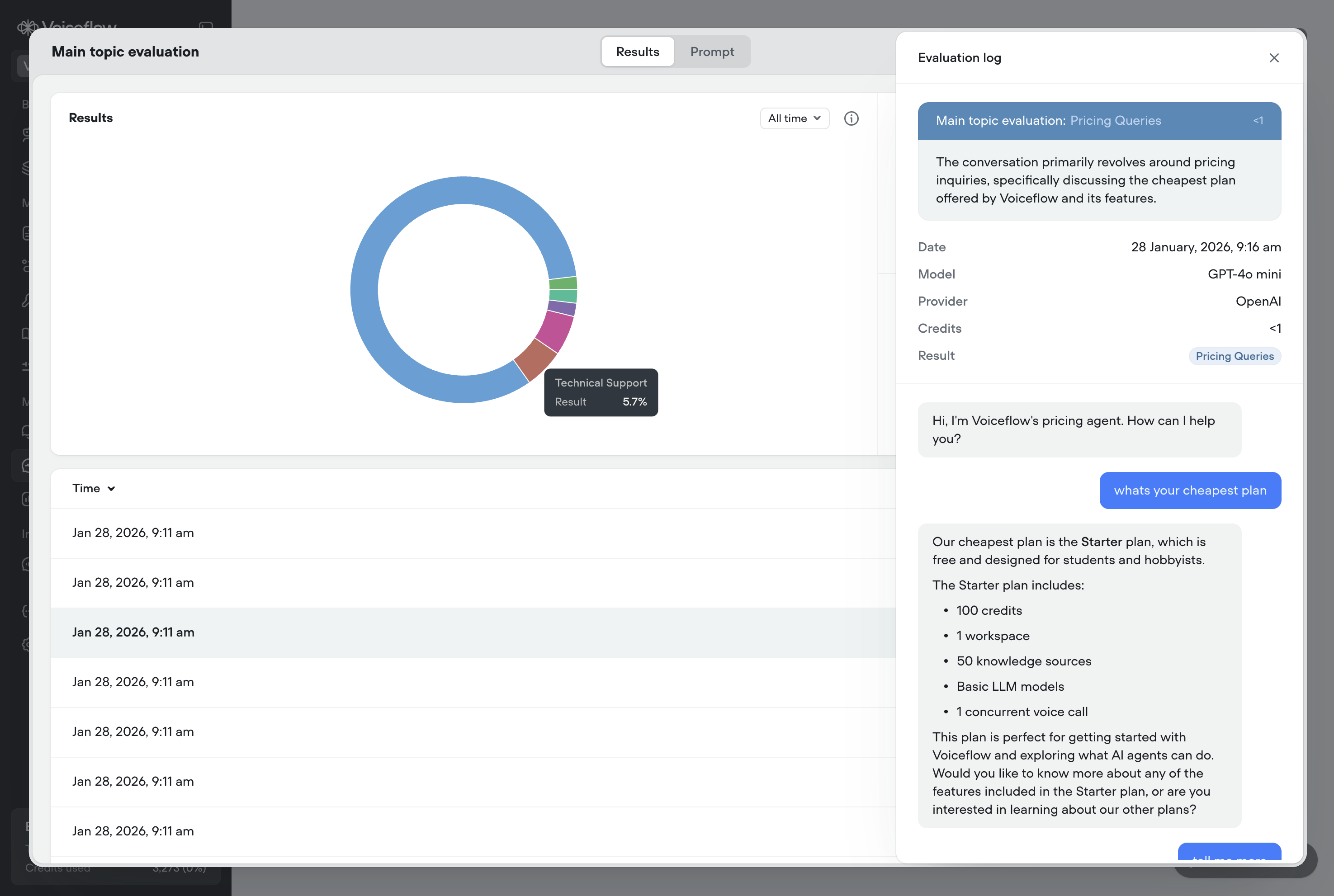

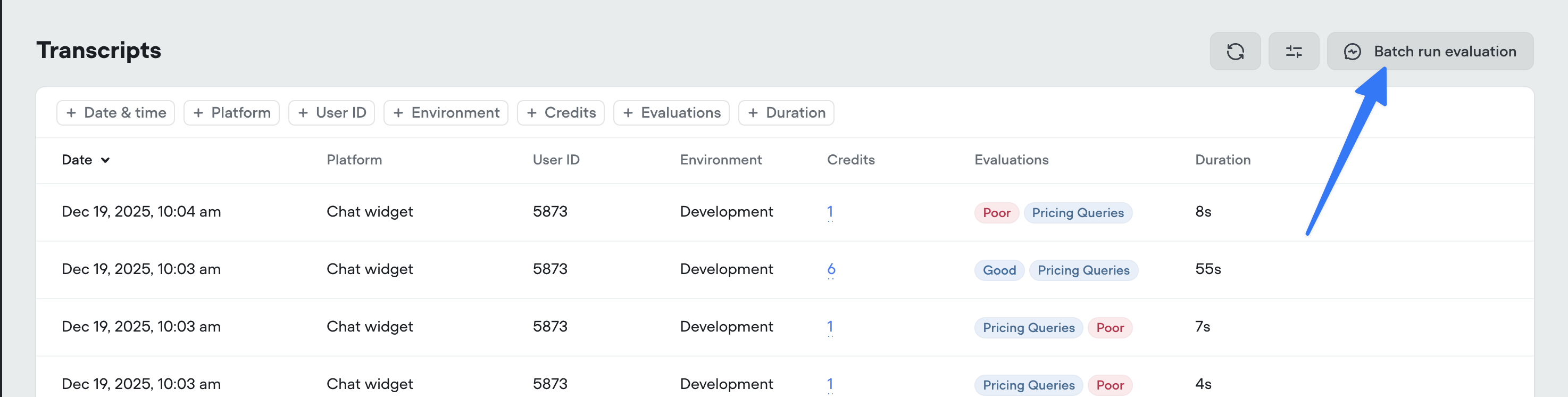

Drive measurable agent improvements with objective, repeatable scoring by evaluating conversations against predefined outcome options instead of subjective criteria.

How do I use this feature?

Tip

To run evaluations retroactively on old transcripts, use the 'Bulk run evaluations' feature in the transcripts tab.
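As a rough illustration of why predefined outcome options make scores repeatable, the Python sketch below tallies evaluation results across a batch of transcripts. The outcome names (`resolved`, `escalated`, `abandoned`) and the `summarize` helper are hypothetical and chosen only for this example; they are not options defined by Voiceflow.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    """Hypothetical predefined outcome options for one evaluation."""
    RESOLVED = "resolved"
    ESCALATED = "escalated"
    ABANDONED = "abandoned"


def summarize(results: list[Outcome]) -> dict[str, float]:
    """Return the share of conversations per outcome, including zero counts."""
    counts = Counter(results)
    total = len(results)
    return {o.value: (counts[o] / total if total else 0.0) for o in Outcome}


# Assumed results from evaluating four transcripts.
results = [Outcome.RESOLVED, Outcome.RESOLVED, Outcome.ESCALATED, Outcome.RESOLVED]
summary = summarize(results)
print(summary)
```

Because every conversation lands in exactly one fixed option, the shares can be compared directly between runs or agent versions.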

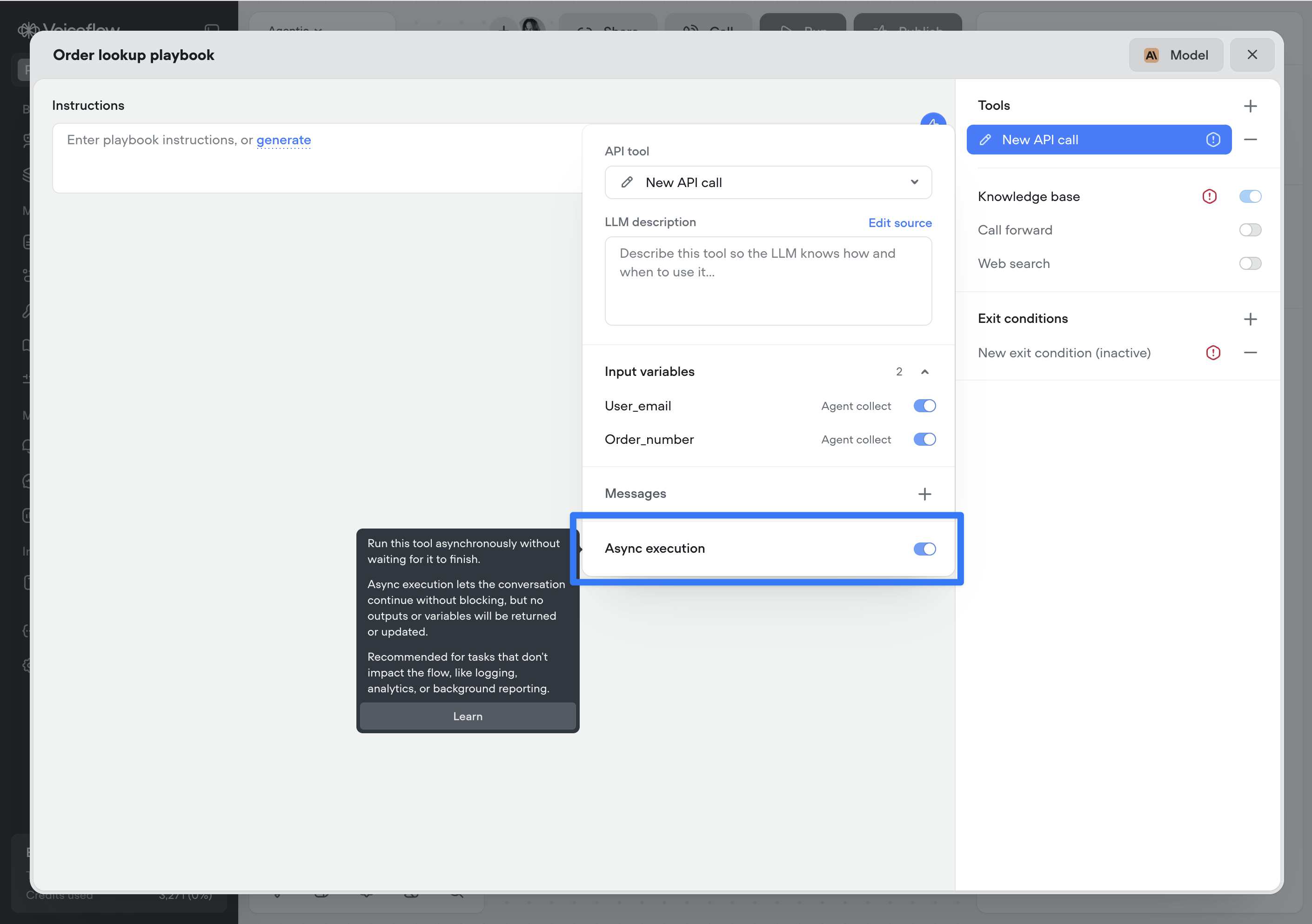

You can now run Function and API tool steps asynchronously.

Async execution allows the conversation to continue immediately without waiting for the tool to complete. As a result, no outputs or variables from the step will be returned or updated.

This is ideal for non-blocking tasks such as logging, analytics, telemetry, or background reporting that don’t affect the conversation.

Note: This setting applies to the reference of the Function or API tool — either where the tool is attached to an agent or where it’s used as a step on the canvas. It is not part of the underlying API or function definition, which allows the same tool to be reused with different async behaviour throughout your project.
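The fire-and-forget behaviour described above can be sketched in plain Python with `asyncio`. This is a conceptual illustration, not Voiceflow's runtime: `log_event` stands in for a hypothetical logging tool, and `run_turn` for a single conversation turn.

```python
import asyncio

log: list[str] = []


async def log_event(event: str) -> None:
    """Hypothetical background tool, e.g. an analytics or logging call."""
    await asyncio.sleep(0.01)  # stands in for network latency
    log.append(event)


async def run_turn() -> list[str]:
    order: list[str] = []
    # Fire and forget: schedule the tool, but do not await its result.
    task = asyncio.create_task(log_event("turn_started"))
    # The conversation continues immediately; the tool has not finished yet.
    order.append("reply sent")
    order.append(f"log size at reply: {len(log)}")
    # Awaited here only so the demo exits cleanly and can show the late effect.
    await task
    order.append(f"log size after tool: {len(log)}")
    return order


result = asyncio.run(run_turn())
print(result)
```

The reply is produced while the log is still empty, which mirrors why an async step cannot return outputs or update variables for the conversation to use.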

Tool messages let you define static messages that are surfaced to the user as a tool progresses through its lifecycle:

This provides clear, predictable feedback during tool execution, improving transparency and user trust—especially for long-running or failure-prone tools.

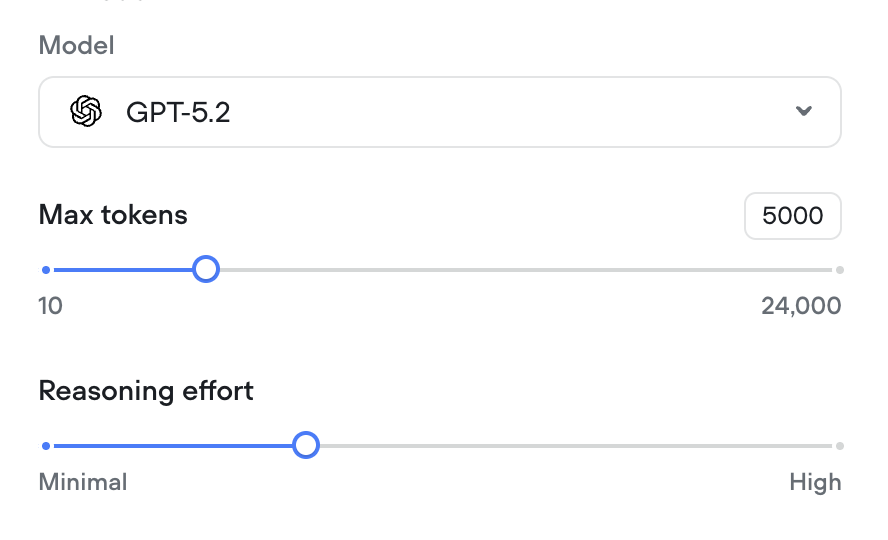

Added global support for GPT 5.2

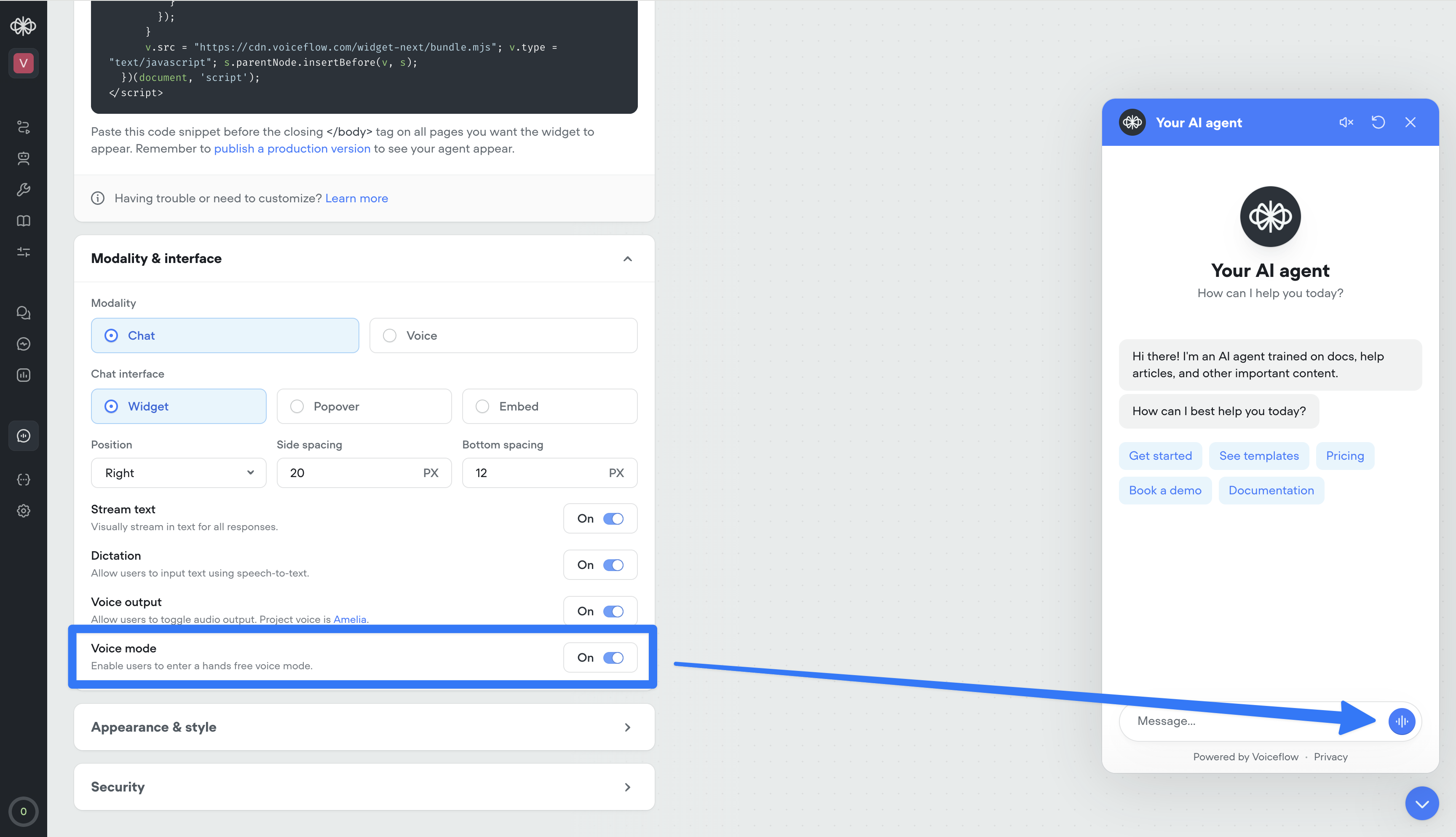

Your web widget now supports hands-free, real-time voice conversations. Enable it from the Widget tab for existing projects — it’s on by default for new ones.

Users can talk naturally, see transcripts stream in instantly, and get a frictionless voice-first experience. It also doubles as an in-browser way to test your phone conversations: no dialing in, just open the widget and run the full voice flow.

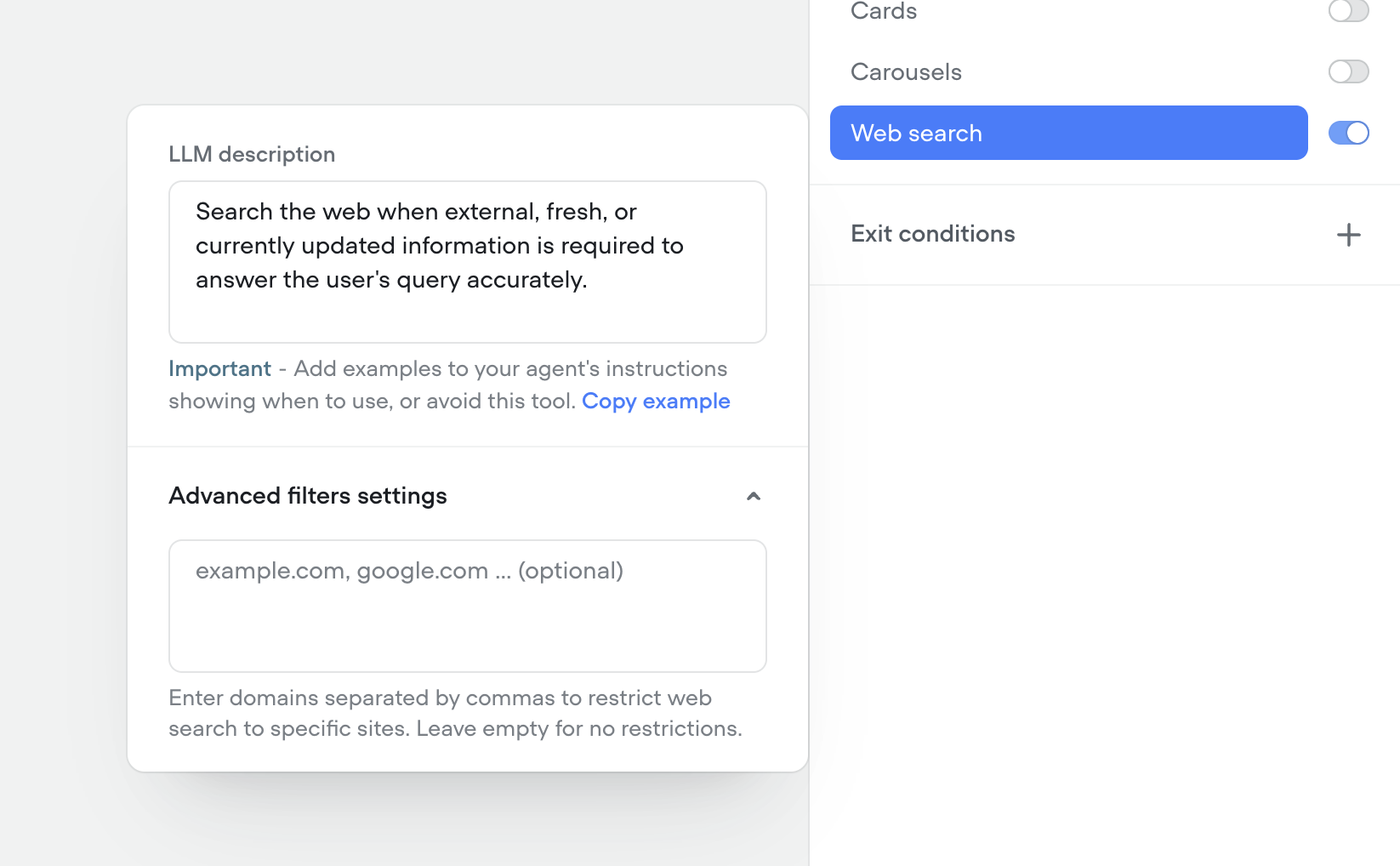

We’ve shipped a native Web Search tool so your agents can look up real-time information on the web mid-conversation—no custom integrations required.

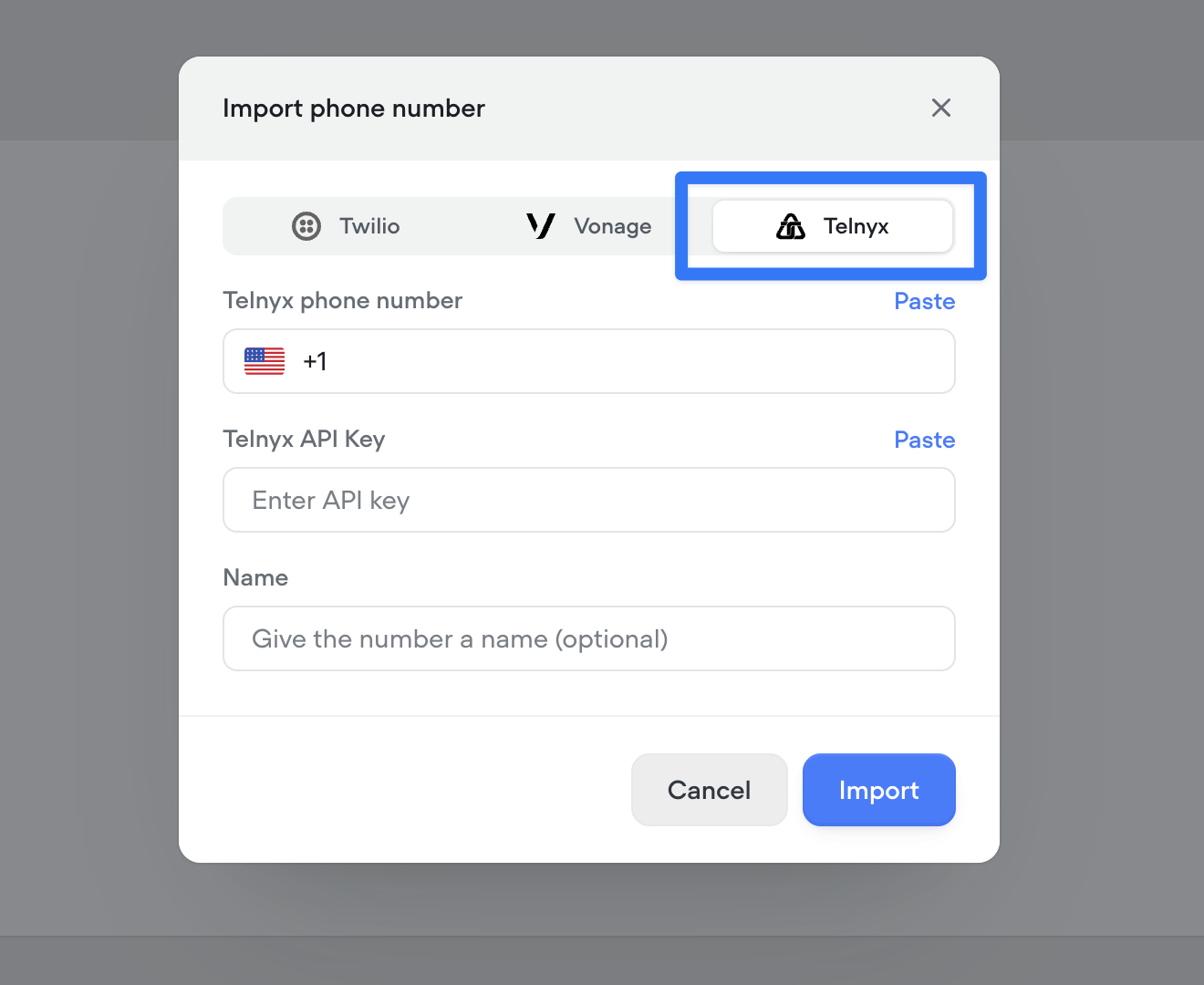

You can now connect your Telnyx account to import and manage phone numbers directly in Voiceflow, enabling Telnyx as your telephony provider for both inbound and outbound calls.